The Alt-Right Has Its Own Comedy TV Show On A Time Warner Network

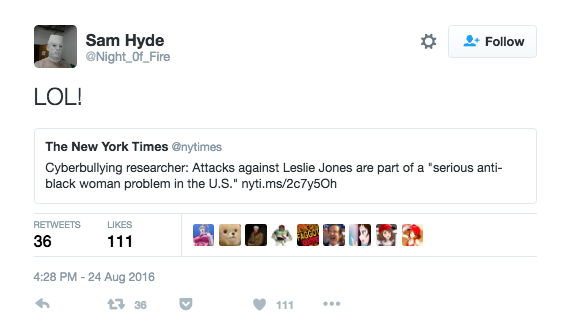

@Night_0f_Fire is in almost every way a quintessential alt-right Twitter user. He supports Donald Trump. He hates Hillary Clinton and questions her health. He retweets the full spectrum of the movement's icons, from macho culture warriors like Mike Cernovich and Pax Dickinson to conspiracy mongers like Alex Jones to overt racists. He calls Lena Dunham a “fat pig,” cheers the demise of Larry Wilmore's Nightly Show, and bemoans the presence of “burkhas in video games.” He mocks Black Lives Matter. His tweets are fully in line with the wildly prolific online movement that has spawned Milo Yiannopoulos, triple parentheses to demarcate Jews, and the term “cuckservative.”

In fact, there's really only one thing that separates @Night_0f_Fire (real name Sam Hyde) from the many other members of the angry, pro-Trump internet movement that grew out of Gamergate into a force capable of roiling American popular and political culture: Hyde has his own television show on Cartoon Network.

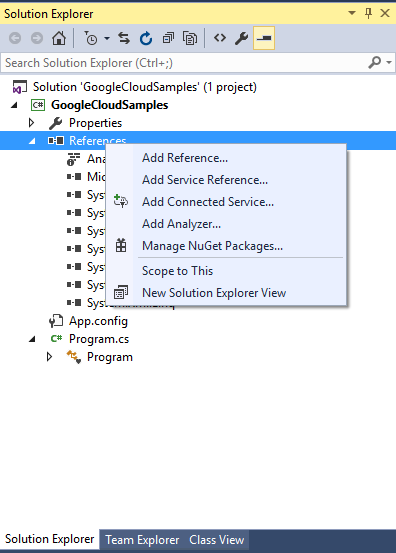

Million Dollar Extreme Presents: World Peace airs every Saturday at 12:15 a.m. on Adult Swim, the 8 p.m. to 6 a.m. incarnation of Cartoon Network famous for its stoner-y animation and sketch comedy. World Peace, which will air its fourth episode this weekend, is the latter. It's the first wide exposure for MDE, a comedy group comprising Hyde and two collaborators that has gained a cult following on the internet and a reputation for being hugely offensive.

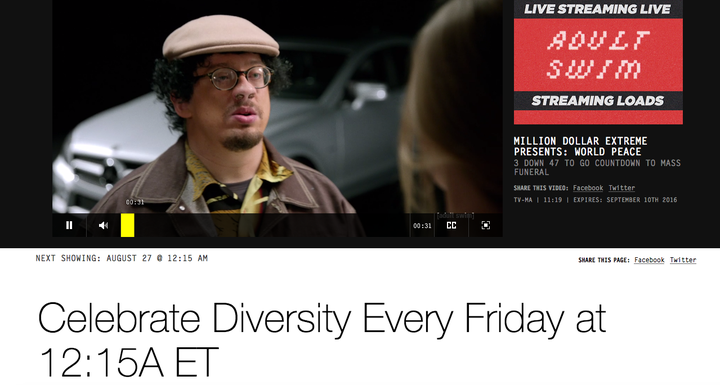

Promotional material for World Peace winks at Hyde's alt-right fans. “Celebrate Diversity Every Friday at 12:15A ET,” reads the tagline on the Adult Swim website. Press copy announcing the show promised that “World Peace will unlock your closeted bigoted imagination, toss your inherent racism into the burning trash, and cleanse your intolerant spirit with pure unapologetic American funny_com.” Though none of the three episodes that have aired so far have touched on politics or the alt-right, they have hardly been in good taste. The most recent episode of the show opened with Hyde, in blackface, speaking in exaggerated black vernacular for three minutes.

According to Showbuzz Daily, World Peace ranked number two among original cable shows on the night of its premiere, with more than a million viewers.

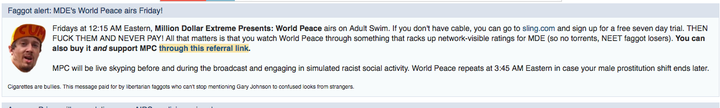

The alt-right, which will attain its greatest notoriety yet when Hillary Clinton gives a speech today denouncing it, has noticed the show. On Twitter, a steady stream of pro-Trump troll accounts has anointed World Peace “the only non cucked TV show” and “redpilled TV” that “will save the west.” A subreddit devoted to the show, moderated by someone claiming to be Hyde, describes itself with the ubiquitous Trump hashtag as “the Best Damn Internet Community™ on God's green earth. #MAGA.” My Posting Career, the 4chan-meets-far-right-politics forum that helped coin “cuckservative,” is running a special “Faggot alert” at the top of the page alerting readers that World Peace airs every Friday.

Reached via phone, Hyde attributed all of the tweets and Reddit posts to “his assistant.” Asked if he was a member of the alt-right, Hyde responded with a question: “Is that some sort of indie book store?”

Turner, which owns Cartoon Network and Adult Swim, responded to a request for comment by forwarding a written statement from an Adult Swim spokesperson:

“Adult Swim’s reputation and success with its audience has always been based on strong and unique comedic voices. Million Dollar Extreme’s comedy is known for being provocative with commentary on societal tropes, and though not a show for everyone, the company serves a multitude of audiences and supports the mission that is specific to Adult Swim and its fans.”

For the Carnegie Mellon and RISD–educated Hyde, World Peace is the latest act in a years-long career of making people uncomfortable. Though MDE has been publishing videos since at least 2009 (an early one is titled “old faggot”), Hyde is probably most famous for a 2013 stunt in which he hijacked a TEDx symposium in Philadelphia and gave a nonsensical presentation called “2070 Paradigm Shift,” to polite applause. More often — and surely the major reason for his popularity among the alt-right — he exploits, sometimes cruelly, cultural sensitivities around race, gender, and sexual orientation.

At a 2013 comedy event in Brooklyn, he performed a shocking set, a recording of which became a minor viral hit titled “Privileged White Male Triggers Oppressed Victims, Ban This Video Now and Block Him.” Hyde began by mocking the “hipster faggot” audience (at which point a few onlookers immediately left), then removed a piece of paper from his back pocket and proceeded to read 15 minutes of anti-gay pseudoscience (“homosexuality is the manifestation of intense perversion and antisocial attitudes”) and outright hate speech (“next time you see a crazy gay person maybe it's not because they were bullied, maybe it's not because of homophobia … maybe it's just because of their faggot brain that's all fucked up”). He concluded by blaming positive portrayals of gay people on television on the “ZOG (Zionist Occupation Government) media machine destroying the family.” At the end of the set, he went outside to argue with some of the people who had left.

Last year, BuzzFeed News reported that a gun- and knife-brandishing internet personality named Jace Connors (who became notorious for claiming to have crashed his car while en route to the home of Brianna Wu, one of the most public victims of Gamergate) was actually the work of a member of MDE named Jan Rankowski, who created the Connors “character” with input from Hyde.

And last fall, Hyde and fellow MDE member Charls Carroll showed up near the Yale campus in New Haven bearing signs reading “All Lives Matter” and “No More Dead Black Children,” then proceeded to film a highly uncomfortable 15-minute video called “Yale Lives Matter” in which Hyde, among other things, lectures a black Apple store security guard that he is “playing a part in an oppressive system,” harangues the black employee of a preppy clothes store for selling “slave owner clothes,” and asks two young white men if they “killed any minorities today.”

This year, Hyde (or his assistant) seems to have decided to cast his lot with the alt-right. Though Hyde has deleted all his tweets from before the new year, since then he's been remarkably consistent in engaging with the major concerns of and personalities in the movement.

The alt-right, which idealizes offensive speech as a principled transgression against a censorious liberal culture, is a natural fit for MDE's comedy, which combines nerdy references to anime and video games with the sinister goofiness of Tim and Eric, the anti-PC mean streak of pre-corporate Vice, and the terminal irony of meme culture. Indeed, MDE and Hyde specifically have been beloved on 4chan, one of the alt-right's incubators, for years.

If Hyde isn't quite of shitlord culture, he most certainly plays along. Earlier this year, Hyde became the possibly witting subject of a series of 4chan-perpetrated hoaxes that named him as the suspect in a series of mass shootings. A first cut of World Peace, aired online as part of an Adult Swim series called Development Meeting, featured a logo that fans quickly figured out was a copy of a symbol that Aurora shooter James Holmes scribbled in his notebooks. (It was cut from the actual broadcast.)

All of which creates the impression that World Peace is one massive in-joke, designed to signify to a group of people online for whom the limits of irony have been misplaced and forgotten: identity content for the worst trolls in the world. After being revealed as the Jace Connors character, Jan Rankowski told BuzzFeed News that the videos had been a satire about an “over-the-top, super-hyper-macho armed Gamergater.”

It's a trap just to read Sam Hyde literally; he's built a career out of making fun of people who take his speech too seriously. But that has not stopped Hyde's alt-right admirers from trying to divine his true politics, in the same way they scan his show for secret messages. The closest they've come is a post by Hyde (or his assistant) on the MDE subreddit from late last year in which he (or his assistant) describes himself as basically a libertarian who believes that “we're putting Western Civ on the alter [sic] as a sacrifice to white guilt because we're worried some frizzy-haired Afro transsexual will wag his finger at us” and that “whites need to regain some sort of cohesive tribal self-interest and identity right now just like everybody else has.”

Whether or not this is genuine is basically unknowable, as Hyde never publicly breaks character. (Though he did add over the phone that “my assistant does a good job.”) It's also beside the point for everyone except the converted (including executives at Adult Swim). Because it's also a trap to not see the seriousness of what Hyde and co. are doing, even if they're LOLing along the way and being disingenuous. Indeed, when the consequences of a culture involve the serial harassment and illegal publication of explicit photographs of a black actress because she had the temerity to stick up for herself, does it really matter whether the people having a laugh over it are in character?

Source: BuzzFeed