The co-founders of MIT spinout KGS Buildings have a saying: “All buildings are broken.” Energy wasted through faulty or inefficient equipment, they say, can lead to hundreds of thousands of dollars in avoidable annual costs.

That’s why KGS aims to “make buildings better” with cloud-based software, called Clockworks, that collects existing data on a building’s equipment, specifically its HVAC (heating, ventilation, and air conditioning) equipment, to detect leaks, breaks, and general inefficiencies, as well as energy-saving opportunities.

The software then translates the data into graphs, metrics, and text that explain monetary losses, and makes that information available through the cloud to building managers, equipment manufacturers, and others.

Building operators can use that information to fix equipment, prioritize repairs, and take efficiency measures — such as using chilly outdoor air, instead of air conditioning, to cool rooms.

“The idea is to make buildings better, by helping people save time, energy, and money, while providing more comfort, enjoyment, and productivity,” says Nicholas Gayeski SM ’07, PhD ’10, who co-founded KGS with Sian Kleindienst SM ’06, PhD ’10 and Stephen Samouhos ’04, SM ’07, PhD ’10.

The software is now operating in more than 300 buildings across nine countries, collecting more than 2 billion data points monthly. The company estimates these buildings will save an average of 7 to 9 percent in avoidable costs per year; the exact figure depends entirely on the building.

“If it’s a relatively well-performing building already, it may see lower savings; if it’s a poor-performing building, it could be much higher, maybe 15 to 20 percent,” says Gayeski, who graduated from MIT’s Building Technology Program, along with his two co-founders.

Last month, MIT commissioned the software for more than 60 of its own buildings, monitoring more than 7,000 pieces of equipment over 10 million square feet. Previously, in a year-long trial for one MIT building, the software saved MIT $286,000.

Benefits, however, extend beyond financial savings, Gayeski says. “There are people in those buildings: What’s their quality of life? There are people who work on those buildings. We can provide them with better information to do their jobs,” he says.

The software can also help buildings earn additional incentives by participating in utility programs. “We have major opportunities in some utility territories, where energy efficiency has been incentivized. We can help buildings meet energy-efficiency goals that are significant in many states, including Massachusetts,” says Alex Grace, director of business development for KGS.

Other customers include universities, health-care and life-science facilities, schools, and retail buildings.

Equipment-level detection

Fault-detection and diagnostics research spans about 50 years — with contributions by early KGS advisors and MIT professors of architecture Les Norford and Leon Glicksman — and about a dozen companies now operate in the field.

But KGS, Gayeski says, is one of a few ventures gathering “equipment-level data” from the sensors, actuators, and meters attached to equipment to measure how it is functioning.

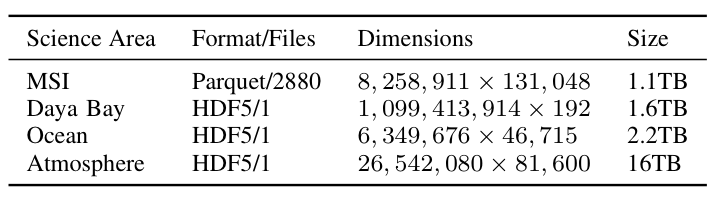

Clockworks sifts through that massive store of data, measuring temperatures, pressures, flows, set points, and control commands, among other things. It’s able to gather a few thousand data points every five minutes — which is a finer level of granularity than meter-level analytics software that may extract, say, a data point every 15 minutes from a utility meter.

“That gives a lot more detail, a lot more granular information about how things are operating and could be operating better,” Gayeski says. For example, Clockworks may detect specific leaky valves or stuck dampers on air handlers in HVAC units that cause excessive heating or cooling.
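As a rough illustration of how a leaky valve can show up in equipment-level trend data, here is a minimal sketch in Python. It is not Clockworks’ actual logic: the point names (`cooling_valve_cmd`, `mixed_air_temp`, `supply_air_temp`), the thresholds, and the rule itself are hypothetical assumptions, and the rule assumes no other cooling source is active. The idea is that when the cooling valve is commanded shut, air leaving the air handler should not be noticeably colder than the air entering the coil; if it persistently is, chilled water may be leaking through the valve.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One five-minute trend sample from an air handler (hypothetical point names)."""
    cooling_valve_cmd: float  # commanded chilled-water valve position, percent open
    mixed_air_temp: float     # air temperature entering the cooling coil, deg F
    supply_air_temp: float    # air temperature leaving the air handler, deg F


def leaky_valve_fraction(samples, closed_pct=1.0, min_drop_f=2.0):
    """Fraction of 'valve closed' samples in which the air is still cooled noticeably.

    With the valve commanded shut, supply air should be about as warm as mixed air
    (or slightly warmer from fan heat). A drop of min_drop_f or more suggests
    chilled water is leaking through. Assumes no other cooling source is active.
    """
    closed = [s for s in samples if s.cooling_valve_cmd <= closed_pct]
    if not closed:
        return 0.0
    cooled = [s for s in closed if s.mixed_air_temp - s.supply_air_temp >= min_drop_f]
    return len(cooled) / len(closed)


def looks_like_leaky_valve(samples, fault_fraction=0.5):
    """Flag a fault only if the symptom persists, not on a single noisy reading."""
    return leaky_valve_fraction(samples) > fault_fraction


# Illustrative trend: three samples with the valve shut, one with it 45% open.
trend = [
    Sample(0.0, 70.0, 62.0),
    Sample(0.0, 71.0, 63.5),
    Sample(0.0, 69.0, 69.5),
    Sample(45.0, 75.0, 55.0),
]
print(leaky_valve_fraction(trend), looks_like_leaky_valve(trend))
```

A utility meter reading, however frequent, would not include the valve command or the coil air temperatures at all; that is the kind of detail equipment-level data adds.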

To make its analyses accurate, KGS employs what Gayeski calls “mass customization of code.” The company has code libraries for each type of equipment it works with — such as air handlers, chillers, and boilers — that can be tailored to specific equipment that varies greatly from building to building.

This makes Clockworks easily scalable, Gayeski says. It also helps the software produce rapid, intelligent analytics, such as accurate graphs, metrics, and text that spell out problems clearly.

Moreover, it helps the software to rapidly equate data with monetary losses. “When we identify that there’s a fault with the right data, we can tell people right away this is worth, say, $50 a day or this is worth $1,000 a day — and we’ve seen $1,000-a-day faults — so that allows facilities managers to prioritize which problems get their attention,” he says.
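The combination of per-equipment-type code libraries, per-unit tailoring, and cost-based prioritization can be sketched roughly as follows. This is a hypothetical illustration, not KGS’s code: the rule functions, point names, default thresholds, and dollar figures are all assumptions (the $50 and $1,000 values simply echo the examples in the quote). Each equipment type maps to a set of rules plus default parameters; an individual unit can override those defaults, and the detected faults are sorted by estimated daily cost so the most expensive problem comes first.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Equipment:
    name: str
    kind: str                  # equipment type, e.g. "air_handler" or "chiller"
    points: dict[str, float]   # averaged readings (hypothetical point names)
    overrides: dict[str, float] = field(default_factory=dict)  # per-unit tailoring


@dataclass
class Fault:
    equipment: str
    description: str
    cost_per_day: float        # estimated avoidable cost, dollars per day


Rule = Callable[[Equipment, dict[str, float]], Optional[Fault]]


def simultaneous_heat_cool(eq: Equipment, p: dict[str, float]) -> Optional[Fault]:
    """Heating and cooling valves open at once means paying for both."""
    open_pct = p["valve_open_pct"]
    if eq.points["heating_valve_pct"] > open_pct and eq.points["cooling_valve_pct"] > open_pct:
        return Fault(eq.name, "simultaneous heating and cooling", p["heat_cool_cost_per_day"])
    return None


def low_chilled_water_delta_t(eq: Equipment, p: dict[str, float]) -> Optional[Fault]:
    """A small return-minus-supply temperature difference wastes pumping energy."""
    delta_t = eq.points["return_temp"] - eq.points["supply_temp"]
    if delta_t < p["min_delta_t"]:
        return Fault(eq.name, f"low chilled-water delta T ({delta_t:.1f} F)", p["delta_t_cost_per_day"])
    return None


# Hypothetical library: rules and default parameters for each equipment type.
LIBRARY: dict[str, dict] = {
    "air_handler": {"rules": [simultaneous_heat_cool],
                    "defaults": {"valve_open_pct": 5.0, "heat_cool_cost_per_day": 50.0}},
    "chiller": {"rules": [low_chilled_water_delta_t],
                "defaults": {"min_delta_t": 8.0, "delta_t_cost_per_day": 200.0}},
}


def run_diagnostics(fleet: list[Equipment]) -> list[Fault]:
    """Apply each unit's type-specific rules, tailored by its overrides, then rank by cost."""
    faults: list[Fault] = []
    for eq in fleet:
        entry = LIBRARY[eq.kind]
        params = {**entry["defaults"], **eq.overrides}  # unit overrides win over defaults
        for rule in entry["rules"]:
            fault = rule(eq, params)
            if fault is not None:
                faults.append(fault)
    return sorted(faults, key=lambda f: f.cost_per_day, reverse=True)


fleet = [
    Equipment("AHU-1", "air_handler", {"heating_valve_pct": 30.0, "cooling_valve_pct": 40.0}),
    Equipment("AHU-2", "air_handler", {"heating_valve_pct": 0.0, "cooling_valve_pct": 60.0}),
    Equipment("CH-1", "chiller", {"supply_temp": 44.0, "return_temp": 48.0},
              overrides={"delta_t_cost_per_day": 1000.0}),  # a large plant, so a costlier fault
]
ranked = run_diagnostics(fleet)
for f in ranked:
    print(f"{f.equipment}: {f.description}, about ${f.cost_per_day:,.0f}/day")
```

Adding a new building in this scheme means describing its equipment and any overrides rather than writing new rules, which is one way such a library could scale.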

KGS Buildings’ foundation

The KGS co-founders met as participants in the MIT entry for the 2007 Solar Decathlon, a competition in which college teams build small-scale, solar-powered homes to display on the National Mall in Washington. Kleindienst worked on lighting systems, while Samouhos and Gayeski worked on mechanical design and energy modeling.

After the competition, the co-founders started a company with the broad goal of making buildings better through energy savings. While pursuing their PhDs, they toyed with various ideas, such as developing low-cost sensing technology with wireless communication that could be retrofitted onto older equipment.

Seeing building data as an emerging tool for fault detection and diagnostics, however, they turned to Samouhos’ PhD dissertation, which focused on building condition monitoring. It came complete with the initial diagnostic code and a framework for an early KGS module.

“We all came together anticipating that the building industry was about to change a lot in the way it uses data, where you take the data, you figure out what’s not working well, and do something about it,” Gayeski says. “At that point, we knew it was ripe to move forward.”

In 2010, they began trialing the software at several locations, including MIT. They found guidance among the seasoned entrepreneurs at MIT’s Venture Mentoring Service, learning to fail fast and often. “That means keep at it, keep adapting and adjusting, and if you get it wrong, you just fix it and try again,” Gayeski says.

Today, the company — headquartered in Somerville, Mass., with 16 employees — is focusing on expanding its customer base and advancing its software into other applications. About 180 new buildings were added to Clockworks in the past year; by the end of 2014, KGS projects it could deploy its software to 800 buildings.

“Larger companies are starting to catch on,” Gayeski says. “Major health-care institutions, global pharmaceuticals, universities, and [others] are starting to see the value and deciding to take action — and we’re starting to take off.”

Liberating data

By bringing all this data about building equipment to the cloud, the technology has plugged into the “Internet of things,” a concept in which objects are connected to the Internet, via embedded chips and other methods, for inventory and other purposes.

Data on HVAC systems have been connected through building automation for some time. KGS, however, can connect that data to cloud-based analytics and extract “really rich information” about equipment, Gayeski says. For instance, he says, the startup assigns a quick-response (QR) code, similar to a barcode, to each piece of equipment it monitors, so people can scan it to read all the data associated with that equipment.

“As more and more devices are readily connected to the Internet, we may be tapping straight into those, too,” Gayeski says.

“And that data can be liberated from its local environment to the cloud,” Grace adds.

Down the road, as technology to monitor houses — such as automated thermostats and other sensors — begins to “unlock the data in the residential scale,” Gayeski says, “KGS could adapt over time into that space, as well.”

Source: Massachusetts Institute of Technology