Ready To Die On Mars? Elon Musk Wants To Send You There

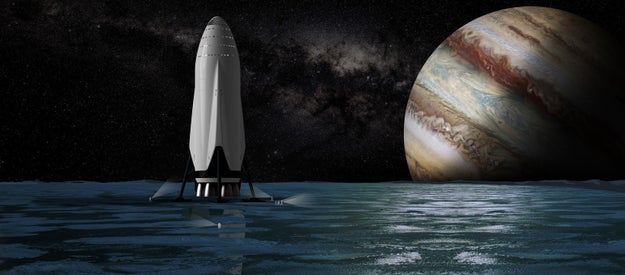

This is what SpaceX's Interplanetary Transport System, which Elon Musk hopes will take people to Mars one day, would look like.

SpaceX / Via Flickr

Elon Musk has said he wants to die on Mars — “just not on impact.” In a speech on Tuesday, Musk outlined how his company Space Exploration Technologies (SpaceX), which has yet to even send a human into orbit, hopes to shuttle people to Mars to forge a self-sustaining civilization within 40 to 100 years.

What the billionaire did not explain, however, is how the people he plans to shuttle there would survive on a planet no human has ever set foot on. In 2002, Musk founded SpaceX with the goal of “making life multi-planetary.” When Musk teased his intent to discuss a plan to colonize Mars in April, he warned, “it’s going to sound pretty crazy.” It does.

“Are you prepared to die? If that’s ok, then you’re a candidate for going,” Musk said. Would he become the first man on Mars himself? Probably not. “I’d definitely need to have a good succession plan because the probability of death is really high on the first mission. And I’d like to see my kids grow up.”

But for those who are willing to risk death – Musk would not advise sending your children – he pulled up a presentation slide that showed SpaceX’s timeline to begin flights to Mars in 2023. The cost of bringing a person to Mars right now is about $10 billion, he said. And his goal is to bring that figure down to $200,000, the median price of a home in the US, and hopefully even lower, to $140,000. Who’s going to pay for it? “Ultimately, this is going to be a huge public-private partnership,” Musk said. He also said he will fund the project with his own money. (Forbes estimates his net worth at $11.7 billion.)

The speech marks a big moment for Musk, and casts aside his troubles on Earth: Tesla, his electric car company, is under federal investigation after a driver died in a crash while one of its cars had the Autopilot system engaged. Several shareholders are suing Tesla as well, after the company made an offer to purchase SolarCity, the solar energy company Musk chairs. Not to mention that a SpaceX rocket carrying a satellite for Facebook’s Internet.org initiative exploded at its launch site, Cape Canaveral Air Force Station, earlier this month.

“If you’re an explorer and you want to be on the frontier and push the envelope and be where things are super exciting, even if it’s dangerous, that’s really who we’re appealing to here,” Musk said. He compared SpaceX’s plans to shuttle people to Mars in spaceships that could fit 100 (and eventually 200) people to the construction of the Union Pacific Railroad, which was built in the late 1800s to connect about two dozen western states. “There’s a tremendous opportunity for anyone who wants to go to Mars to create something new and bold, the foundations of a new planet. Everything from iron refineries to the first pizza joint, things on Mars that people can’t even imagine today that might be unique to Mars,” he said.

People might not be able to imagine them because humans have yet to set foot on Mars. For 40 years, NASA has been sending out rovers, orbiters and landers to learn more about the planet. Scientists and researchers have spent lengthy periods of time in cold, dangerous environments like Antarctica, and on barren volcano slopes in Hawaii, to simulate life on the Red Planet. But dreaming big is perfectly in character for Musk, who started SpaceX in 2002. In 2012, the company’s Dragon capsule became the first commercial spacecraft to deliver cargo safely to the International Space Station for NASA and return to Earth. Since then, it’s been landing (and failing to land) reusable rockets on barges in the middle of the ocean.

The company released a video of its new rocket, which would be the biggest rocket ever, as part of the presentation. It’s called BFR – short for “big fucking rocket.” For scale, Musk pulled up an image of it on the screen behind him. This is what it looked like:

That small man to the right, just a blip, is Elon Musk. He projected the BFR on the screen behind him.

Scott Hubbard, formerly the director of NASA Ames Research Center and its “Mars czar,” told BuzzFeed News that building such a rocket would be an engineering feat. “That’s way beyond anything anyone’s ever built before,” he said. The individual components of Musk’s engineering goals are very optimistic, but not technologically impossible, Hubbard said – it’s not like Musk said he’s trying to build a transporter beam.

“The scale of it, though, is so much larger than anything NASA’s ever done, and I am skeptical about the timeline. The specifics require engineering development that has yet to be done,” Hubbard said. “The history of launch vehicles is littered with failures…rocket science is called rocket science for a reason.”

In a statement after Musk’s presentation finished, NASA said it “applauds all those who want to take the next giant leap – and advance the journey to Mars. We are very pleased that the global community is working to meet the challenges of a sustainable human presence on Mars.”

Still, NASA’s timeline for putting humans on Mars is several years behind Musk’s, and its plans are much less grandiose.

Ellen Stofan, chief scientist at NASA, told BuzzFeed News prior to Musk’s announcement that the agency sees value in its partnership with SpaceX and that the company can help accelerate the dream of getting humans to Mars. But the biggest hindrance is figuring out how to keep humans healthy and sustain life there. Humans lose bone density in space, and radiation levels on Mars are so high that “for humans to stay on Mars for any duration, you’d have to be living underground.”

“When you think about large-scale movement of humans to Mars, it’s just not practical or desirable,” Stofan said. “I think our timeline of aiming to get humans to Mars in the early 2030s, say 2032, is the one that gets people there on a path where we can feel comfortable that we can get them there safely, and get them home safely.”

Then there are other human issues, like the one an audience member raised with Musk in the Q&A after his presentation. He said he came up with the question while at Burning Man, with no plumbing, in a hot, dusty Nevada desert that got chilly in the evenings. Will Mars have toilets?

“There was a lot of shit, and there was no water to take it into the rivers,” he told Musk. “Is this what Mars is going to be like? Just a dusty, waterless shit storm?”

Musk clearly wasn’t prepared for the question.

After all, his presentation touched only lightly on how people would live upon getting to Mars. He presented a simple solution as to how people would be fed: “We can grow plants on Mars just by compressing the atmosphere.”

John Logsdon, the founder and former director of the Space Policy Institute at the George Washington University in DC, said Musk’s presentation lacked details on how any of his goals would be funded, and that it left “lots of open technical issues.”

“This is very much a vision rather than a detailed plan,” Logsdon said. “We need bold visions for anything to happen.”

Source: BuzzFeed