Plugins are the building blocks and the rocket fuel for your WordPress.com website. They not only help make your site faster and easier to manage but also bring essential elements to your fingertips. From email opt-ins and contact forms to SEO, site speed optimization, calendars, and booking options, the list is nearly endless.

If you can imagine it, there’s a good chance there’s a plugin to help you accomplish it on your WordPress.com website.

And because of the vast community of WordPress developers, new plugins are always being added to our marketplace. To help you select the right plugins for your business or passion, we are listing three of our hand-picked, most popular premium plugins below, along with several others recently added to our marketplace.

WooCommerce Bookings

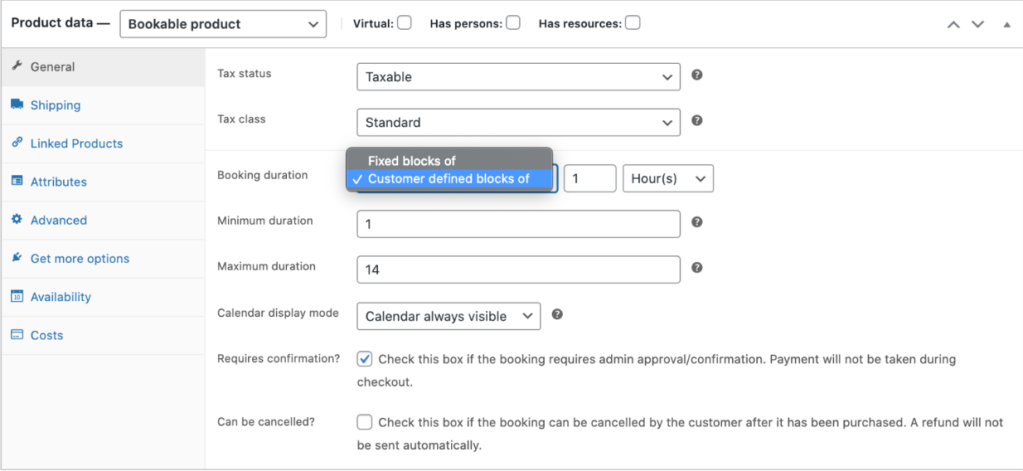

This invaluable plugin allows customers to book appointments, make reservations, or rent equipment. Both you and your customers can save valuable time since there’s no need for phone calls, emails, or other forms of communication to handle bookings. Prospects simply fill out the information themselves.

The benefits of WooCommerce Bookings are hard to overstate. For example, you can:

- Define set options, like fixed time slots for a class, appointment, or guided tour
- Let customers choose the times that work best by giving them the flexibility to book whatever range they need, like checking into a hotel
- Set certain time periods as off limits and un-bookable, providing yourself a buffer between bookings

Perhaps best of all, the plugin integrates seamlessly with your Google Calendar. Use the calendar view to see how your day or month is shaping up. Update existing bookings or availability, or filter to view specific services or resources. And if you have customers who insist on calling in to make bookings the old-fashioned way, you can add them manually from the calendar while you’re on the phone.

Whether you’re running a small bed and breakfast, a fishing guide service, or anything in between, WooCommerce Bookings can give you back valuable time and give your customers a friction-free booking process, so you can focus your energies elsewhere.

View the live demo here.

Purchase WooCommerce Bookings

WooCommerce Subscriptions

With WooCommerce Subscriptions, customers can subscribe to your products or services and then pay for them at the frequency of their choosing — weekly, monthly, or even annually. This level of freedom can be a boon to the bottom line, as it easily sets your business up to enjoy the fruits of recurring revenue.

Better yet, WooCommerce Subscriptions lets you create and manage products with recurring payments and introduce a variety of subscriptions for physical or virtual products and services. For example, you can offer weekly service subscriptions, create product-of-the-month clubs, or even set up yearly software billing packages.

Additional features include:

- Multiple billing schedules available to suit your store’s needs
- Integrates with more than 25 payment gateways for automatic recurring payments
- Accessible through any WooCommerce payment gateway, allowing for manual renewal payments along with automatic email invoices and receipts
- Prevents lost revenue by supporting automatic rebilling on failed subscription payments

Additionally, this plugin offers built-in renewal notifications and automatic emails, which keep you and your customers aware of subscription payments being processed. Your customers can also manage their own subscriptions using the Subscriber’s View page. The page also allows subscribers to suspend or cancel a subscription, change the shipping address or payment method for future renewals, and upgrade or downgrade.

WooCommerce Subscriptions really does put your customers first, giving them the control they want while letting you automate a process that saves time and strengthens your relationship with customers.

Learn more and purchase WooCommerce Subscriptions

AutomateWoo

High on every business owner’s list of goals is the ability to grow the company and earn more revenue. Well, AutomateWoo makes that task much simpler. This powerful, feature-rich plugin delivers a plethora of automated workflows to help you grow your business.

With AutomateWoo, you can create workflows using various triggers, rules, and actions, and then schedule them to run automatically.

For example, you can set up abandoned cart emails, which have been shown to increase the chance of recovering the sale by 63%.
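The trigger, rule, and action idea can be pictured with a small sketch. This is only a conceptual model in Python, not AutomateWoo's actual code or API: the `Cart` and `Workflow` classes, the `abandoned_for` helper, the one-hour delay, and the minimum cart total are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional


@dataclass
class Cart:
    # Hypothetical stand-in for a shopper's cart.
    email: str
    total: float
    last_updated: datetime
    checked_out: bool = False


@dataclass
class Workflow:
    # Hypothetical model: a trigger decides when to fire, rules filter
    # which carts qualify, and the action is what finally happens.
    trigger: Callable[[Cart, datetime], bool]
    rules: List[Callable[[Cart], bool]]
    action: Callable[[Cart], str]

    def run(self, cart: Cart, now: datetime) -> Optional[str]:
        # The action runs only if the trigger fires and every rule passes.
        if self.trigger(cart, now) and all(rule(cart) for rule in self.rules):
            return self.action(cart)
        return None


def abandoned_for(delay: timedelta) -> Callable[[Cart, datetime], bool]:
    # Build a trigger that fires once an unpaid cart has sat idle for `delay`.
    def trigger(cart: Cart, now: datetime) -> bool:
        return not cart.checked_out and now - cart.last_updated >= delay
    return trigger


workflow = Workflow(
    trigger=abandoned_for(timedelta(hours=1)),   # assumed delay
    rules=[lambda cart: cart.total >= 20.0],     # assumed minimum cart value
    action=lambda cart: f"reminder email queued for {cart.email}",
)

now = datetime(2022, 6, 1, 12, 0)
cart = Cart("pat@example.com", 35.0, now - timedelta(hours=2))
result = workflow.run(cart, now)
```

In the plugin itself you would set this up through the WordPress admin rather than in code; the sketch only shows how the three pieces relate to one another.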

One of the key features small business owners are sure to enjoy is the ability to design and send emails using a pre-installed template created for WooCommerce emails in the WordPress editor.

This easy-to-appreciate feature makes it a breeze to send targeted, multi-step campaigns that include incentives for customers. AutomateWoo gives you complete control over campaigns. For example, you can schedule different emails to be sent at intervals or after specific customer interactions; you can also offer incentives using the personalized coupon system.

Also, you can track all emails via a detailed log of every email sent and conversion recorded. Furthermore, with AutomateWoo’s intelligent tracking, you can capture guest emails during checkout.

This premium plugin comes packed with a host of other features as well, including, but not limited to:

- Follow-up emails: Automatically email customers who buy specific products and ask for a review or suggest other products they might like
- SMS notifications: Send text messages to customers or admins for any of AutomateWoo’s wide range of triggers
- Wishlist marketing: Send timed wishlist reminder emails and notify when a wished-for product goes on sale; integrates with WooCommerce Wishlists or YITH Wishlists
- Personalized coupons: Generate dynamic customized coupons for customers to raise purchase rates

AutomateWoo can be an indispensable asset for any business looking to better connect its products or services with the overall experience customers have with them.

Learn more and purchase AutomateWoo

Additional Business-Boosting Plugins

In addition to WooCommerce Bookings, WooCommerce Subscriptions, and AutomateWoo, our marketplace has also launched a number of additional premium plugins, including:

- WooCommerce Points and Rewards: Allows you to reward your customers for purchases and other actions with points that can be redeemed for discounts.
- WooCommerce One Page Checkout: Gives you the ability to create special pages for customers to select products, check out, and pay, all in one place.
- WooCommerce Deposits: Customers can place a deposit or use a payment plan for products.
- Min/Max Quantities: Makes it possible to define minimum/maximum thresholds and multiple/group amounts per product (including variations) to restrict the quantities of items that can be purchased.
- Product Vendors: Enables multiple vendors to sell via your site, and in return take a commission on the sales.
- USPS Shipping Method: Provides shipping rates from the USPS API, with the ability to accurately cover both domestic and international parcels.

Get the Most Out of Your Website

Keep an eye on the plugin marketplace, as we’re continuing to offer premium plugins that help you best serve your site visitors and customers. At WordPress.com, we’re committed to helping you achieve your goals.

To get the most out of your WordPress.com website, upgrade to WordPress Pro, which puts the power of these plugins at your fingertips. Currently, only WordPress Pro plans or legacy Business + eComm customers can purchase plugins.

Source: RedHat Stack