We’re excited to announce the launch of Docker Desktop 4.10. We’ve listened to your feedback, and here’s what you can expect from this release.

Easily find what you need in container logs

If you’re going through logs to find specific error codes and the requests that triggered them — or gathering all logs in a given timeframe — the process should feel frictionless. To make logs more usable, we’ve made a host of improvements to this functionality within the Docker Dashboard.

First, we’ve improved the search functionality in a few ways:

You can begin searching simply by pressing Cmd + F (Mac) or Ctrl + F (Windows).

Search matches in your logs are now highlighted. You can use the right/left arrows or Enter / Shift + Enter to jump between matches while keeping the surrounding log lines in view.

We’ve added regular expression search, in case you want to do things like find all error codes in a range.
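As a rough illustration of a "range" search, the Python sketch below tests a pattern that matches any HTTP 5xx status code (500 to 599) as a standalone token. The log lines are hypothetical, and we assume the Dashboard's search box understands common regex syntax such as `\b` word boundaries and `\d{2}` quantifiers; the snippet is only a local way to check what a pattern catches before you paste it into the search box.

```python
import re

# Hypothetical log lines for illustration; real container output will differ.
LOG_LINES = [
    "GET /api/users 200 12ms",
    "POST /api/orders 502 1034ms",
    "GET /health 200 1ms",
    "GET /api/items 404 3ms",
    "PUT /api/orders/7 500 88ms",
]

# "5" followed by exactly two digits, bounded on both sides by non-word
# characters, so it matches 500-599 but not digits inside "1034ms".
SERVER_ERROR = re.compile(r"\b5\d{2}\b")


def find_server_errors(lines):
    """Return only the lines that contain a 5xx status code."""
    return [line for line in lines if SERVER_ERROR.search(line)]


if __name__ == "__main__":
    for line in find_server_errors(LOG_LINES):
        print(line)
```

To narrow the range further, tighten the character class: for example, `\b50[0-4]\b` matches only 500 through 504.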

Second, we’ve made some usability enhancements:

Smart scroll, so that you don’t have to manually disable “stick to bottom” of logs. When you’re at the bottom of the logs, we’ll automatically stick to the bottom, but the second you scroll up it’ll unstick. If you want to restore this sticky behavior, simply click the arrow in the bottom right corner.

You can now select any external links present within your logs.

Selecting something in the terminal automatically copies that selection to the clipboard.

Third, we’ve added a new feature:

You can now clear a running container’s logs, making it easy to start fresh after you’ve made a change.

Take a tour of the functionality: https://drive.google.com/file/d/12TZjYwQgKcFrIaor1rMLkQxaUfR7KELA/view?usp=sharing

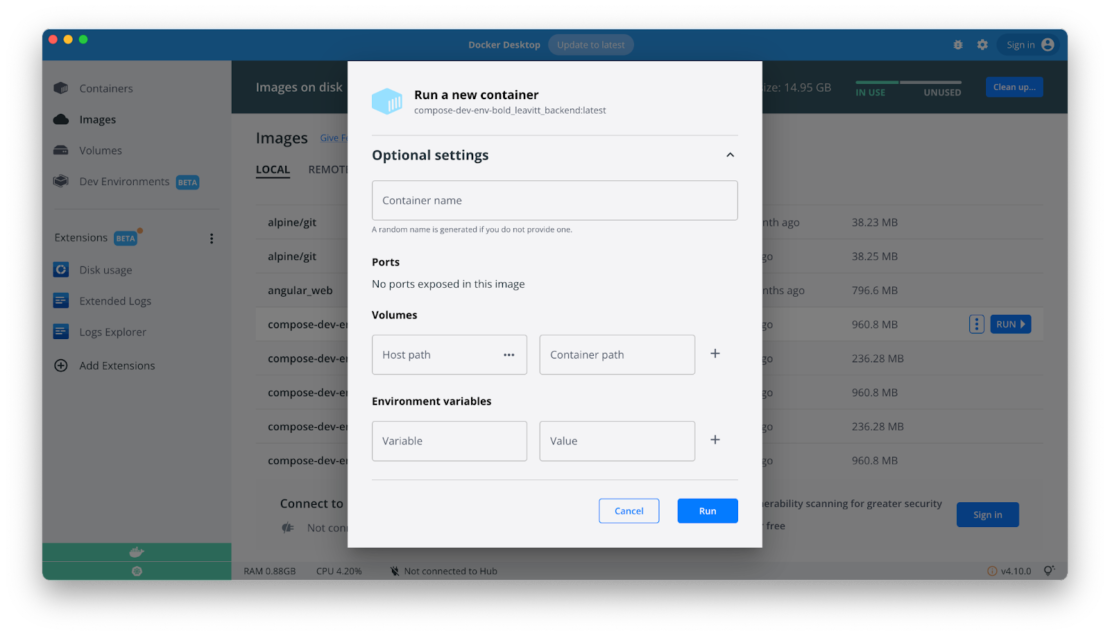

Adding Environment Variables on Image Run

Previously, you could easily add environment variables when starting a container from the CLI, but you’d quickly hit a roadblock when starting containers from the Docker Dashboard: it wasn’t possible to add these variables while running an image. Now, when running a new container from an image, you can add environment variables that take effect immediately at runtime.

We’re also looking into adding some more features that let you quickly edit environment variables in running containers. Please share your feedback or other ideas on this roadmap item.

Containers Overview: bringing back ports, container name, and status

We want to give a big thanks to everyone who left feedback on the new Containers tab. It helped highlight where our changes missed the mark, and helped us quickly address them. In 4.10, we’ve:

Made container names and image names more legible, so you can quickly identify which container you need to manage

Brought back ports on the Overview page

Restored the “container status” icon so you can easily see which containers are running.

Easy Management with Bulk Container Actions

Many people loved the addition of bulk container deletion, which lets users delete everything at once. You can now also start, stop, pause, and resume multiple containers or apps at the same time rather than going one by one, so you can start every app you need for the day in a few clicks. Bulk pausing and resuming also gives you more flexibility: you can pause all containers at once while keeping the Docker Engine running, which lets you continue working in other parts of the Dashboard.

What’s up with the Homepage?

We’ve heard your feedback! When we first rolled out the new Homepage, we wanted to make it easier and faster to run your first container. Based on community feedback, we’re updating how we deliver that Homepage content. In this release, we’ve removed the Homepage so your default starting page is once again the Containers tab.

But don’t worry! While we rework this functionality, you can still access some of our most popular Docker Official Images while no containers are running. If you’d like to share any feedback, please leave it here.

New Extensions are Joining the Lineup

We’re happy to announce the addition of two new extensions to the Extensions Marketplace:

Ddosify – a simple, high-performance, open-source load-testing tool written in Golang. Learn more about Ddosify here.

Lacework Scanner – enables developers to use the Lacework Inline Scanner directly within Docker Desktop. Learn more about Lacework here.

Please help us keep improving

Your feedback and suggestions are essential to keeping us on the right track! You can upvote, comment, or submit new ideas via either our in-product links or our public roadmap. Check out our release notes to learn even more about Docker Desktop 4.10.

Looking to become a new Docker Desktop user? Visit our Get Started page to jumpstart your development journey.

Source: https://blog.docker.com/feed/