HDDs: Older hard drives are more reliable than new ones

An analysis also found that HDDs last, on average, a little under three years. (Hard drives, storage media)

Source: Golem

Asus's Tinker V has enough ports and interfaces to help out with DIY projects or in the IoT space. (Asus, IoT)

Source: Golem

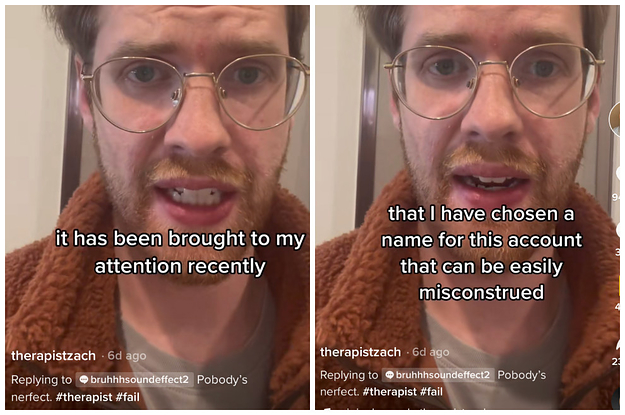

Source: BuzzFeed (“This Therapist Accidentally Gave Himself An Unfortunate Username On TikTok”)

openai.com – We’ve scaled Kubernetes clusters to 7,500 nodes, producing a scalable infrastructure for large models like GPT-3, CLIP, and DALL·E, but also for rapid small-scale iterative research such as Scaling L…

Source: news.kubernauts.io

One of the biggest benefits of our Business and Commerce plans is that you can add additional WordPress plugins to enhance the functionality of your website.

Below, we’re highlighting two of the newest additions to our plugin marketplace, now available at a hefty exclusive discount for WordPress.com users.

Create courses with Sensei Pro

Automattic, the same company behind WordPress.com, creates Sensei LMS. We’ve collaborated to bring our Learning Management System and course creation tools to WordPress.com sites in one seamless experience.

With Sensei Pro, you can create online courses with quizzes, certificates, cohorts, and detailed student reports. Lessons are edited just like WordPress posts or pages, so you will already know how it works.

You can enable the distraction-free Learning Mode to provide students with an optimized mobile-friendly learning environment that keeps your course content front and center.

Learn more about Sensei Pro.

Sell courses with WooCommerce integration

Selling courses can be a lucrative way to generate revenue for your blog or business. Sensei LMS integrates deeply with our WooCommerce suite of tools to make selling access to a course a breeze.

You can also take advantage of WooCommerce extensions to handle coupons, memberships, subscriptions, and more.

Learn more about WooCommerce and Sensei integration.

Publish interactive and private videos

Videos are often a big part of online courses. WordPress.com sites also come with our VideoPress service, which lets you upload videos directly to your site without dealing with a third-party tool or embed codes. This is especially important for video courses: you can mark a video as “private” to ensure it won’t be downloaded or linked to and will only be available to course students.

Even better, with Sensei’s Interactive Videos block, you can add pause points with additional content to any video. For example:

Add a quiz question in the middle of a course video.

Add a contact form to the end of a sales video.

Show links to additional resources in a blog post video.

Add Interactive Videos to any WordPress page or post. They are not limited to courses or lessons.

Learn more about Interactive Videos.

Engage with interactive blocks

In addition to the Interactive Videos, Sensei also comes with interactive and educational content blocks, including:

Quiz questions

Accordions

Image hotspots

Flashcards

Task lists

Using these blocks, you can craft compelling content that captivates and keeps your readers engaged. Whether writing an educational blog post or creating a sales landing page, you can add these blocks to any WordPress page or post.

Learn more about Interactive Blocks.

Getting Sensei Pro and Sensei Blocks

For existing sites on WordPress.com, you’ll find Sensei’s plugins in the plugin marketplace. WordPress.com users receive a 30% discount on Sensei Pro automatically.

Sensei Pro: all course creation tools and interactive blocks included. Get Sensei Pro here.

Sensei Interactive Blocks: interactive videos, accordions, image hotspots, flashcards, and task lists. You don't need to create courses to use these blocks. Get Sensei Interactive Blocks here.

Or, create a brand new site with Sensei Pro bundled here.

Source: RedHat Stack

Pub/Sub schemas are designed to allow safe, structured communication between publishers and subscribers. In particular, schemas guarantee that any published message adheres to a schema and encoding, which the subscriber can rely on when reading the data.

Schemas tend to evolve over time. For example, a retailer captures web events and sends them to Pub/Sub for downstream analytics with BigQuery. The schema now includes additional fields that need to be propagated through Pub/Sub. Until now, Pub/Sub has not allowed the schema associated with a topic to be altered; instead, customers had to create new topics. That limitation changes today: the Pub/Sub team is excited to introduce schema evolution, designed to allow safe and convenient schema updates with zero downtime for publishers or subscribers.

Schema revisions

You can now create a new revision of a schema by updating an existing schema. Most often, schema updates only add or remove optional fields, which is considered a compatible change.

All revisions of the schema are available on the schema details page. You can delete one or more revisions from a schema, but you cannot delete a revision if the schema has only one revision. You can also quickly compare two revisions using the view diff functionality.

Topic changes

Currently, you can attach an existing schema, or create a new one, to be associated with a topic so that Pub/Sub validates all messages published to the topic against the schema. With schema evolution, you can now update a topic to specify a range of schema revisions against which Pub/Sub will try to validate messages, starting with the last revision and working towards the first.
If the first revision is not specified, any revision <= the last revision is allowed; if the last revision is not specified, any revision >= the first revision is allowed.

Schema evolution example

Let’s look at a typical way schema evolution may be used. You have a topic T with an associated schema S. Publishers publish to the topic, and subscribers subscribe to a subscription on the topic.

Now you want to add a new field to the schema and have publishers start including that field in messages. As the topic and schema owner, you may not control updates to all of the subscribers, nor the schedule on which they are updated. You may also be unable to update all of your publishers simultaneously to publish messages with the new schema. You want to update the schema and let publishers and subscribers be updated at their own pace to take advantage of the new field. With schema evolution, you can perform the following steps to add the new field with zero downtime:

1. Create a new schema revision that adds the field.
2. Ensure the new revision is included in the range of revisions accepted by the topic.
3. Update publishers to publish with the new schema revision.
4. Update subscribers to accept messages with the new schema revision.

Steps 3 and 4 can be interchanged, since all schema updates ensure backward and forward compatibility. Once your migration to the new schema revision is complete, you may choose to update the topic to exclude the original revision, ensuring that publishers only use the new schema.

These steps work for both protocol buffer and Avro schemas. However, Avro schemas require some extra care. Your subscriber likely has a version of the schema compiled into it (the “reader” schema), but messages must be parsed with the schema that was used to encode them (the “writer” schema). Avro defines the rules for translating from the writer schema to the reader schema.
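To illustrate the writer-to-reader translation described above, here is a simplified, pure-Python model of Avro's record-resolution rule for flat records. Fields are matched by name. A field that exists only in the reader schema takes its declared default. A field that exists only in the writer schema is ignored. This is a sketch under stated assumptions: it skips type promotion, aliases, and nested types, and it is not a real Avro decoder or part of the Pub/Sub client libraries. The field names (`id`, `url`, `referrer`) are hypothetical.

```python
from __future__ import annotations

from typing import Any


def resolve_record(
    datum: dict[str, Any],
    writer_fields: list[dict[str, Any]],
    reader_fields: list[dict[str, Any]],
) -> dict[str, Any]:
    """Project a flat record decoded with the writer schema onto the reader schema.

    Simplified model: no type promotion, aliases, or nested records.
    """
    writer_names = {field["name"] for field in writer_fields}
    resolved: dict[str, Any] = {}
    for field in reader_fields:
        name = field["name"]
        if name in writer_names:
            # Field exists in both schemas: keep the value the writer encoded.
            resolved[name] = datum[name]
        elif "default" in field:
            # Field is new in the reader schema: fall back to its default.
            resolved[name] = field["default"]
        else:
            raise ValueError(
                f"reader field {name!r} is absent from the writer schema and has no default"
            )
    # Writer-only fields are never copied, so they are dropped.
    return resolved


# Hypothetical revisions: V2 adds an optional field with a null default.
V1_FIELDS = [
    {"name": "id", "type": "long"},
    {"name": "url", "type": "string"},
]
V2_FIELDS = V1_FIELDS + [
    {"name": "referrer", "type": ["null", "string"], "default": None},
]
```

Because the new field has a default, each revision can act as either the reader or the writer schema for the other. An old message read with the new schema gains `referrer: None`, and a new message read with the old schema simply loses `referrer`.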
Pub/Sub only allows schema revisions where both the new schema and the old schema could be used as the reader or writer schema. However, you may still need to fetch the writer schema from Pub/Sub, using the attributes passed in to identify the schema, and then parse using both the reader and writer schemas. Our documentation provides examples on the best way to do this.

BigQuery subscriptions

Pub/Sub schema evolution is also powerful when combined with BigQuery subscriptions, which let you write messages published to Pub/Sub directly to BigQuery. When using the topic schema to write data, Pub/Sub ensures that at least one of the revisions associated with the topic is compatible with the BigQuery table. If you want to update your messages to add a new field that should be written to BigQuery, do the following:

1. Add the OPTIONAL field to the BigQuery table schema.
2. Add the field to your Pub/Sub schema.
3. Ensure the new revision is included in the range of revisions accepted by the topic.
4. Start publishing messages with the new schema revision.

With these simple steps, you can evolve the data written to BigQuery as your needs change.

Quotas and limits

The schema evolution feature comes with the following limits:

Up to 20 revisions per schema name are allowed at any time.
Individual schema revisions do not count against the maximum of 10,000 schemas per project.

Additional resources

Please check out the following additional resources to explore this feature further:

Documentation
Client libraries
Samples
Quotas
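To make the revision-range rule under Topic changes concrete, here is a minimal Python model of it. It assumes that revisions are listed oldest first and that a message is accepted by the newest in-range revision that validates it. The function names, revision IDs, and the field-set "validator" are hypothetical stand-ins. This is not the Pub/Sub client library API, and real validation checks messages against a protocol buffer or Avro schema.

```python
from __future__ import annotations

from typing import Callable, Optional, Sequence

# Hypothetical validator type: returns True if a message matches a revision.
Validator = Callable[[dict], bool]


def allowed_revisions(
    revisions: Sequence[str], first: Optional[str] = None, last: Optional[str] = None
) -> list[str]:
    """Return the inclusive revision range accepted by a topic, oldest first."""
    # An unspecified bound leaves that end of the range open.
    start = revisions.index(first) if first is not None else 0
    end = revisions.index(last) if last is not None else len(revisions) - 1
    if start > end:
        raise ValueError("first revision is newer than last revision")
    return list(revisions[start : end + 1])


def validate(
    message: dict,
    revisions: Sequence[str],
    validators: dict[str, Validator],
    first: Optional[str] = None,
    last: Optional[str] = None,
) -> Optional[str]:
    """Return the first revision (newest to oldest) that accepts the message, else None."""
    for revision in reversed(allowed_revisions(revisions, first, last)):
        if validators[revision](message):
            return revision
    return None


def fields_validator(allowed: set[str], required: set[str]) -> Validator:
    """Toy check (hypothetical): required fields present and no unknown fields."""
    return lambda msg: required <= set(msg) <= allowed


# Hypothetical revisions r1 -> r2 -> r3, each adding one optional field.
REVISIONS = ["r1", "r2", "r3"]
VALIDATORS = {
    "r1": fields_validator({"id", "url"}, {"id", "url"}),
    "r2": fields_validator({"id", "url", "referrer"}, {"id", "url"}),
    "r3": fields_validator({"id", "url", "referrer", "device"}, {"id", "url"}),
}
```

For example, if the topic's range ends at `r2`, a message carrying the `r3`-only field `device` is rejected. Once the range is left open-ended, the same message validates against `r3`.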

Quelle: Google Cloud Platform

Microsoft Azure Data Manager for Energy is the first fully managed OSDU™ Data Platform built for the energy industry. This solution is the first step in unraveling the challenge of data—moving from disparate systems and disconnected applications to a holistic approach. The product’s ideation directly reflects the partnership between Microsoft and SLB, capitalizing on each organization’s unique expertise.

As the energy industry works to achieve a sustainable low carbon future, organizations are taking advantage of the cloud to optimize existing assets and de-risk new ventures. Universally, data is at the core of their digital transformation strategies—yet only a small fraction of energy company data is properly tagged and labeled to be searchable. This leads engineers and geoscientists to spend significant time outside of their expertise trying to discover and analyze data. Azure Data Manager for Energy customers can seamlessly connect to an open ecosystem of interoperable applications from other Independent Software Vendors (ISVs) and the Microsoft ecosystem of productivity tools. Ultimately, the open Microsoft platform enables developers, data managers, and geoscientists alike to innovate the next generation of digital solutions for the energy industry.

Enhanced data openness and liberation in Petrel

“We all benefit from making the world more open. As an industry, our shared goal is that openness in data will enable a fully liberated and connected data landscape. This is the natural next step towards data-driven workflows that integrate technologies seamlessly and leverage AI for diverse and creative solutions that take business performance to the next level.”—Trygve Randen, Director, Data & Subsurface at Digital & Integration, SLB.

The co-build partnership between Microsoft and SLB improves customers’ journey and performance, by unlocking data through interoperable applications. Delfi™ digital platform from SLB on Azure features a broad portfolio of applications, including the Petrel E&P Software Platform. The Petrel E&P Software Platform enhanced with AI enables workflows in Petrel to run with significantly faster compute times and include access to new tools, increasing the flexibility and productivity of geoscientists and engineers.

Microsoft and SLB rearchitected Petrel Data Services to allow Petrel Projects and data to be permanently stored in the customer’s instance. Petrel Data Services leverages core services found in OSDU™, such as partition and entitlement services. This change further aligns Petrel Data Services with the OSDU™ Technical Standard schemas and directly integrates with storage as the system of record. Now when geoscientists or engineers create new Petrel projects or domain data, each is liberated from Petrel into its respective Domain Data Management Service (DDMS) provided by OSDU™, like seismic or wellbore, in Azure Data Manager for Energy. These Petrel liberated projects or data become immediately discoverable in Petrel on Delfi™ Digital Platform or any third-party application developed in alignment with the emerging requirements of the OSDU™ Technical Standard such as INT’s IVAAP.

By splitting Petrel and Project Explorer software as a service (SaaS) applications from the data infrastructure, data resides in Azure Data Manager for Energy without any dependencies on an external app to access that data. Users can access and manage liberated Petrel projects and data in Azure Data Manager for Energy independently of any prerequisite application or license. Microsoft provides a secure, scalable infrastructure that governs data safely in the customer tenant, while SLB focuses on delivering continuous updates to Petrel and Project Explorer on Delfi™ Digital Platform, which expedites feature delivery.

Petrel and Project Explorer on Azure Data Manager for Energy

1. Search for and discover Petrel projects: Petrel Project Explorer shows all Petrel project versions liberated by all users and allows viewing the data associated with each project, based on the corresponding data entitlements. This includes images of the windows created in the project, metadata (coordinate reference systems, time zone, and more), and all data stored in the project. Project Explorer preserves every change throughout the lifetime of a Petrel project, as well as every critical milestone required by regulations or for historical purposes. Data and decisions can be easily shared and connected to other cloud-native solutions on Delfi™ Digital Platform, and automatic, full data lineage and project versioning are always available.

2. Connect Petrel to domain data: Petrel users can consume seismic and wellbore OSDU™ domain data directly from Azure Data Manager for Energy. Furthermore, Petrel Data Services enables the development of diverse and creative solutions for the exploration and production value chain which includes liberated data consumption in other applications like Microsoft Power BI for data analytics.

3. Data liberation: Petrel Data Services empowers Petrel users to liberate Petrel project data into Azure Data Manager for Energy, where data and project content can be accessed without opening Petrel, providing a simpler data overview and in-context access. Data liberation allows direct consumption in other data analytics applications, generating new insights into Petrel projects, breaking down data silos, and improving user and corporate data-driven workflows. It relieves users of Petrel project management and lets them focus on domain workflows for value creation.

Figure 1: Project Explorer on Azure Data Manager for Energy: View all Petrel projects within an organization in one place through an intuitive and performant User Interface (UI).

Interoperable workflows that de-risk and drive efficiency

Both traditional and new energy technical workflows are optimized when data and application interoperability are delivered. Geoscientists and engineers, therefore, want to incorporate as much diverse domain data as possible. Customers want to run more scenarios in different applications, compare results with their colleagues, and ultimately liberate the best data and the knowledge associated with it to a data platform for others to discover and consume. With Petrel and Petrel Data Services powered by Azure Data Manager for Energy, customers achieve this interoperability.

Companies can liberate wellbore and seismic data for discovery in any application developed in alignment with the emerging requirements of the OSDU™ Technical Standard. As Petrel and Petrel Data Services use the standard schemas, all data is automatically preserved and indexed for search, discovery, and consumption. This extensibility model enables geoscientists and engineers as well as data managers to seamlessly access data in their own internal applications. SLB apps on Delfi™ Digital Platform such as Techlog, as well as Microsoft productivity tools including Power BI and an extensive ecosystem of partner apps are all available in this model. Additionally, developers can refocus their efforts on innovating and building new apps—taking advantage of Microsoft Power Platform to build low-code or no-code solutions. This creates the full data-driven loop and ultimately enables integrated workflows for any interoperable apps.

Figure 2: Azure Data Manager for Energy Data Flow connects seamlessly to a broad ecosystem of interoperable applications across Delfi™, Azure Synapse, Microsoft Power Platform, and the ISV ecosystem.

Get started today

Azure Data Manager for Energy helps energy companies gain actionable insights, improve operational efficiency, and accelerate time to market on the enterprise-grade, cloud-based OSDU™ Data Platform. Visit the website to get started.

Source: Azure

Amazon Relational Database Service (Amazon RDS) for MariaDB now supports Amazon RDS Optimized Writes, enabling up to 2x higher write throughput at no additional cost. This is especially useful for RDS for MariaDB customers with write-intensive database workloads, such as those commonly found in applications for digital payments, financial trading, and online gaming.

Source: aws.amazon.com

AWS Glue now offers guided permission setup in the AWS Console. AWS Glue is a serverless data integration and ETL service that helps you discover, prepare, move, and integrate data for analytics and machine learning (ML). Administrators can use the new setup tool to grant IAM roles and users access to AWS Glue and their data, and to set up a default role for running jobs.

Source: aws.amazon.com

Amazon Relational Database Service (Amazon RDS) for PostgreSQL now supports the latest minor versions PostgreSQL 14.7, 13.10, 12.14, and 11.19. We recommend that customers upgrade to the latest minor versions to fix known security vulnerabilities in earlier PostgreSQL versions and to benefit from the bug fixes, performance improvements, and new features added by the PostgreSQL community. For more information about the release, see the PostgreSQL community announcement.
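A common pitfall when checking whether an instance is behind the minor versions listed above is comparing version strings lexically. As strings, "13.9" sorts after "13.10". The minimal Python sketch below compares versions numerically instead. The version table is copied from this announcement, the function names are hypothetical, and in practice the current engine version would come from the RDS console or API.

```python
from __future__ import annotations

# Latest minor version per major version, as listed in this announcement.
LATEST_MINOR: dict[int, tuple[int, int]] = {
    14: (14, 7),
    13: (13, 10),
    12: (12, 14),
    11: (11, 19),
}


def parse_version(version: str) -> tuple[int, int]:
    """Turn a 'major.minor' string such as '13.9' into an integer tuple (13, 9)."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def needs_minor_upgrade(current: str) -> bool:
    """Hypothetical helper: True if `current` is older than the listed latest minor."""
    major, minor = parse_version(current)
    latest = LATEST_MINOR.get(major)
    if latest is None:
        raise ValueError(f"major version {major} is not covered by this announcement")
    # Integer tuples compare numerically, so (13, 9) < (13, 10) as intended.
    return (major, minor) < latest
```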

Source: aws.amazon.com