We’re witnessing a critical turning point in the market as AI moves from the drawing boards of innovation into the concrete realities of everyday life. The leap from potential to practical application marks a pivotal chapter, and you, as developers, are key to bringing it to bear.

The news at Build is focused on the top demands we’ve heard from all of you as we’ve worked together to turn this promise of AI into reality:

Empowering every developer to move with greater speed and efficiency, using the tools you already know and love.

Expanding and simplifying access to the AI, data, and application platform services you need to be successful so you can focus on building transformational AI experiences.

And, helping you focus on what you do best—building incredible applications—with responsibility, safety, security, and reliability features, built right into the platform.

I’ve been building software products for more than two decades now, and I can honestly say there’s never been a more exciting time to be a developer. What was once a distant promise is now manifesting—and not only through the type of apps that are possible, but how you can build them.

With Microsoft Azure, we’re meeting you where you are today—and paving the way to where you’re going. So let’s jump right into some of what you’ll learn over the next few days. Welcome to Microsoft Build 2024!

Create the future with Azure AI: offering you tools, model choice, and flexibility

The number of companies turning to Azure AI continues to grow as the list of what’s possible expands. We’re helping more than 50,000 companies around the globe achieve real business impact using it—organizations like Mercedes-Benz, Unity, Vodafone, H&R Block, PwC, SWECO, and so many others.

To make it even more valuable, we continue to expand the range of models available to you and simplify the process for you to find the right models for the apps you’re building. You can learn more about all Azure AI updates we’re announcing this week over on the Tech Community blog.

Azure AI Studio, a key component of the copilot stack, is now generally available. The pro-code platform empowers responsible generative AI development, including the development of your own custom copilot applications. The seamless development approach includes a friendly user interface (UI) and code-first capabilities, including Azure Developer CLI (AZD) and AI Toolkit for VS Code, enabling developers to choose the most accessible workflow for their projects.

Developers can use Azure AI Studio to explore AI tools; orchestrate multiple interoperating APIs and models; ground models in their own data using retrieval augmented generation (RAG) techniques; test and evaluate models for performance and safety; and deploy at scale with continuous monitoring in production.
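To make the grounding step concrete, here is a minimal sketch of the idea behind RAG: find the passages most relevant to a question, then place them in the prompt ahead of the question. It is a toy under stated assumptions, not how Azure AI Studio implements it. Retrieval here is simple word overlap rather than a real search index, no model is called, and every function name and sample document is hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question: str, documents: list[str]) -> str:
    """Place the retrieved passages ahead of the question in the prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical company data used to ground the model.
docs = [
    "Refunds are processed within 5 business days.",
    "Our support line is open on weekdays from 9 to 5.",
    "Shipping is free for orders over 50 dollars.",
]
print(build_grounded_prompt("How long do refunds take?", docs))
```

In a real application the word-overlap ranking would typically be replaced by a search service or vector index, and the assembled prompt would be sent to a deployed model.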

Empowering you with a broad selection of small and large language models

Our model catalog is the heart of Azure AI Studio. With more than 1,600 models available, we continue to innovate and partner broadly to bring you the best selection of frontier and open large language models (LLMs) and small language models (SLMs), so you have the flexibility to select models based on what your business needs. We’re also making it easier to find the best model for your use case by comparing model benchmarks, like accuracy and relevance.

I’m excited to announce OpenAI’s latest flagship model, GPT-4o, is now generally available in Azure OpenAI Service. This groundbreaking multimodal model integrates text, image, and audio processing in a single model and sets a new standard for generative and conversational AI experiences. Pricing for GPT-4o is $5/1M Tokens for input and $15/1M Tokens for output.
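As a rough illustration of what these per-token prices mean for a single request, the arithmetic can be sketched as follows. Only the two prices come from the announcement above; the helper name and the token counts are made up for the example, and actual billing may vary by region or deployment type.

```python
# Prices quoted in this post for GPT-4o, in US dollars per 1 million tokens.
INPUT_PRICE_PER_MILLION = 5.00
OUTPUT_PRICE_PER_MILLION = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its token counts (hypothetical helper)."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Example: a 2,000-token prompt that produces a 500-token answer.
print(f"${estimate_cost_usd(2_000, 500):.4f}")
```

Because output tokens cost three times as much as input tokens at these rates, long generated answers tend to dominate the bill more than long prompts do.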

Earlier this month, we enabled GPT-4 Turbo with Vision through Azure OpenAI Service. With these new models developers can build apps with inputs and outputs that span across text, images, and more, for a richer user experience.
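To show what an input that spans text and images can look like, here is a sketch of how a combined text-and-image user message is commonly structured for chat models that accept images. It only builds the message payload and sends nothing. The helper name and the placeholder bytes are hypothetical, and the exact fields a given deployment accepts should be checked against the Azure OpenAI Service documentation.

```python
import base64


def build_image_question(
    question: str, image_bytes: bytes, mime_type: str = "image/png"
) -> list[dict]:
    """Build a chat message list that pairs a text question with an inline image."""
    # Encode the image as a base64 data URL so it can travel inside the JSON body.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


# Placeholder bytes stand in for a real image file read from disk.
messages = build_image_question("What trend does this chart show?", b"fake-image-bytes")
print(messages[0]["content"][0]["text"])
```

The resulting list is the kind of value typically passed as the messages argument of a chat completion request.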

We’re announcing new models through Models-as-a-Service (MaaS) in Azure AI Studio: the leading Arabic language model Core42 JAIS and TimeGen-1 from Nixtla are now available in preview. Models from AI21, Bria AI, Gretel Labs, NTT DATA, and Stability AI, as well as Cohere Rerank, are coming soon.

Phi-3: Redefining what’s possible with SLMs

At Build we’re announcing Phi-3-small, Phi-3-medium, and Phi-3-vision, a new multimodal model, in the Phi-3 family of small language models (SLMs) developed by Microsoft. Phi-3 models are powerful, cost-effective, and optimized for resource-constrained environments, including on-device, edge, and offline inference, as well as latency-bound scenarios where fast response times are critical.

Introducing Phi-3: Groundbreaking performance at a small size

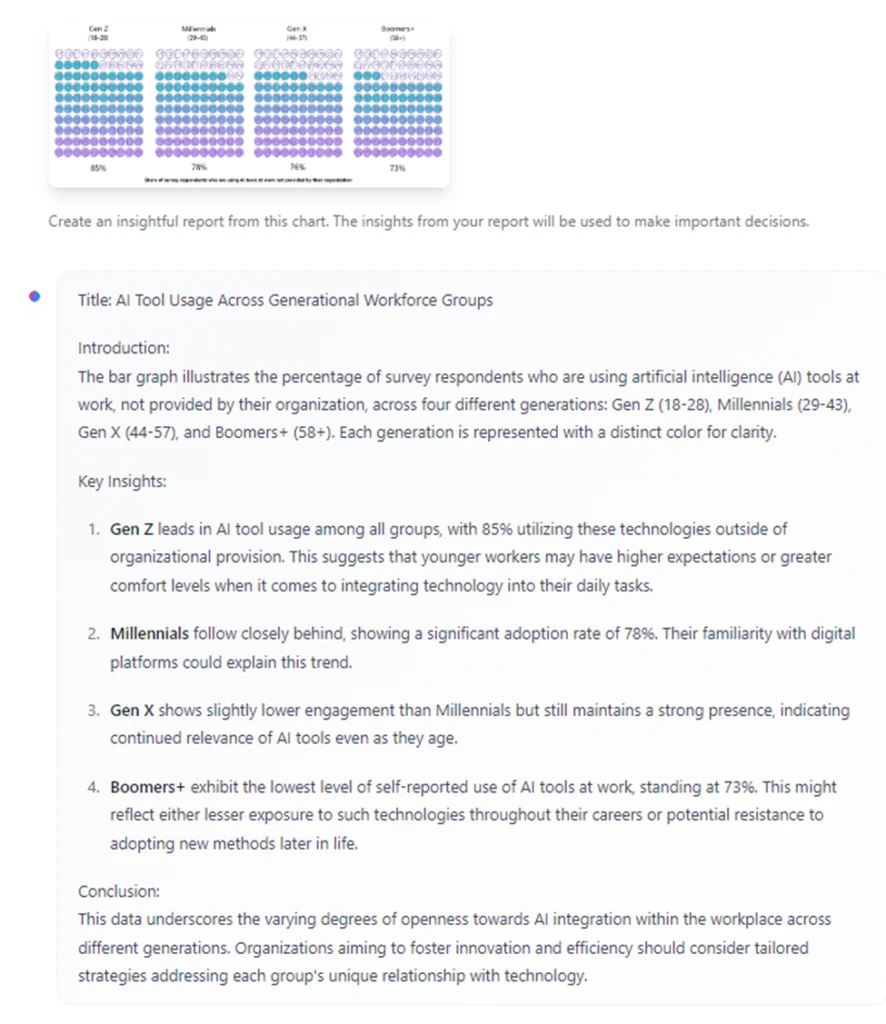

Sized at 4.2 billion parameters, Phi-3-vision supports general visual reasoning tasks and chart/graph/table reasoning. The model offers the ability to input images and text, and to output text responses. For example, users can ask questions about a chart or ask an open-ended question about specific images. Phi-3-mini and Phi-3-medium are also now generally available as part of Azure AI’s MaaS offering.

In addition to new models, we are adding new capabilities across APIs to enable multimodal experiences. Azure AI Speech has several new features in preview including Speech analytics and Video translation to help developers build high-quality, voice-enabled apps. Azure AI Search now has dramatically increased storage capacity and up to 12X increase in vector index size at no additional cost to run RAG workloads at scale.

Bring your intelligent apps and ideas to life with Visual Studio, GitHub, and the Azure platform

The tools you choose to build with should make it easy to go from idea to code to production. They should adapt to where and how you work, not the other way around. We’re sharing several updates to our developer and app platforms that do just that, making it easier for all developers to build on Azure.

Access Azure services within your favorite tools for faster app development

By extending Azure services natively into the tools and environments you’re already familiar with, you can more easily build and be confident in the performance, scale, and security of your apps.

We’re also making it incredibly easy for you to interact with Azure services from where you’re most comfortable: a favorite dev tool like VS Code, or even directly on GitHub, regardless of previous Azure experience or knowledge. Today, we’re announcing the preview of GitHub Copilot for Azure, extending GitHub Copilot to increase its usefulness for all developers. You’ll see other examples of this from Microsoft and some of the most innovative ISVs at Build, so be sure to explore our sessions.

Also in preview today is the AI Toolkit for Visual Studio Code, an extension that provides development tools and models to help developers acquire and run models, fine-tune them locally, and deploy to Azure AI Studio, all from VS Code.

Updates that make cloud native development faster and easier

.NET Aspire has arrived! This new cloud-native stack simplifies development by automating configurations and integrating resilient patterns. With .NET Aspire, you can focus more on coding and less on setup while still using your preferred tools. This stack includes a developer dashboard for enhanced observability and diagnostics right from the start for faster and more reliable app development. Explore more about the general availability of .NET Aspire on the DevBlogs post.

We’re also raising the bar on ease of use in our application platform services, introducing Azure Kubernetes Service (AKS) Automatic, the easiest managed Kubernetes experience to take AI apps to production. In preview now, AKS Automatic builds on our expertise running some of the largest and most advanced Kubernetes applications in the world, from Microsoft Teams to Bing, Xbox online services, Microsoft 365, and GitHub Copilot, to create best practices that automate everything from cluster setup and management to performance and security safeguards and policies.

As a developer you now have access to a self-service app platform that can move from container image to deployed app in minutes while still giving you the power of accessing the Kubernetes API. With AKS Automatic you can focus on building great code, knowing that your app will be running securely with the scale, performance and reliability it needs to support your business.

Data solutions built for the era of AI

Developers are at the forefront of a pivotal shift in application strategy which necessitates optimizations at every tier of an application—including databases—since AI apps require fast and frequent iterations to keep pace with AI model innovation.

We’re excited to unveil new data and analytics features this week designed to assist you in the critical aspects of crafting intelligent applications and empowering you to create the transformative apps of today and tomorrow.

Enabling developers to build faster with AI built into Azure databases

Vector search is core to any AI application so we’re adding native capabilities to Azure Cosmos DB with Azure Cosmos DB for NoSQL. Powered by DiskANN, a powerful algorithm library, this makes Azure Cosmos DB the first cloud database to offer lower latency vector search at cloud scale without the need to manage servers.
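The idea behind vector search can be sketched in a few lines: store an embedding for each item, then return the items whose embeddings point in the direction closest to the query embedding. The sketch below does an exact scan with cosine similarity over three toy vectors. It does not reflect how DiskANN or Azure Cosmos DB work internally, since approximate nearest-neighbor indexes exist precisely to avoid scanning every vector at scale. All names and values are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(
    query: list[float], index: dict[str, list[float]], top_k: int = 2
) -> list[tuple[str, float]]:
    """Score every stored vector against the query and return the best matches."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional "embeddings"; real embeddings have hundreds or thousands of dimensions.
index = {
    "cat-care": [0.9, 0.1, 0.0],
    "dog-training": [0.8, 0.3, 0.1],
    "tax-filing": [0.0, 0.2, 0.9],
}
for doc_id, score in search([1.0, 0.2, 0.0], index):
    print(doc_id, round(score, 3))
```

The two pet-related items rank ahead of the tax item because their vectors point in nearly the same direction as the query.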

We’re also announcing the availability of the Azure Database for PostgreSQL extension for Azure AI to make bringing AI capabilities to data in PostgreSQL even easier. Now generally available, this extension enables developers who prefer PostgreSQL to plug data directly into Azure AI for a simplified path to using LLMs and building rich PostgreSQL generative AI experiences.

Embeddings enable AI models to better understand relationships and similarities between data, which is key for intelligent apps. Azure Database for PostgreSQL in-database embedding generation is now in preview so embeddings can be generated right within the database—offering single-digit millisecond latency, predictable costs, and the confidence that data will remain compliant for confidential workloads.

Making developer life easier through in-database Copilot capabilities

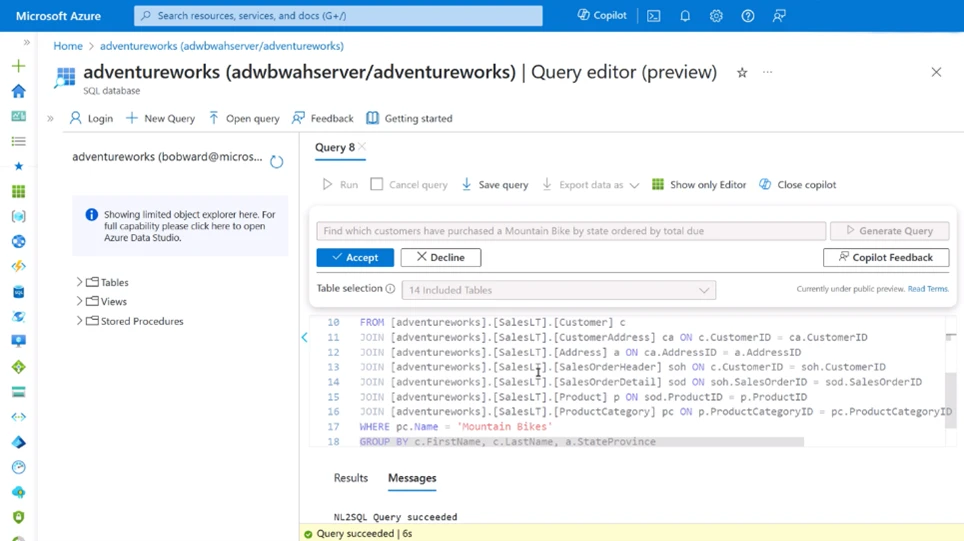

These databases are not only helping you build your own AI experiences. We’re also applying AI directly in the user experience so it’s easier than ever to explore what’s included in a database. Now in preview, Microsoft Copilot capabilities in Azure SQL DB convert natural language queries into SQL, so developers can use natural language to interact with data. And Copilot capabilities are coming to Azure Database for MySQL to provide summaries of technical documentation in response to user questions, creating an all-around easier and more enjoyable management experience.

Microsoft Copilot capabilities in the database user experience

Microsoft Fabric updates: Build powerful solutions securely and with ease

We have several Fabric updates this week, including the introduction of Real-Time Intelligence. This completely redesigned workload enables you to analyze, explore, and act on your data in real time. Also coming at Build: the Workload Development Kit in preview, making it even easier to design and build apps in Fabric. And our Snowflake partnership expands with support for the Iceberg data format and bi-directional read and write between Snowflake and Fabric’s OneLake. Get the details and more in Arun Ulag’s blog: Fuel your business with continuous insights and generative AI. And for an overview of Fabric data security, download the Microsoft Fabric security whitepaper.

Spend a day in the life of a piece of data and learn exactly how it moves from its database home to do more than ever before with the insights of Microsoft Fabric, real-time assistance by Microsoft Copilot, and the innovative power of Azure AI.

Build on a foundation of safe and responsible AI

What began with our principles and a firm belief that AI must be used responsibly and safely has become an integral part of the tooling, APIs, and software you use to scale AI responsibly. Within Azure AI, we have 20 Responsible AI tools with more than 90 features. And there’s more to come, starting with updates at Build.

New Azure AI Content Safety capabilities

We’re equipping you with advanced guardrails that help protect AI applications and users from harmful content and security risks. This week, we’re announcing a new feature for Azure AI Content Safety: Custom Categories are coming soon so you can create custom filters for specific content filtering needs. This feature also includes a rapid option, enabling you to deploy new custom filters within an hour to protect against emerging threats and incidents.

Prompt Shields and Groundedness Detection, both available in preview now in Azure OpenAI Service and Azure AI Studio, help fortify AI safety. Prompt Shields mitigate both indirect and jailbreak prompt injection attacks on LLMs, while Groundedness Detection enables detection of ungrounded materials or hallucinations in generated responses.

Features to help secure and govern your apps and data

Microsoft Defender for Cloud now extends its cloud-native application protection to AI applications from code to cloud. And AI security posture management capabilities enable security teams to discover their AI services and tools, identify vulnerabilities, and proactively remediate risks. Threat protection for AI workloads in Defender for Cloud leverages a native integration with Azure AI Content Safety to enable security teams to monitor their Azure OpenAI applications for direct and indirect prompt injection attacks, sensitive data leaks, and other threats so they can quickly investigate and respond.

With easy-to-use APIs, app developers can integrate Microsoft Purview into line-of-business apps to get industry-leading data security and compliance for custom-built AI apps. You can empower your app customers and their end users to discover data risks in AI interactions, protect sensitive data with encryption, and govern AI activities. These capabilities are available for Copilot Studio in public preview and, starting in July, will be available in public preview for Azure AI Studio and via the Purview SDK, so developers can benefit from the data security and compliance controls for their AI apps built on Azure AI. Read more here.

Two final security notes. First, we’re announcing a partnership with HiddenLayer to scan open models that we onboard to the catalog, so you can verify that the models are free from malicious code and signs of tampering before you deploy them. We are the first major AI development platform to provide this type of verification to help you feel more confident in your model choice.

Second, Facial Liveness, a feature of the Azure AI Vision Face API that has been used by Windows Hello for Business for nearly a decade, is now available in preview for browsers. Facial Liveness is a key element in multi-factor authentication (MFA) to prevent spoofing attacks, for example, when someone holds a picture up to the camera to fool facial recognition systems. Developers can now easily add liveness and optional verification to web applications using Face Liveness, with the Azure AI Vision SDK, in preview.

Our belief in the safe and responsible use of AI is unwavering. You can read our recently published Responsible AI Transparency Report for a detailed look at Microsoft’s approach to developing AI responsibly. We’ll continue to deliver more innovation here and our approach will remain firmly rooted in principles and put into action with built-in features.

Move your ideas from a spark to production with Azure

Organizations are rapidly moving beyond AI ideation and into production. We see and hear fresh examples every day of how our customers are unlocking business challenges that have plagued industries for decades, jump-starting the creative process, making it easier to serve their own customers, or even securing a new competitive edge. We’re curating an industry-leading set of developer tools and AI capabilities to help you, as developers, create and deliver the transformational experiences that make this all possible.

Learn more at Microsoft Build 2024

Join us at Microsoft Build 2024 to experience the keynotes and learn more about how AI could shape your future.

Enhance your AI skills.

Discover innovative AI solutions through the Microsoft commercial marketplace.

Read more about Microsoft Fabric updates: Fuel your business with continuous insights and generative AI.

Read more about Azure Infrastructure: Unleashing innovation: How Microsoft Azure powers AI solutions.

Try Microsoft Azure for free

The post From code to production: New ways Azure helps you build transformational AI experiences appeared first on Azure Blog.

Source: Azure