Microsoft Cost Management updates—June 2024

Whether you’re a new student, a thriving startup, or the largest enterprise, you have financial constraints, and you need to know what you’re spending, where it’s being spent, and how to plan for the future. Nobody wants a surprise when it comes to the bill, and this is where Microsoft Cost Management comes in.

We’re always looking for ways to learn more about your challenges and how Microsoft Cost Management can help you better understand where you’re accruing costs in the cloud, identify and prevent bad spending patterns, and optimize costs to empower you to do more with less. Here are a few of the latest improvements and updates.

FOCUS 1.0 support in exports

Cost card in Azure portal

Kubernetes cost views (new entry point)

Pricing updates on Azure.com

New ways to save money in the Microsoft Cloud

Documentation updates

Before we dig into the details, kudos to the FinOps Foundation for successfully hosting FinOps X 2024 in San Diego, California, last month. Microsoft participated as a platinum sponsor for the second consecutive year. Our team members enjoyed connecting with customers and learning about their FinOps practices. We also shared our vision of simplifying FinOps through AI, demonstrated in this short video: Bring your FinOps practice into the era of AI.

For all our updates from FinOps X 2024, refer to the blog post by my colleague Michael Flanakin, who also serves on the FinOps Technical Advisory Council.

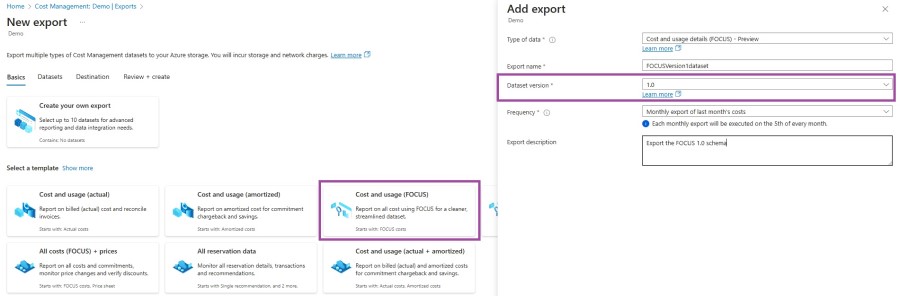

FOCUS 1.0 support in exports

As you may already know, the FinOps Foundation announced the general availability of the FinOps Cost and Usage Specification (FOCUS) version 1.0 in June 2024. We are thrilled to announce that you can now export data in the newly released version through the exports experience in the Microsoft Azure portal or through the REST API. You can review the updated schema and the differences from the previous version in this Microsoft Learn article. You can also continue to export the preview version of the FOCUS dataset.

For all the datasets supported through exports and to learn more about the functionality, refer to our documentation.
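For readers who prefer automation over the portal, the REST API route mentioned above can be sketched roughly as follows. This is a minimal Python sketch, not official sample code: it only builds the URL and JSON body for a PUT request that would create a daily FOCUS export, and it sends nothing. The `api-version` value, the `FocusCost` dataset type, the `dataVersion` setting, and every placeholder (subscription ID, export name, storage account, container) are assumptions for illustration; check them against the Cost Management Exports REST API reference before use.

```python
import json

# Hypothetical placeholders: substitute your own scope, export name, and storage details.
SCOPE = "subscriptions/00000000-0000-0000-0000-000000000000"
EXPORT_NAME = "focus-daily-export"
STORAGE_ACCOUNT_ID = (
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/my-rg"
    "/providers/Microsoft.Storage/storageAccounts/mystorageaccount"
)
# Assumption: a preview API version that accepts FOCUS export definitions.
API_VERSION = "2023-07-01-preview"


def build_focus_export_request(scope, export_name, storage_account_id, container,
                               data_version="1.0"):
    """Return (url, body) for a PUT request that would create a daily FOCUS export.

    Field names and values ("FocusCost", "dataVersion", and so on) are assumptions
    based on the Exports REST API and should be verified against its reference.
    """
    url = (
        f"https://management.azure.com/{scope}/providers/"
        f"Microsoft.CostManagement/exports/{export_name}?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            # Run the export once a day within the given period.
            "schedule": {
                "status": "Active",
                "recurrence": "Daily",
                "recurrencePeriod": {
                    "from": "2024-07-01T00:00:00Z",
                    "to": "2025-06-30T00:00:00Z",
                },
            },
            "format": "Csv",
            # Where the exported files land in your storage account.
            "deliveryInfo": {
                "destination": {
                    "resourceId": storage_account_id,
                    "container": container,
                    "rootFolderPath": "focus",
                }
            },
            # Assumed dataset type and version for FOCUS cost data.
            "definition": {
                "type": "FocusCost",
                "timeframe": "MonthToDate",
                "dataSet": {
                    "granularity": "Daily",
                    "configuration": {"dataVersion": data_version},
                },
            },
        }
    }
    return url, body


if __name__ == "__main__":
    url, body = build_focus_export_request(SCOPE, EXPORT_NAME, STORAGE_ACCOUNT_ID, "exports")
    print("PUT", url)
    print(json.dumps(body, indent=2))
```

To actually create the export, you would send this body with an authenticated HTTP PUT (for example, using a bearer token obtained from `az account get-access-token`). Keeping request construction separate from sending makes it easy to review the payload before anything touches your billing scope.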

Cost card in Azure portal

You have always had the ability to estimate costs for Azure services using the pricing calculator so that you can better plan your expenses. Now, we are excited to announce a cost estimation capability within the Azure portal itself. Engineers can now quickly get a breakdown of the estimated costs of their virtual machines (VMs) before deploying them and adjust as needed. This new experience is currently available only for VMs on pay-as-you-go subscriptions and will be expanded in the future. Giving engineers cost data without disrupting their workflow helps them make the right spending decisions and drives accountability.

Kubernetes cost views (new entry point)

I covered the Azure Kubernetes Service (AKS) cost views in our November 2023 blog post. We know how important it is for you to get visibility into the granular costs of running your clusters. To make these cost views even easier to access, we have added an entry point on the cluster page itself. Engineers and admins who are already on the cluster page, whether making configuration changes or just monitoring the cluster, can now quickly check its costs as well.

Pricing updates on Azure.com

We’ve been working hard to make some changes to our Azure pricing experiences, and we’re excited to share them with you. These changes will help make it easier for you to estimate the costs of your solutions.

We’ve expanded our global reach with pricing support for new Azure regions, including Spain Central and Mexico Central.

We’ve introduced pricing for several new services that enhance our Azure portfolio, including Trusted Signing, Azure Advanced Container Networking Services, Azure AI Studio, Microsoft Entra External ID, and Azure API Center (now available in the Azure API Management pricing calculator).

The Azure pricing calculator now includes a new example to help you get started with estimating costs for Azure Arc-enabled servers scenarios.

Azure AI has seen significant updates, with pricing support for Basic Video Indexing Analysis in Azure AI Video Indexer, new GPT-4o models and improved fine-tuning models for Azure OpenAI Service, the deprecation of the S2 to S4 volume discount tiers for Azure AI Translator, and the introduction of standard fast transcription and video dubbing, both in preview, for Azure AI Speech.

We’re thrilled to announce new features in both preview and general availability: the Flex Consumption plan (preview) for Azure Functions, Advanced Messaging (generally available) for Azure Communication Services, Azure API Center (generally available) for Azure API Management, and AKS Automatic (preview) for Azure Kubernetes Service.

We’ve made comprehensive updates to our pricing models to reflect the latest offerings and ensure you have the most accurate information, including the following changes:

Azure Bastion: Added pricing for premium and developer stock-keeping units (SKUs).

Virtual Machines: Removed CentOS for Linux; added 5-year reserved instance (RI) pricing for the Hx and HBv4 series, as well as pricing for the new NDsr H100 v5 and E20 v4 series.

Databricks: Added pricing for all-purpose serverless compute jobs.

Azure Communication Gateway: Added pricing for the new “Lab” SKU.

Azure Virtual Desktop for Azure Stack HCI: Pricing added to the Azure Virtual Desktop calculator.

Azure Data Factory: Added RI pricing for Dataflow.

Azure Container Apps: Added pricing for the dynamic sessions feature.

Azure Backup: Added pricing for the new comprehensive Blob Storage data protection feature.

Azure SQL Database: Added 3-year RI pricing for the Hyperscale series, zone redundancy pricing for Hyperscale elastic pools, and disaster recovery pricing options for single databases.

Azure Database for PostgreSQL: Added pricing for Premium SSD v2.

Defender for Cloud: Added pricing for the “Pre-Purchase Plan”.

Azure Stack Hub: Added pricing for site recovery.

Azure Monitor: Added pricing for workspace replication and data restore in the pricing calculator.

We’re constantly working to improve our pricing tools and make them more accessible and user-friendly. We hope you find these changes helpful in estimating the costs for your Azure solutions. If you have any feedback or suggestions for future improvements, please let us know!

New ways to save money in the Microsoft Cloud

VM Hibernation is now generally available

Documentation updates

Here are a few documentation updates you might be interested in:

Update: Understand Cost Management data

Update: Azure Hybrid Benefit documentation

Update: Automation for partners

Update: View and download your Microsoft Azure invoice

Update: Tutorial: Create and manage exported data

Update: Automatically renew reservations

Update: Changes to the Azure reservation exchange policy

Update: Migrate from EA Marketplace Store Charge API

Update: Azure product transfer hub

Update: Get started with your Microsoft Partner Agreement billing account

Update: Manage billing across multiple tenants using associated billing tenants

Want to keep an eye on all documentation updates? Check out the Cost Management and Billing documentation change history in the azure-docs repository on GitHub. If you see something missing, select Edit at the top of the document and submit a quick pull request. You can also submit a GitHub issue. We welcome and appreciate all contributions!

What’s next?

These are just a few of the big updates from last month. Don’t forget to check out the previous Microsoft Cost Management updates. We’re always listening and making constant improvements based on your feedback, so please keep the feedback coming.

Follow @MSCostMgmt on X and subscribe to the Microsoft Cost Management YouTube channel for updates, tips, and tricks.

The post Microsoft Cost Management updates—June 2024 appeared first on Azure Blog.

Source: Azure