By Dan Ciruli, Product Manager

The shift from on-premises to cloud computing is rarely sudden and rarely complete. Workloads move over time; in some cases new workloads get built in the cloud and old workloads stay on-premises. In other cases, organizations lift and shift some services and continue to do new development on their own infrastructure. And, of course, many companies have deployments in multiple clouds.

When you run services across a wide array of resources and locations, you need to secure communications between them. Networking may be able to solve some issues, but it can be difficult in many cases: if you’re running containerized workloads on hardware that belongs to three different vendors, good luck setting up a VPN to protect that traffic.

Increasingly, our customers use Google Cloud Endpoints to authenticate and authorize calls to APIs rather than (or even in addition to) trying to secure them through networking. In fact, providing more security for calls across a hybrid environment was one of the original use cases for Cloud Endpoints adopters.

“When migrating our workloads to Google Cloud Platform, we needed to more securely communicate between multiple data centers. Traditional methods like firewalls and ad hoc authentication were unsustainable, quickly leading to a jumbled mess of ACLs. Cloud Endpoints, on the other hand, gives us a standardized authentication system.”

— Laurie Clark-Michalek, Infrastructure Engineer, Qubit

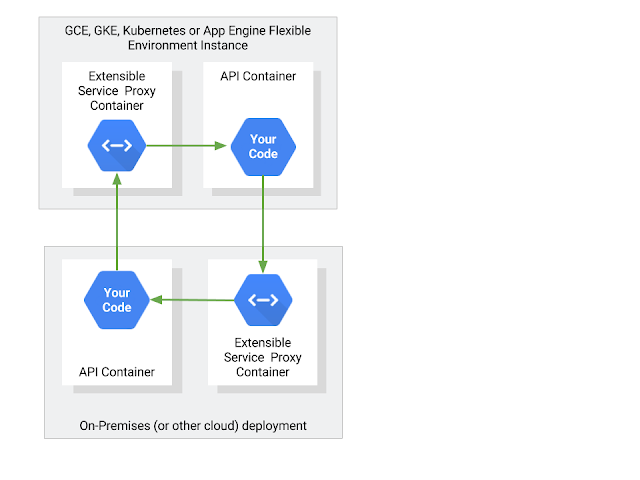

Cloud Endpoints uses the Extensible Service Proxy (ESP), based on NGINX, which can validate a variety of authentication schemes, from JWTs to API keys. We deploy that open source proxy automatically if you use Cloud Endpoints on the App Engine flexible environment, but it is also available via the Google Container Registry for deployment anywhere: on Google Container Engine, on-premises, or even in another cloud.

Protecting APIs with JSON Web Tokens

One of the most common and more secure ways to protect your APIs is to require a JSON Web Token (JWT). Typically, you use a service account to represent each of your services, and each service account has a private key that can be used to sign a JSON Web Token.

If your (calling) service runs on GCP, we manage the key for you automatically; simply invoke the IAM.signJwt method on your JSON Web Token and put the resulting signed JWT in the HTTP Authorization: Bearer header on your call.
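As a minimal sketch of the last step, the snippet below attaches an already-signed JWT to an outgoing request using only the Python standard library. The helper name `build_authorized_request`, the URL, and the token value are hypothetical placeholders; obtaining the signed JWT is assumed to have happened elsewhere.

```python
import urllib.request


def build_authorized_request(url: str, signed_jwt: str) -> urllib.request.Request:
    """Return a request that carries the signed JWT as a bearer token."""
    request = urllib.request.Request(url)
    # The signed JWT goes in the Authorization header, prefixed with "Bearer ".
    request.add_header("Authorization", f"Bearer {signed_jwt}")
    return request


# Hypothetical URL and token, for illustration only.
request = build_authorized_request(
    "https://api.example.com/v1/items", "header.payload.signature"
)
```

Passing the resulting object to `urllib.request.urlopen` would send the call; any HTTP client that lets you set headers works the same way.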

If your (called) service runs on-premises, install ESP as a sidecar that proxies all traffic to your service. Your API configuration tells ESP which service account will be placing the calls. ESP uses the public key for that service account to validate that the JWT was signed properly, and validates several fields in the JWT as well.
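To give a feel for what validating fields in a JWT can involve, here is an illustrative sketch of claim checks on an already-decoded payload. This is not ESP's implementation, and the article does not say which fields ESP inspects; issuer, audience, and expiry are assumed here because they are commonly checked. Signature verification is deliberately left out, and the function name `check_claims` is hypothetical.

```python
import time
from typing import Optional


def check_claims(
    claims: dict,
    expected_issuer: str,
    expected_audience: str,
    now: Optional[float] = None,
) -> list:
    """Return a list of problems found; an empty list means all checks passed.

    Assumes the signature was already verified against the caller's public key.
    """
    current = time.time() if now is None else now
    problems = []
    # The issuer should be the service account allowed to call this API.
    if claims.get("iss") != expected_issuer:
        problems.append("unexpected issuer")
    # The "aud" claim may be a single string or a list of strings.
    audience = claims.get("aud")
    audiences = audience if isinstance(audience, list) else [audience]
    if expected_audience not in audiences:
        problems.append("unexpected audience")
    # A missing or past expiry time is treated as expired.
    if claims.get("exp", 0) <= current:
        problems.append("token expired")
    return problems
```

With ESP in front of your service, you do not write these checks yourself; the sketch only shows the kind of work the proxy takes off your hands.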

If the service is on-premises and calling to the cloud, you still need to sign your JWT, but it’s your responsibility to manage the private key. In that case, download the private key from Cloud Console (following best practices to help securely store it) and sign your JWTs.
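The sketch below shows roughly how a JWT could be assembled before signing, using only the Python standard library. The claim set (`iss`, `sub`, `aud`, `iat`, `exp`) and the `RS256` algorithm are assumptions based on common service-account usage, so confirm them against the service-to-service authentication documentation. The `sign` callable is a hypothetical hook: in practice it would wrap a cryptography library that signs with your downloaded private key.

```python
import base64
import json
import time
from typing import Callable, Optional


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the compact JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def build_jwt(
    service_account_email: str,
    audience: str,
    sign: Callable[[bytes], bytes],
    lifetime_seconds: int = 3600,
    now: Optional[int] = None,
) -> str:
    """Assemble header.payload.signature; `sign` supplies the signature bytes."""
    issued_at = int(time.time()) if now is None else now
    header = {"alg": "RS256", "typ": "JWT"}  # assumed algorithm
    claims = {
        "iss": service_account_email,
        "sub": service_account_email,
        "aud": audience,
        "iat": issued_at,
        "exp": issued_at + lifetime_seconds,
    }
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = sign(signing_input.encode("ascii"))
    return f"{signing_input}.{b64url(signature)}"


# Stand-in signer for illustration only; it does NOT produce a valid signature.
def fake_sign(data: bytes) -> bytes:
    return b"not-a-real-signature"


token = build_jwt(
    "my-service@my-project.iam.gserviceaccount.com",  # hypothetical account
    "https://api.example.com",  # hypothetical audience
    fake_sign,
    now=1_700_000_000,
)
```

Keeping the signer as a parameter means the private key never has to appear in this code, which fits the advice above about storing it securely.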

For more details, check out the sample code and documentation on service-to-service authentication (or this, if you’re using gRPC).

Securing APIs with API keys

Strictly speaking, API keys are not authentication tokens. They’re longer-lived and more dangerous if stolen. However, they do provide a quick and easy way to protect an API: you add the key to each call, either in a header or as a query parameter.

API keys also allow an API’s consumers to generate their own credentials. If you’ve ever called a Google API that doesn’t involve personal data, for example the Google Maps JavaScript API, you’ve used an API key.

To restrict access to an API with an API key, follow these directions. After that, you’ll need to generate a key. You can generate the key in that same project (following these directions). Or you can share your project with another developer. Then, in the project that will call your API, that developer can create an API key and enable the API. Add the key to the API calls as a query parameter (just add ?key=${ENDPOINTS_KEY} to your request) or in the x-api-key header (see the documentation for details).
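Both ways of sending the key can be sketched with the Python standard library. The `key` query parameter and the `x-api-key` header come from the text above; the helper names, URL, and key value are hypothetical.

```python
import urllib.parse
import urllib.request


def with_key_in_query(url: str, api_key: str) -> urllib.request.Request:
    """Append key=<api_key> to the URL, preserving any existing query string."""
    parts = urllib.parse.urlsplit(url)
    query = urllib.parse.parse_qsl(parts.query, keep_blank_values=True)
    query.append(("key", api_key))
    new_url = urllib.parse.urlunsplit(
        parts._replace(query=urllib.parse.urlencode(query))
    )
    return urllib.request.Request(new_url)


def with_key_in_header(url: str, api_key: str) -> urllib.request.Request:
    """Send the key in the x-api-key header instead of the URL."""
    request = urllib.request.Request(url)
    # urllib stores the name as "X-api-key"; HTTP header names are
    # case-insensitive, so the server treats it the same as x-api-key.
    request.add_header("x-api-key", api_key)
    return request


# Hypothetical URL and key, for illustration only.
query_request = with_key_in_query("https://api.example.com/v1/items?page=2", "abc123")
header_request = with_key_in_header("https://api.example.com/v1/items", "abc123")
```

The header form keeps the key out of URLs, which tend to end up in logs and browser history, so it is often the safer of the two.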

Wrapping up

Securing APIs is good practice no matter where they run. At Google, we use authentication for inter-service communication, even when both services run entirely on our production network. But if you live in a hybrid cloud world, authenticating each and every call is even more important.

To get started with Cloud Endpoints, take a look at our tutorials. It’s a great way to build scalable and more secure applications that can span a variety of cloud and on-premises environments.

Source: Google Cloud Platform
