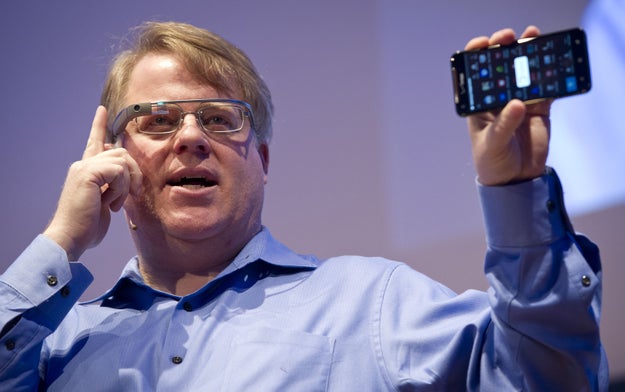

Photographed for BuzzFeed News

KASHGAR, China — This is a city where growing a beard can get you reported to the police. So can inviting too many people to your wedding, or naming your child Muhammad or Medina.

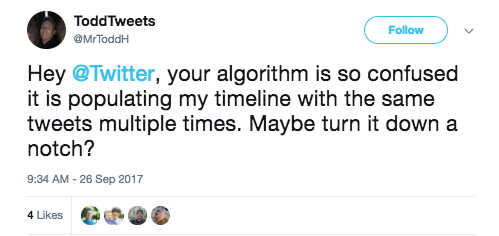

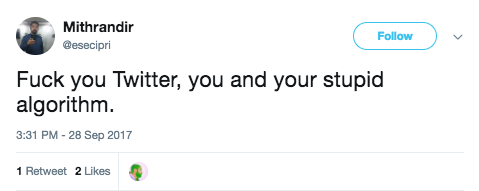

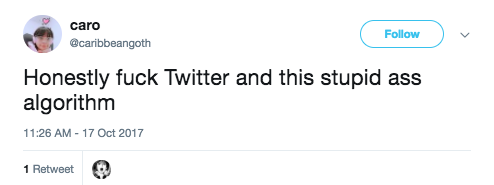

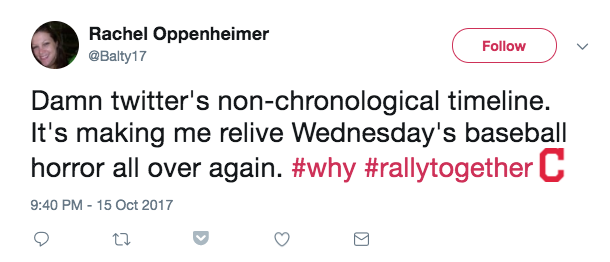

Driving or taking a bus to a neighboring town, you’d hit checkpoints where armed police officers might search your phone for banned apps like Facebook or Twitter, and scroll through your text messages to see if you had used any religious language.

You would be particularly worried about making phone calls to friends and family abroad. Hours later, you might find police officers knocking at your door and asking questions that make you suspect they were listening in the whole time.

For millions of people in China’s remote far west, this dystopian future is already here. China, which has already deployed the world’s most sophisticated internet censorship system, is building a surveillance state in Xinjiang, a four-hour flight from Beijing, that uses both the newest technology and human policing to keep tabs on every aspect of citizens’ daily lives. The region is home to a Muslim ethnic minority called the Uighurs, whom China has blamed for forming separatist groups and fueling terrorism. Since this spring, thousands of Uighurs and other ethnic minorities have disappeared into so-called political education centers, apparently for offenses from using western social media apps to studying abroad in Muslim countries, according to relatives of those detained.

Over the past two months, I interviewed more than two dozen Uighurs, including recent exiles and those who are still in Xinjiang, about what it’s like to live there. The majority declined to be named because they were afraid that police would detain or arrest their families if their names appeared in the press.

Taken along with government and corporate records, their accounts paint a picture of a regime that at once recalls the paranoia of the Mao era and is also thoroughly modern, marrying heavy-handed human policing of any behavior outside the norm with high-tech tools like iris recognition and apps that eavesdrop on cell phones.

China’s government says the security measures are necessary in Xinjiang because of the threat of extremist violence by Uighur militants — the region has seen periodic bouts of unrest, from riots in 2009 that left almost 200 dead to a series of deadly knife and bomb attacks in 2013 and 2014. The government also says it’s made life for Uighurs better, pointing to the money it’s poured into economic development in the region, as well as programs making it easier for Uighurs to attend university and obtain government jobs. Public security and propaganda authorities in Xinjiang did not respond to requests for comment. China’s foreign ministry said it had no knowledge of surveillance measures put in place by the local government.

“I want to stress that people in Xinjiang enjoy a happy and peaceful working and living situation,” said Lu Kang, a spokesperson for China’s foreign ministry, when asked why the surveillance measures are needed. “We have never heard about these measures taken by local authorities.”

But analysts and rights groups say the heavy-handed restrictions punish all of the region’s 9 million Uighurs — who make up a bit under half of the region’s total population — for the actions of a handful of people. The curbs themselves fuel resentment and breed extremism, they say.

The ubiquity of government surveillance in Xinjiang affects the most prosaic aspects of daily life, those interviewed for this story said. D., a stylish young Uighur woman in Turkey, said that even keeping in touch with her grandmother, who lives in a small Xinjiang village, had become impossible.

Whenever D. called her grandmother, police would barge in hours later, demanding the elderly woman phone D. back while they were in the room.

“For god’s sake I’m not going to talk to my 85-year-old grandmother about how to destroy China!” D. said, exasperated, sitting across the table from me in a café around the corner from her office.

After she got engaged, D. invited her extended family, who live in Xinjiang, to her wedding. Because it is now nearly impossible for Uighurs to obtain passports, D. ended up postponing the ceremony for months in hopes the situation would improve.

Finally, in May, she and her mother had a video call with her family on WeChat, the popular Chinese messaging platform. When D. asked how they were, they said everything was fine. Then one of her relatives, afraid of police eavesdropping, held up a handwritten sign that said, “We could not get the passports.”

D. felt her heart sink, but she just nodded and kept talking. As soon as the call ended, she said, she burst into tears.

“Don’t misunderstand me, I don’t support suicide bombers or anyone who attacks innocent people,” she said. “But in that moment, I told my mother I could understand them. I was so pissed off that I could understand how those people could feel that way.”

China’s government has invested billions of renminbi into top-of-the-line surveillance technology for Xinjiang, from facial recognition cameras at petrol stations to surveillance drones that patrol the border.

A surveillance video from SenseTime Group, which works on using artificial intelligence and deep learning in face, body, and behavioral recognition. The video, which circulated widely on social media, could not be independently verified by BuzzFeed News.

China is not alone in this — governments from the United States to Britain have poured funds into security technology and know-how to combat threats from terrorists. But in China, where Communist Party–controlled courts convict 99.9% of the accused and arbitrary detention is a common practice, digital and physical spying on Xinjiang’s populace has resulted in disastrous consequences for Uighurs and other ethnic minorities. Many have been jailed after they advocated for more rights or extolled Uighur culture and history, including the prominent scholar Ilham Tohti.

China has gradually increased restrictions in Xinjiang for the past decade in response to unrest and violent attacks, but the surveillance has been drastically stepped up since the appointment of a new party boss to the region in August 2016. Chen Quanguo, the party secretary, brought “grid-style social management” to Xinjiang, placing police and paramilitary troops every few hundred feet and establishing thousands of “convenience police stations.” The use of political education centers — where thousands have been detained this year without charge — also radically increased after his tenure began. Spending on domestic security in Xinjiang rose 45% in the first half of this year, compared to the same period a year earlier, according to an analysis of Chinese budget figures by researcher Adrian Zenz of the European School of Culture and Theology in Germany. A portion of that money has been poured into dispatching tens of thousands of police officers to patrol the streets.

In an August speech, Meng Jianzhu, China’s top domestic security official, called for the use of a DNA database and “big data” in keeping Xinjiang secure.

It’s a corner of the country that has become a window into the possible dystopian future of surveillance technology, wielded by states like China that have both the capital and the political will to monitor — and repress — minority groups. The situation in Xinjiang could be a harbinger for draconian surveillance measures rolled out in the rest of the country, analysts say.

“It’s an open prison,” said Omer Kanat, director of the Washington-based Uyghur Human Rights Project, an advocacy group that conducts research on life for Uighurs in Xinjiang. “The Cultural Revolution has returned [to the region], and the government doesn’t try to hide anything. It’s all in the open.”

A blacksmith works under watch of a police guard in Kashgar.

Once an oasis town on the ancient Silk Road, Kashgar is the cultural heart of the Uighur community. On a sleepy tree-lined street in the northern part of the city, among noodle shops and bakeries, stands an imposing compound surrounded by high concrete walls topped with loops of barbed wire. The walls are papered with colorful posters bearing slogans like “cherish ethnic unity as you cherish your own eyes” and “love the party, love the country.”

The compound is called the Kashgar Professional Skills Education and Training Center, according to a sign posted outside its gates. When I took a cell phone photo of the sign in September, a police officer ran out of the small station by the gate and demanded I delete it.

“What kind of things do they teach in there?” I asked.

“I’m not clear on that. Just delete your photo,” he replied.

Before this year, the compound was a school. But according to three people with friends and relatives held there, it is now a political education center — one of hundreds of new facilities where Uighurs are held, frequently for months at a time, to study the Chinese language, Chinese laws on Islam and political activity, and all the ways the Chinese government is good to its people.

“People disappear inside that place,” said the owner of a business in the area. “So many people — many of my friends.”

He hadn’t heard from them since, he said, and even their families cannot reach them. Since this spring, thousands of Uighurs and other minorities have been detained in compounds like this one. Though the centers aren’t new, their purpose has been significantly expanded in Xinjiang over the last few months.

Through the gaps in the gates, I could see a yard decorated with a white statue in the Soviet-era socialist realist style, a red banner bearing a slogan, and another small police station. The beige building inside had shades over each of its windows.

A propaganda poster on the walls of a political education compound in Kashgar reads, “Cherish ethnic unity as you cherish your own eyes.”

Chinese state media has acknowledged the existence of the centers, and often boasts of the benefits they confer on the Uighur populace. In an interview with the state-owned Xinjiang Daily, a 34-year-old Uighur farmer, described as an “impressive student,” says he never realized until receiving political education that his behavior and style of dress could be manifestations of “religious extremism.”

Detention for political education of this kind is not considered a form of criminal punishment in China, so no formal charges or sentences are given to people sent there, or to their families. That makes it hard to say exactly what transgressions prompt authorities to send people to the centers. Anecdotal reports suggest that having a relative who has been convicted of a crime, having the wrong content on your cell phone, and appearing too religious could all be causes.

It’s clear, though, that having traveled abroad to a Muslim country, or having a relative who has traveled abroad, puts people at risk of detention. And the ubiquity of digital surveillance makes it nearly impossible to contact relatives abroad, according to the Uighurs I interviewed.

One recent exile reported that his wife, who remained in Xinjiang with their young daughter, asked for a divorce so that police would stop questioning her about his activities.

“It’s too dangerous to call home,” said another Uighur exile in the Turkish capital, Ankara. “I used to call my classmates and relatives. But then the police visited them, and the next time, they said, ‘Please don’t call anymore.’”

R., a Uighur student just out of undergrad, discovered he had a knack for the Russian language in college. He was dying to study abroad. Because of the new rules imposed last year that made it nearly impossible for Uighurs to obtain passports, the family scraped together about 10,000 RMB ($1,500) to bribe an official and get one, R. said.

R. made it to a city in Turkey, where he started learning Turkish and immersed himself in the culture, which has many similarities to Uighur customs and traditions. But he missed his family and the cotton farm they run in southern Xinjiang. Still, he tried to avoid calling home too much so he wouldn’t cause them trouble.

“In the countryside, if you get even one call from abroad, they will know. It’s obvious,” said R., who agreed to meet me in the back of a trusted restaurant only after all the other patrons had gone home for the night. He was so nervous as he spoke that he couldn’t touch the lamb-stuffed pastries on his plate.

In March, R. told me, he found out that his mother had disappeared into a political education center. His father was running the farm alone, and no one in the family could reach her. R. felt desperate.

Two months later, he finally heard from his mother. In a clipped phone call, she told him how grateful she was to the Chinese Communist Party, and how good she felt about the government.

“I know she didn’t want to say it. She would never talk like that,” R. said. “It felt like a police officer was standing next to her.”

Since that call, his parents’ phones have been turned off. He hasn’t heard from them since May.

A Uighur man stares at a police station from a balcony in Kashgar.

Security has become a big business opportunity for hundreds of companies, mostly Chinese, seeking to profit from the demand for surveillance equipment in Xinjiang.

Researchers have found that China is pouring money into its budget for surveillance. Zenz, who has closely watched Xinjiang’s government spending on security personnel and systems, said its investment in information technology transfer, computer services, and software will quintuple this year from 2013. The growth in the security industry there reflects the state-backed surveillance boom, he said.

He noted that a budget line item for creating a “shared information platform” appeared for the first time this year. The government has also hired tens of thousands more security personnel.

Armed police, paramilitary forces, and volunteer brigades stand on every street in Kashgar, stopping pedestrians at random to check their identifications, and sometimes their cell phones, for banned apps like WhatsApp as well as VPNs and messages with religious or political content.

Other equipment, like high-resolution cameras and facial recognition technology, is ubiquitous. In some parts of the region, Uighurs have been made to download an app to their phones that monitors their messages. Called Jingwang, or “web cleansing,” the app works to monitor “illegal religious” content and “harmful information,” according to news reports.

Source: BuzzFeed, “This Is What A 21st-Century Police State Really Looks Like”