The Big Tech Platforms Still Suck During Breaking News

In the aftermath of Sunday evening’s mass shooting in Las Vegas, visitors to Facebook’s Crisis Response page for the tragedy should have found a cascading feed of community-posted news and information intended to “help people be more informed about a crisis.” Instead, they discovered an algorithmic nightmare: a hodgepodge of randomly surfaced, highly suspect articles from spammy link aggregators and sites like The Gateway Pundit, which has a history of publishing false information. Indeed, at one point Monday morning, the top three news articles on Facebook’s Las Vegas Shooting Crisis Response page directed readers to hyperpartisan news or advertising-clogged blog sites trying to profit from the tragedy.

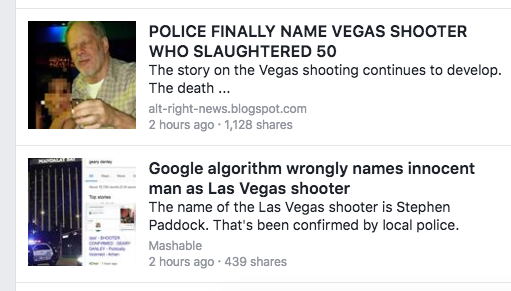

And early Monday morning, Google search queries for “Geary Danley” — a man initially (and falsely) identified as a victim of the shooting — were served Google News links to the notorious message board 4chan, which was openly working to propagate hoaxes that might politicize the tragedy.

Facebook and Google are hardly alone. Twitter — the internet’s beating heart for news — is continually under siege by trolls, automated accounts, and politically motivated fake news peddlers. In the aftermath of Sunday night’s shooting, a number of pro-Trump accounts of unknown origin tried to link the gunman to leftist groups in an apparent attempt to politicize the tragedy and sow divisions. Others hinted at false flags and crisis actors, suggesting that there was a greater conspiracy behind the shooting (Twitter told BuzzFeed News it has since suspended many of these accounts).

And on sites like YouTube, unverified information flowed largely unchecked, with accounts like The End Times News Report impersonating legitimate news sources to circulate conspiracy and rumor.

A few hours later, Facebook and Google issued statements apologizing for promoting such misinformation. “We are working to fix the issue that allowed this to happen in the first place and deeply regret the confusion this caused,” a Facebook spokesperson told CNN. Google issued a similarly pat explanation for featuring a 4chan troll thread as a “Top Story” inside Google search results for Danley: “This should not have appeared for any queries, and we’ll continue to make algorithmic improvements to prevent this from happening in the future.”

But neither apology acknowledged the darker truth: despite the corrections, the damage had already been done, with misinformation unknowingly spread to thousands of people through apparently reckless curation. One link that surfaced on Facebook’s Crisis Response page, from the website alt-right-news.blogspot.com, has been shared across Facebook roughly 1,300 times as of this writing, according to the analytics site BuzzSumo. The article’s third paragraph hints at the shooter’s political leanings but offers no evidence to support that claim. “This sounds more like the kind of target a Left-wing nutjob would choose than a Right-wing nutjob,” it reads, before going on to spread unconfirmed information about other (since dismissed) suspects in the shooting. A link from the hyperpartisan site The Gateway Pundit (the same site that misidentified the shooter earlier in the day) was shared roughly 10,800 times across Facebook, according to BuzzSumo.

This is just the latest example of platforms that have pledged to provide accurate information failing miserably to do so. Despite their endless assurances and apologies and promises to do better, misinformation continues to slip past. When it comes to breaking news, platforms like Facebook and Google tout themselves as willing, competent gatekeepers. But it’s clear they’re simply not up to the task.

Facebook hopes to become a top destination for breaking news, but in pivotal moments it often seems to betray that intention with an ill-conceived product design or a fraught strategic decision. In 2014, it struggled to highlight news about the shooting of Michael Brown and the ensuing Ferguson protests. News coverage of the events went largely unnoticed on the network; instead, News Feeds were jammed with algorithmically pleasing Ice Bucket Challenge videos. And during the 2016 US presidential election, it failed to moderate the fake news, propaganda, and Russian-purchased advertising for which it is now under congressional scrutiny. Meanwhile, it has made no substantive disclosures about the inner workings of its platform.

Google has had its fair share of stumbles around news curation as well, particularly in 2016. Shortly after the US presidential election, Google’s top news results for searches about the final 2016 election results included a fake news site claiming that Donald Trump won both the popular and electoral votes (he did not win the popular vote). Less than a month later, the company came under fire again for surfacing a Holocaust-denying, white supremacist webpage as the top result for the query “The Holocaust.”

This year alone, almost every major social network has made a full-throated commitment to rid its platform of misinformation and polarizing content, as well as those who spread it. Google and Facebook have both pledged to eradicate fake news from their ad platforms, cutting off a key revenue stream for those who peddle misinformation. And Google has told news organizations that it has updated its algorithms to better prioritize “authoritative” content and allow users to flag fake news. YouTube has pledged to cut the reach of accounts “that contain inflammatory religious or supremacist content” and Twitter continues to insist that it is making progress on harassment and trolls on its network.

Big Tech’s breaking news problem is an issue of scale (the networks are so vast that they must be policed largely by algorithm), but it’s also one of priorities. Platforms like Facebook and Google are businesses driven by an insatiable need to add users, keep them engaged, and monetize them. Balancing a business mandate like that with issues of free speech and the protection of civil discourse is no easy matter. Curating news seems an almost prosaic task in comparison. And in many ways, it’s antithetical to the nature of platforms like Facebook or YouTube. News is often painful, unpopular, or unwelcome, and that doesn’t always align well with algorithmic mechanisms designed to give us what we want.

And though their words may suggest an unwavering commitment to delivering reliable breaking news, the platforms’ actions frequently undermine those ambitions. Sometimes the companies make these priorities public, as in June 2016, when Facebook announced that it would tweak its News Feed algorithm to show more stories from friends and family members and fewer from professional news organizations and publishers. But other priorities are expressed through engineering decisions made behind closed doors.

Given the massive scale of platforms like Google and Facebook, it’s impossible to expect them to catch everything. But as the Vegas tragedy proved, many of the platforms’ slip-ups are simple, egregious oversights. Google, for example, claims the 4chan story appeared in its “Top Stories” widget because it was one of the few pages mentioning “Geary Danley” when the news broke and it was seeing a lot of traffic. But why didn’t Google have guardrails in place to prevent this from happening? 4chan has been a deeply unreliable and toxic news portal for years. Why didn’t Google have protocols in place to stop the site from appearing in news results? Why treat 4chan as a news source at all?

The same goes for Facebook. The company can't be expected to stop every single scrap of fake news. But in the case of its Crisis Response pages, why not curate news from verified and vetted outlets to ensure that those looking for answers and loved ones in a crisis aren't led astray? These are seemingly simple questions for which the platforms rarely have good answers.

The platforms always promise to do better. Why can't they?

Lam Vo contributed reporting for this piece.

Source: BuzzFeed