Azure networking updates on security, reliability, and high availability

Enabling the next wave of cloud transformation with Azure Networking

The cloud landscape is evolving at an unprecedented pace, driven by the exponential growth of AI workloads and the need for seamless, secure, and high-performance connectivity. Azure Network services stand at the forefront of this transformation, delivering the hyperscale infrastructure, intelligent services, and resilient architecture that empower organizations to innovate and scale with confidence.


Azure’s global network is purpose-built to meet the demands of modern AI and cloud applications. With over 60 AI regions, 500,000+ miles of fiber, and more than 4 petabits per second (Pbps) of WAN capacity, Azure’s backbone is engineered for massive scale and reliability. The network has tripled its overall capacity since the end of FY24, now reaching 18 Pbps, ensuring that customers can run the most demanding AI and data workloads with uncompromising performance.

In this blog, I am excited to share our advancements in datacenter networking, which provide the core infrastructure for training AI models at massive scale. I will also share our latest product announcements, which strengthen resilience, security, and scale and deliver the capabilities needed to run cloud-native workloads with optimized performance and cost.

AI at the heart of the cloud

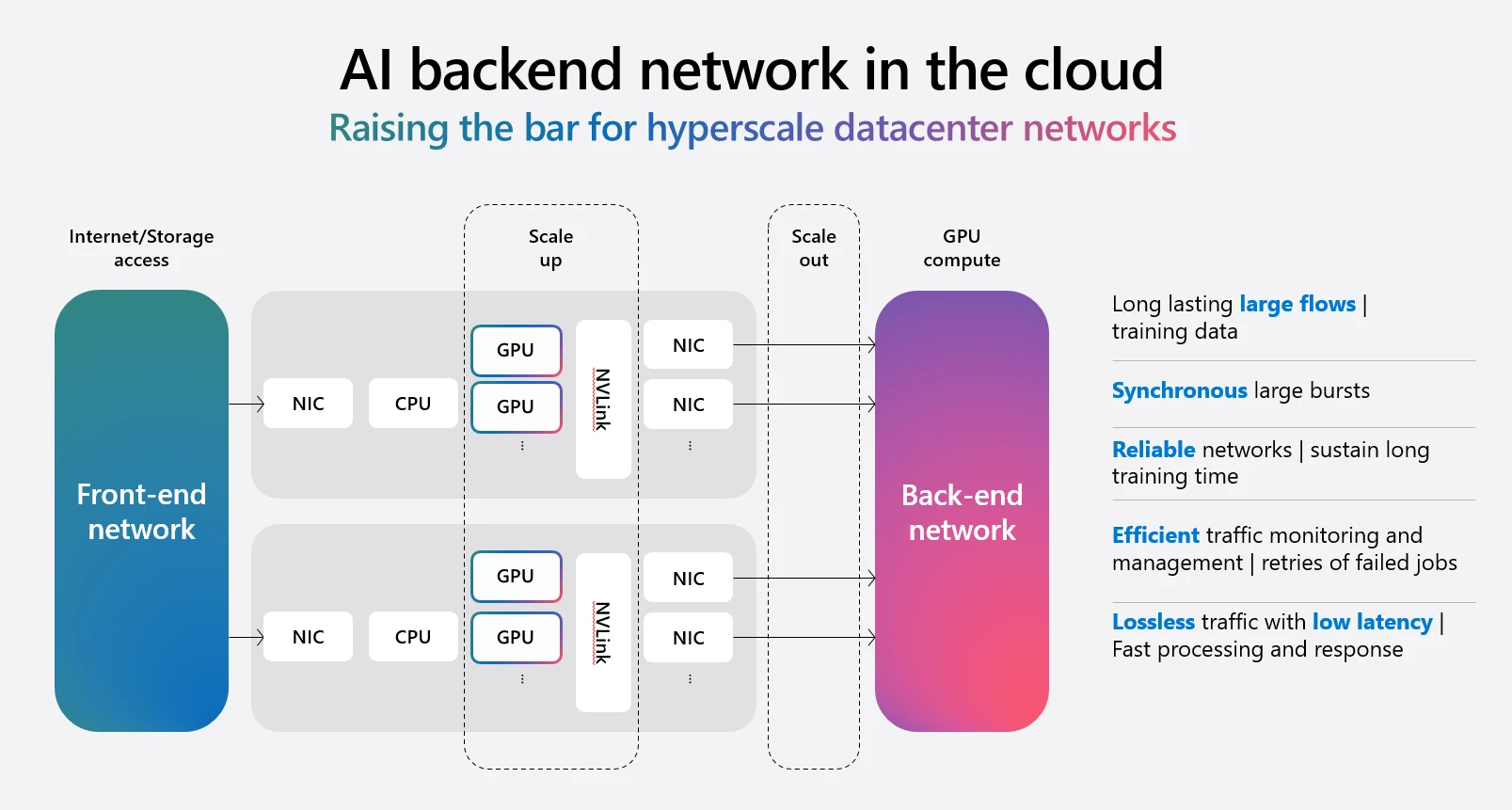

AI is not just a workload; it’s the engine driving the next generation of cloud systems. Azure’s network fabric is optimized for AI at every layer. It supports long-lived, high-bandwidth flows for model training, low-latency intra-datacenter fabrics for GPU clusters, and secure, lossless traffic management. Azure’s architecture integrates InfiniBand and high-speed Ethernet to deliver ultra-fast, lossless data transfer between compute and storage, minimizing training times and maximizing efficiency. The network also uses a dedicated AI WAN to support workloads whose GPU pools are distributed across datacenters and regions. These distributed GPU clusters connect to services running in Azure regions over a dedicated, private connection. That connection uses Azure Private Link and a hardware-based VNet appliance running high-performance DPUs.

Azure Network services are designed to support users at every stage—from migrating on-premises workloads to the cloud, to modernizing applications with advanced services, to building cloud-native and AI-powered solutions. Whether it’s seamless VNet integration, ExpressRoute for private connectivity, or advanced container networking for Kubernetes, Azure provides the tools and services to connect, build, and secure the cloud of tomorrow.

Resilient by default

Resiliency is foundational to Azure Networking’s mission, and we continue to execute on our goal of providing resiliency by default. We already offer zone-resilient SKUs for ExpressRoute gateways, VPN gateways, and Application Gateway, and the latest service to join the list is Azure NAT Gateway. At Ignite 2025, we announced the public preview of Standard NAT Gateway V2, which offers a zone-redundant architecture for outbound connectivity at no additional cost. If a single zone has an outage, a zone-redundant NAT gateway automatically distributes traffic to the remaining available zones. It also supports 100 Gbps of total throughput and can handle 10 million packets per second. It supports IPv6 out of the box and provides traffic insights through flow logs. Read the NAT Gateway blog for more information.

Pushing the boundaries on security

We continue to advance our platform with security as our top priority, adhering to the principles of the Secure Future Initiative. Along these lines, we are happy to announce the following capabilities, now in preview or generally available (GA):

DNS Security Policy with Threat Intel: Now generally available, this feature provides smart protection with continuous updates, monitoring, and blocking of known malicious domains.

Private Link Direct Connect: Now in public preview, this extends Private Link connectivity to any routable private IP address, supporting disconnected VNets and external SaaS providers, with enhanced auditing and compliance support.

JWT Validation in Application Gateway: Now in public preview, Application Gateway supports native JSON Web Token (JWT) validation at Layer 7. This covers web applications, APIs, and service-to-service (S2S) or machine-to-machine (M2M) communication. Moving token validation from backend servers to Application Gateway improves performance and simplifies the backends. Organizations get consistent, centralized, secure-by-default Layer 7 controls, so teams can strengthen security and innovate faster while maintaining a trustworthy security posture.
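The Python sketch below shows what "validating a JWT at Layer 7" involves in general: the checks a validating proxy typically performs before forwarding a request. It verifies the signature first, then checks the issuer, audience, and expiry claims. It is not Application Gateway's implementation or configuration. It uses a shared-secret HS256 key only to stay self-contained; identity providers such as Microsoft Entra ID typically sign tokens with asymmetric keys (RS256). The function names, issuer, and audience values are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url_encode(data: bytes) -> str:
    # JWT segments use base64url without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # Restore the stripped padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_jwt_hs256(claims: dict, key: bytes) -> str:
    """Hypothetical helper: issue an HS256-signed token for testing the validator."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(key, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(sig)}"


def validate_jwt_hs256(token: str, key: bytes, issuer: str, audience: str,
                       now: Optional[float] = None) -> dict:
    """Return the token's claims if every check passes; otherwise raise ValueError."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(b64url_decode(header_b64))
    # Pin the expected algorithm so a token cannot downgrade itself (e.g. to "none").
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(key, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    now = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise ValueError("wrong issuer")
    aud = claims.get("aud")
    if audience not in (aud if isinstance(aud, list) else [aud]):
        raise ValueError("wrong audience")
    if "exp" not in claims or now >= claims["exp"]:
        raise ValueError("token expired or missing exp")
    if "nbf" in claims and now < claims["nbf"]:
        raise ValueError("token not yet valid")
    return claims
```

A gateway that performs these checks can reject bad tokens before they reach the backend. The backend can then trust the forwarded claims instead of repeating the cryptography in every service.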

Forced tunneling for VWAN Secure Hubs: Forced tunneling lets you configure Azure Virtual WAN to inspect Internet-bound traffic with a security solution deployed in the Virtual WAN hub. The inspected traffic is then routed to a designated next hop instead of directly to the Internet. For example, you can route Internet traffic to an edge firewall connected to Virtual WAN, using the default route learned from ExpressRoute, VPN, or SD-WAN. Alternatively, you can route it to your preferred network virtual appliance (NVA) or SASE solution deployed in a spoke virtual network connected to Virtual WAN.

Providing ubiquitous scale

Scale is of utmost importance to customers looking to fine-tune AI models or run low-latency inferencing for AI/ML workloads. Enhanced VPN and ExpressRoute connectivity and scalable private endpoints further strengthen the platform’s reliability and future-readiness.

ExpressRoute 400G: Azure will support 400G ExpressRoute Direct ports in select locations starting in 2026. Customers can combine multiple ports to achieve multi-terabit throughput over dedicated private connections to on-premises or remote GPU sites.

High throughput VPN Gateway: We are announcing the GA of 3x faster VPN Gateway connectivity. It supports a single TCP flow of 5 Gbps and a total throughput of 20 Gbps with four tunnels.

High scale Private Link: We are also increasing the number of private endpoints allowed in a single virtual network to 5,000, and to a total of 20,000 across peered VNets.

Advanced traffic filtering for storage optimization in Azure Network Watcher: Targeted traffic logs help optimize storage costs, accelerate analysis, and simplify configuration and management.
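The throughput figures above imply some simple capacity planning, sketched below in Python. This is back-of-envelope arithmetic on the announced numbers, not an Azure sizing tool. It assumes raw line rate only and ignores redundancy requirements, encapsulation, and protocol overhead. Because a single TCP flow through the VPN gateway tops out at 5 Gbps, reaching the 20 Gbps total needs several concurrent flows. Likewise, "multi-terabit" over ExpressRoute means aggregating several 400G ports. The function names are hypothetical.

```python
# Back-of-envelope capacity math using the figures announced above.
# Simplifying assumption: raw line rate only; no redundancy, encapsulation, or protocol overhead.

VPN_SINGLE_FLOW_GBPS = 5    # max single TCP flow through the VPN gateway
VPN_TOTAL_GBPS = 20         # total throughput with four tunnels
ER_DIRECT_PORT_GBPS = 400   # ExpressRoute Direct port speed (select locations, from 2026)


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def min_parallel_flows(total_gbps: int, per_flow_gbps: int) -> int:
    """Fewest concurrent TCP flows needed to reach total_gbps when each flow is capped."""
    return ceil_div(total_gbps, per_flow_gbps)


def er_ports_needed(target_gbps: int, port_gbps: int = ER_DIRECT_PORT_GBPS) -> int:
    """Fewest ports whose combined line rate is at least target_gbps."""
    return ceil_div(target_gbps, port_gbps)


if __name__ == "__main__":
    print(min_parallel_flows(VPN_TOTAL_GBPS, VPN_SINGLE_FLOW_GBPS))  # 4
    for target_gbps in (1_000, 2_000):  # 1 Tbps and 2 Tbps
        print(target_gbps, er_ports_needed(target_gbps))
```

The sketch uses integer ceiling division so that a target such as 1 Tbps correctly rounds up to three 400G ports rather than truncating to two.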

Enhancing the experience of cloud native applications

Elasticity and the ability to scale seamlessly are essential capabilities that Azure customers deploying containerized apps expect and rely on. Azure Kubernetes Service (AKS) is an ideal platform for deploying and managing containerized applications that require high availability, scalability, and portability. Azure’s Advanced Container Networking Services is natively integrated with AKS. It is offered as a managed networking add-on for workloads that require high-performance networking, essential security, and pod-level observability.

We are happy to announce the product updates below in this space:

eBPF Host Routing in Advanced Container Networking Services for AKS: By embedding routing logic directly into the Linux kernel, this feature reduces latency and increases throughput for containerized applications.

Pod CIDR Expansion in Azure CNI Overlay for AKS: This new capability allows users to expand existing pod CIDR ranges, enhancing scalability and adaptability for large Kubernetes workloads without redeploying clusters.

WAF for Azure Application Gateway for Containers: Now generally available, this brings secure-by-design web application firewall capabilities to AKS, ensuring operational consistency and seamless policy management for containerized workloads.

Azure Bastion for private AKS clusters: Azure Bastion now enables secure, simplified access to private AKS clusters, reducing setup effort, maintaining isolation, and lowering costs for users.
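Pod CIDR expansion is easier to reason about with a concrete check. The Python sketch below uses the standard `ipaddress` module and rests on two assumptions of this sketch, not statements of AKS behavior. First, it assumes a valid expansion must strictly contain the existing pod CIDR; consult the AKS documentation for the actual constraints. Second, it assumes Azure CNI Overlay carves a /24 per node from the pod CIDR, so node capacity is the number of /24 blocks that fit. The helper names are hypothetical.

```python
import ipaddress

# Assumption: Azure CNI Overlay allocates a /24 per node from the pod CIDR (IPv4).
PER_NODE_PREFIX = 24


def added_pod_ips(current_cidr: str, proposed_cidr: str) -> int:
    """Return how many pod IPs the proposed range adds over the current one.

    Raises ValueError unless the proposed range strictly contains the current
    one (the containment rule is an assumption of this sketch).
    """
    current = ipaddress.ip_network(current_cidr)
    proposed = ipaddress.ip_network(proposed_cidr)
    if current.version != proposed.version:
        # subnet_of() would raise TypeError for mixed IPv4/IPv6.
        raise ValueError("address families differ")
    if current == proposed or not current.subnet_of(proposed):
        raise ValueError(f"{proposed} does not strictly contain {current}")
    return proposed.num_addresses - current.num_addresses


def max_nodes(pod_cidr: str, per_node_prefix: int = PER_NODE_PREFIX) -> int:
    """Number of per-node blocks of size /per_node_prefix that fit in pod_cidr."""
    net = ipaddress.ip_network(pod_cidr)
    if per_node_prefix < net.prefixlen:
        raise ValueError("per-node block is larger than the pod CIDR")
    return 2 ** (per_node_prefix - net.prefixlen)


if __name__ == "__main__":
    print(added_pod_ips("10.244.0.0/16", "10.244.0.0/15"))  # 65536
    print(max_nodes("10.244.0.0/16"), max_nodes("10.244.0.0/15"))  # 256 512
```

Under these assumptions, widening 10.244.0.0/16 to 10.244.0.0/15 doubles the node headroom from 256 to 512 without changing any existing pod addresses. That is why containment matters for an in-place expansion.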

These innovations reflect Azure Networking’s commitment to delivering secure, scalable, and future-ready solutions for every stage of your cloud journey. For a full list of updates, visit the official Azure updates page.

Get started with Azure Networking

Azure Networking is more than infrastructure—it’s the catalyst for foundational digital transformation, empowering enterprises to harness the full potential of the cloud and AI. As organizations navigate their cloud journeys, Azure stands ready to connect, secure, and accelerate innovation at every step.


The post Azure networking updates on security, reliability, and high availability appeared first on Microsoft Azure Blog.

Source: Azure