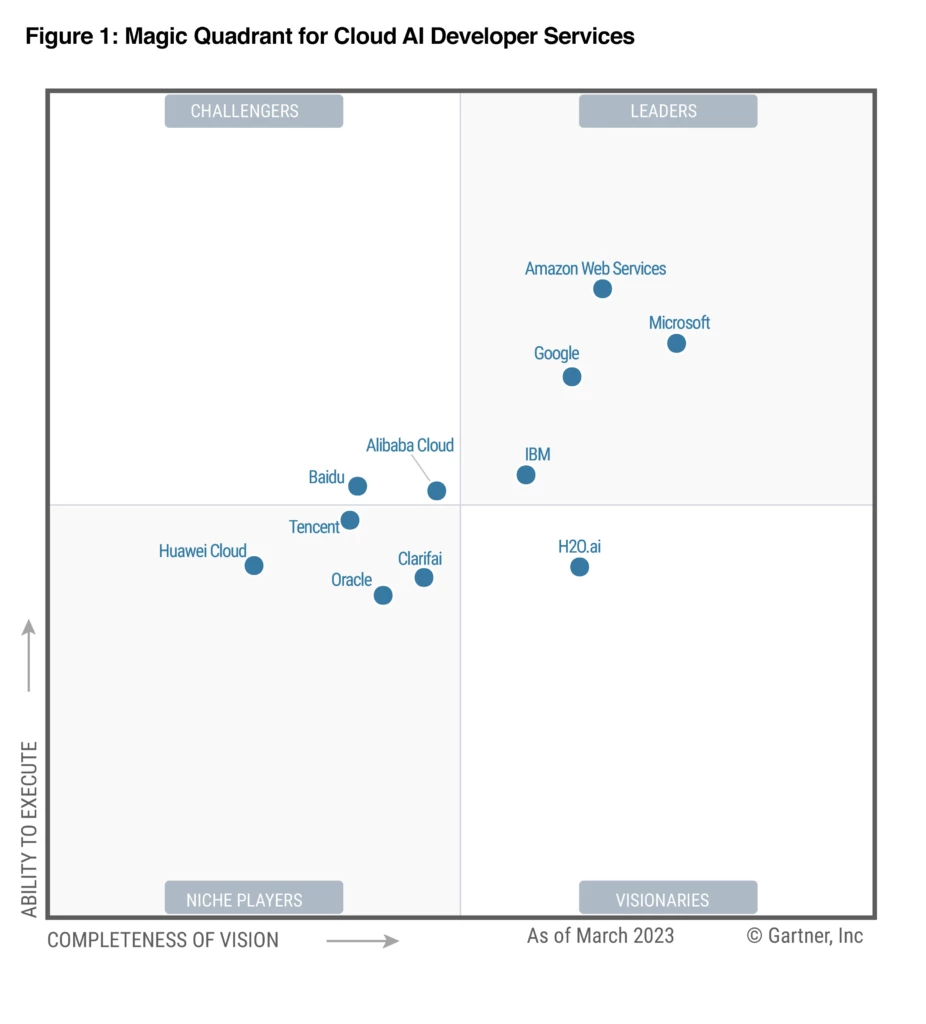

Today’s world is awash with data—ever-streaming from the devices we use, the applications we build, and the interactions we have. Organizations across every industry have harnessed this data to digitally transform and gain competitive advantages. And now, as we enter a new era defined by AI, this data is becoming even more important.

Generative AI and language model services, such as Azure OpenAI Service, are enabling customers to use and create everyday AI experiences that are reinventing how employees spend their time. Powering organization-specific AI experiences requires a constant supply of clean data from a well-managed and highly integrated analytics system. But most organizations’ analytics systems are a labyrinth of specialized and disconnected services.

And it’s no wonder, given a massively fragmented data and AI technology market with hundreds of vendors and thousands of services. Customers must stitch together a complex set of disconnected services from multiple vendors themselves, and they bear the cost and burden of making those services work together.

Introducing Microsoft Fabric

Today we are unveiling Microsoft Fabric—an end-to-end, unified analytics platform that brings together all the data and analytics tools that organizations need. Fabric integrates technologies like Azure Data Factory, Azure Synapse Analytics, and Power BI into a single unified product, empowering data and business professionals alike to unlock the potential of their data and lay the foundation for the era of AI.


What sets Microsoft Fabric apart?

Fabric is an end-to-end analytics product that addresses every aspect of an organization’s analytics needs. But there are five areas that really set Fabric apart from the rest of the market:

1. Fabric is a complete analytics platform

Every analytics project has multiple subsystems. Every subsystem needs a different array of capabilities, often requiring products from multiple vendors. Integrating these products can be a complex, fragile, and expensive endeavor.

With Fabric, customers can use a single product with a unified experience and architecture that provides all the capabilities a developer needs to extract insights from data and present them to business users. And because the experience is delivered as software as a service (SaaS), everything is automatically integrated and optimized, and users can sign up within seconds and get real business value within minutes.

Fabric empowers every team in the analytics process with the role-specific experiences they need, so data engineers, data warehousing professionals, data scientists, data analysts, and business users feel right at home.

Fabric comes with seven core workloads:

Data Factory (preview) provides more than 150 connectors to cloud and on-premises data sources, drag-and-drop experiences for data transformation, and the ability to orchestrate data pipelines.

Synapse Data Engineering (preview) provides a rich authoring experience for Spark, instant start-up with live pools, and built-in collaboration.

Synapse Data Science (preview) provides an end-to-end workflow for data scientists to build sophisticated AI models, collaborate easily, and train, deploy, and manage machine learning models.

Synapse Data Warehousing (preview) provides a converged lakehouse and data warehouse experience with industry-leading SQL performance on open data formats.

Synapse Real-Time Analytics (preview) enables developers to work with data streaming in from Internet of Things (IoT) devices, telemetry, logs, and more, and to analyze massive volumes of semi-structured data with high performance and low latency.

Power BI in Fabric provides industry-leading visualization and AI-driven analytics that enable business analysts and business users to gain insights from data. The Power BI experience is also deeply integrated into Microsoft 365, providing relevant insights where business users already work.

Data Activator (coming soon) provides real-time detection and monitoring of data and can trigger notifications and actions when it finds specified patterns in data—all in a no-code experience.

You can try these experiences today by signing up for the Microsoft Fabric free trial.

2. Fabric is lake-centric and open

Today’s data lakes can be messy and complicated, making it hard for customers to create, integrate, manage, and operate data lakes. And once they are operational, multiple data products using different proprietary data formats on the same data lake can cause significant data duplication and concerns about vendor lock-in.

OneLake—The OneDrive for data

Fabric comes with a SaaS, multi-cloud data lake called OneLake that is built in and automatically available to every Fabric tenant. All Fabric workloads are automatically wired into OneLake, just as all Microsoft 365 applications are wired into OneDrive. Data is organized in an intuitive data hub and automatically indexed for discovery, sharing, governance, and compliance.

OneLake serves developers, business analysts, and business users alike, helping eliminate pervasive and chaotic data silos created by different developers provisioning and configuring their own isolated storage accounts. Instead, OneLake provides a single, unified storage system for all developers, where discovery and sharing of data are easy with policy and security settings enforced centrally. At the API layer, OneLake is built on and fully compatible with Azure Data Lake Storage Gen2 (ADLSg2), instantly tapping into ADLSg2’s vast ecosystem of applications, tools, and developers.
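Because OneLake speaks the ADLS Gen2 API, existing tools can address it with familiar ABFS and HTTPS paths. The sketch below is a minimal illustration that assumes the path layout described in Microsoft's OneLake documentation at the time of writing: the host `onelake.dfs.fabric.microsoft.com`, the workspace in the position an ADLS Gen2 container would occupy, and the Fabric item written as `<item>.<item type>` (for example `Retail.Lakehouse`). Treat the host and layout as assumptions to verify against current documentation. `onelake_abfss_uri` and `onelake_https_url` are hypothetical helpers, not part of any SDK.

```python
from urllib.parse import quote

# Assumed OneLake DFS endpoint (ADLS Gen2-compatible); verify against current Fabric docs.
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_abfss_uri(workspace: str, item: str, item_type: str, path: str) -> str:
    """Build an ABFS-style URI for a file in OneLake (hypothetical helper).

    Mirrors the ADLS Gen2 layout: the workspace takes the container position
    and the Fabric item (e.g. "Retail.Lakehouse") is the top-level folder.
    """
    relative = path.lstrip("/")
    return f"abfss://{workspace}@{ONELAKE_HOST}/{item}.{item_type}/{relative}"


def onelake_https_url(workspace: str, item: str, item_type: str, path: str) -> str:
    """Build the equivalent HTTPS URL, percent-encoding each path segment."""
    segments = [workspace, f"{item}.{item_type}", *path.strip("/").split("/")]
    return f"https://{ONELAKE_HOST}/" + "/".join(quote(s) for s in segments)


if __name__ == "__main__":
    print(onelake_abfss_uri("Sales", "Retail", "Lakehouse", "Files/raw/orders.csv"))
    print(onelake_https_url("Sales", "Retail", "Lakehouse", "Files/raw/orders.csv"))
```

Because the layout matches ADLS Gen2, the HTTPS host should in principle work as the `account_url` of an ADLS Gen2 client such as `DataLakeServiceClient` from the `azure-storage-file-datalake` package, with the workspace name passed to `get_file_system_client`. Authentication is out of scope for this sketch.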

A key capability of OneLake is “Shortcuts,” which allow data to be shared between users and applications without moving or duplicating it unnecessarily. Shortcuts let OneLake virtualize data lake storage in ADLSg2, Amazon Simple Storage Service (Amazon S3), and Google Cloud Storage (coming soon), enabling developers to compose and analyze data across clouds.
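To make the idea of storage virtualization concrete, here is a toy model in Python. It is not the OneLake API: `Shortcut`, `LakeNamespace`, and the example bucket and paths are all hypothetical. It only illustrates the core idea that a shortcut maps a logical path in the lake to an external location, so reads are redirected to where the data already lives instead of the data being copied.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Shortcut:
    """Hypothetical pointer from a lake path to data stored somewhere else."""

    target_uri: str  # illustrative external location, e.g. an s3:// or abfss:// URI


@dataclass
class LakeNamespace:
    """Toy namespace in which shortcuts are resolved instead of copying bytes."""

    shortcuts: dict[str, Shortcut] = field(default_factory=dict)

    def add_shortcut(self, lake_path: str, target_uri: str) -> None:
        # Registering a shortcut stores only a reference; no data is moved.
        self.shortcuts[lake_path.rstrip("/")] = Shortcut(target_uri.rstrip("/"))

    def resolve(self, lake_path: str) -> Optional[str]:
        """Return the external location backing lake_path, or None if stored natively.

        The longest matching shortcut prefix wins, so nested shortcuts behave predictably.
        """
        path = lake_path.rstrip("/")
        best = ""
        for prefix in self.shortcuts:
            matches = path == prefix or path.startswith(prefix + "/")
            if matches and len(prefix) > len(best):
                best = prefix
        if not best:
            return None
        return self.shortcuts[best].target_uri + path[len(best):]


if __name__ == "__main__":
    ns = LakeNamespace()
    ns.add_shortcut("Sales/Retail.Lakehouse/Files/partner", "s3://partner-bucket/exports")
    print(ns.resolve("Sales/Retail.Lakehouse/Files/partner/2023/orders.parquet"))
    print(ns.resolve("Sales/Retail.Lakehouse/Files/local.csv"))
```

The design choice worth noting is that the namespace stores only references: every consumer that reads through the shortcut sees the same single copy of the external data.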

Open data formats across analytics offerings

Fabric is deeply committed to open data formats across all its workloads and tiers. Fabric treats Delta on top of Parquet files as its native data format and the default for all workloads. This commitment to a common open format means that customers need to load data into the lake only once, and every workload can then operate on that same data without ingesting it separately. OneLake also accepts structured data in other formats, as well as unstructured data, giving customers full flexibility.

By adopting OneLake as our store and Delta and Parquet as the common format for all workloads, we offer customers a data stack that’s unified at the most fundamental level. Customers do not need to maintain different copies of data for databases, data lakes, data warehousing, business intelligence, or real-time analytics. Instead, a single copy of the data in OneLake can directly power all the workloads.

Managing data security (table, column, and row levels) across different data engines can be a persistent nightmare for customers. Fabric will provide a universal security model that is managed in OneLake, and all engines enforce it uniformly as they process queries and jobs. This model is coming soon.

3. Fabric is powered by AI

We are infusing Fabric with Azure OpenAI Service at every layer to help customers unlock the full potential of their data, enabling developers to leverage the power of generative AI against their data and assisting business users to find insights in their data. With Copilot in Microsoft Fabric in every data experience, users can use conversational language to create dataflows and data pipelines, generate code and entire functions, build machine learning models, or visualize results. Customers can even create their own conversational language experiences that combine Azure OpenAI Service models and their data and publish them as plug-ins.

Copilot in Microsoft Fabric builds on our existing commitments to data security and privacy in the enterprise. Copilot inherits an organization’s security, compliance, and privacy policies. Microsoft does not use organizations’ tenant data to train the base language models that power Copilot.

Copilot in Microsoft Fabric will be coming soon. Stay tuned to the Microsoft Fabric blog for the latest updates and public release date for Copilot in Microsoft Fabric.

4. Fabric empowers every business user

Customers aspire to drive a data culture where everyone in their organization is making better decisions based on data. To help our customers foster this culture, Fabric deeply integrates with the Microsoft 365 applications people use every day.

Power BI is a core part of Fabric and is already infused across Microsoft 365. Through Power BI’s deep integrations with popular applications such as Excel, Microsoft Teams, PowerPoint, and SharePoint, relevant data from OneLake is easily discoverable and accessible to users right from Microsoft 365, helping customers drive more value from their data.

With Fabric, you can turn your Microsoft 365 apps into hubs for uncovering and applying insights. For example, users in Microsoft Excel can directly discover and analyze data in OneLake and generate a Power BI report with a click of a button. In Teams, users can infuse data into their everyday work with embedded channels, chat, and meeting experiences. Business users can bring data into their presentations by embedding live Power BI reports directly in Microsoft PowerPoint. Power BI is also natively integrated with SharePoint, enabling easy sharing and dissemination of insights. And with Microsoft Graph Data Connect (preview), Microsoft 365 data is natively integrated into OneLake so customers can unlock insights on their customer relationships, business processes, security and compliance, and people productivity.

5. Fabric reduces costs through unified capacities

Today’s analytics systems typically combine products from multiple vendors in a single project. As a result, computing capacity is provisioned separately in multiple systems, such as data integration, data engineering, data warehousing, and business intelligence. When one of these systems is idle, its capacity cannot be used by another system, causing significant waste.

Purchasing and managing resources is massively simplified with Fabric. Customers can purchase a single pool of computing that powers all Fabric workloads. With this all-inclusive approach, customers can create solutions that leverage all workloads freely without any friction in their experience or commerce. The universal compute capacities significantly reduce costs, as any unused compute capacity in one workload can be utilized by any of the workloads.
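The savings argument rests on a simple inequality: a shared pool only has to cover the peak of the combined demand, while separately provisioned systems must each cover their own peak. The sketch below uses made-up hourly demand figures in arbitrary units and ignores real-world factors such as pricing, bursting, and throttling. It illustrates the pooling argument only; it is not a model of how Fabric meters or bills capacity.

```python
# Hypothetical hourly compute demand (arbitrary units) for four workloads
# whose busy periods do not overlap much.
demand = {
    "data_integration": [8, 2, 1, 1],
    "data_engineering": [1, 7, 2, 1],
    "warehousing": [1, 1, 6, 2],
    "bi": [1, 1, 2, 6],
}


def siloed_capacity(demand: dict[str, list[int]]) -> int:
    """Each system is sized for its own peak; idle headroom cannot be shared."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand: dict[str, list[int]]) -> int:
    """One shared pool only needs to cover the peak of the combined demand."""
    combined = [sum(hour) for hour in zip(*demand.values())]
    return max(combined)


if __name__ == "__main__":
    print("siloed:", siloed_capacity(demand))  # sized to 8 + 7 + 6 + 6
    print("pooled:", pooled_capacity(demand))  # sized to the busiest combined hour
```

With these figures, siloed provisioning needs 27 units while a shared pool needs 11. In general the pooled size can never exceed the siloed total, because the peak of a sum is at most the sum of the individual peaks; how large the gap is depends on how much the workloads' busy periods overlap.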

Explore how our customers are already using Microsoft Fabric

Ferguson

Ferguson is a leading distributor of plumbing, HVAC, and waterworks supplies, operating across North America. By using Fabric to consolidate its analytics stack into a unified solution, Ferguson hopes to reduce delivery time and improve efficiency.

“Microsoft Fabric reduces the delivery time by removing the overhead of using multiple disparate services. By consolidating the necessary data provisioning, transformation, modeling, and analysis services into one UI, the time from raw data to business intelligence is significantly reduced. Fabric meaningfully impacts Ferguson’s data storage, engineering, and analytics groups since all these workloads can now be done in the same UI for faster delivery of insights.”

—George Rasco, Principal Database Architect, Ferguson


T-Mobile

T-Mobile, one of the largest providers of wireless communications services in the United States, is focused on driving disruption that creates innovation and better customer experiences in wireless and beyond. With Fabric, T-Mobile hopes they can take their platform and data-driven decision-making to the next level.

“T-Mobile loves our customers and providing them with new Un-Carrier benefits! We think that Fabric’s upcoming abilities will help us eliminate data silos, making it easier for us to unlock new insights into how we show our customers even more love. Querying across the lakehouse and warehouse from a single engine—that’s a game changer. Spark compute on-demand, rather than waiting for clusters to spin up, is a huge improvement for both standard data engineering and advanced analytics. It saves three minutes on every job, and when you’re running thousands of jobs an hour, that really adds up. And being able to easily share datasets across the company is going to eliminate so much data duplication. We’re really looking forward to these new features.”

—Geoffrey Freeman, MTS, Data Solutions and Analytics, T-Mobile

Aon

Aon provides professional and management consulting services to a vast global network of customers. With the help of Fabric, Aon hopes to consolidate more of its current technology stack and focus on adding more value for its clients.

“What’s most exciting to me about Fabric is simplifying our existing analytics stack. Currently, there are so many different PaaS services across the board that when it comes to modernization efforts for many developers, Fabric helps simplify that. We can now spend less time building infrastructure and more time adding value to our business.”

—Boby Azarbod, Data Services Lead, Aon

What happens to current Microsoft analytics solutions?

Existing Microsoft products such as Azure Synapse Analytics, Azure Data Factory, and Azure Data Explorer will continue to provide a robust, enterprise-grade platform as a service (PaaS) solution for data analytics. Fabric represents an evolution of those offerings in the form of a simplified SaaS solution that can connect to existing PaaS offerings. Customers will be able to upgrade from their current products into Fabric at their own pace.

Get started with Microsoft Fabric

Microsoft Fabric is currently in preview. Try out everything Fabric has to offer by signing up for the free trial—no credit card information is required. Everyone who signs up gets a fixed Fabric trial capacity, which may be used for any feature or capability from integrating data to creating machine learning models. Existing Power BI Premium customers can simply turn on Fabric through the Power BI admin portal. After July 1, 2023, Fabric will be enabled for all Power BI tenants.

Microsoft Fabric resources

If you want to learn more about Microsoft Fabric, consider:

Signing up for the Microsoft Fabric free trial.

Visiting the Microsoft Fabric website.

Reading the more in-depth Fabric experience announcement blogs:

Data Factory experience in Fabric blog

Synapse Data Engineering experience in Fabric blog

Synapse Data Science experience in Fabric blog

Synapse Data Warehousing experience in Fabric blog

Synapse Real-Time Analytics experience in Fabric blog

Power BI announcement blog

Data Activator experience in Fabric blog

Administration and governance in Fabric blog

OneLake in Fabric blog

Fabric event streams blog

Microsoft 365 data integration in Fabric blog

Dataverse and Microsoft Fabric integration blog

Exploring the Fabric technical documentation.

Reading the free e-book on getting started with Fabric.

Exploring Fabric through the Guided Tour.

Joining the Fabric community to post your questions, share your feedback, and learn from others.

The post Introducing Microsoft Fabric: Data analytics for the era of AI appeared first on Azure Blog.

Source: Azure