Unlock AI innovation with new joint capabilities from Microsoft and SAP

Microsoft and SAP have been partners and customers of each other for over 30 years, collaborating on innovative business solutions and helping thousands of joint customers accelerate their business transformation. Microsoft Cloud is the market leader for running SAP workloads in the cloud, including RISE with SAP, and today at SAP Sapphire 2024, we are excited to bring even more innovation to our joint customers.

In this blog, we explore the latest developments from the Microsoft and SAP partnership and how they help customers accelerate their business transformation.

Announcing new joint AI integration between Microsoft 365 Copilot and SAP Joule

Today at SAP Sapphire, Microsoft and SAP are expanding our partnership by bringing Joule together with Microsoft Copilot into a unified experience, allowing employees to get more done in the flow of their work through seamless access to information from business applications in SAP as well as Microsoft 365.

Customers want AI assistants to carry out requests regardless of where the data lives or which system needs to be accessed. By integrating Joule and Copilot for Microsoft 365, the two generative AI solutions will work together intuitively, so users can find information faster and execute tasks without leaving the platform they are already in.

A user in Copilot for Microsoft 365 will be able to use SAP Joule to access information stored in SAP, for example in SAP S/4HANA Cloud, SAP SuccessFactors, or SAP Concur. Similarly, someone using SAP Joule in an SAP application will be able to use Copilot for Microsoft 365 capabilities without switching context.

To see this in action, tune in to the “Innovation: The key to bringing out your best” keynote at SAP Sapphire, which will also be available on demand, and read the announcement blog.

Unlock business transformation with Microsoft AI and RISE with SAP

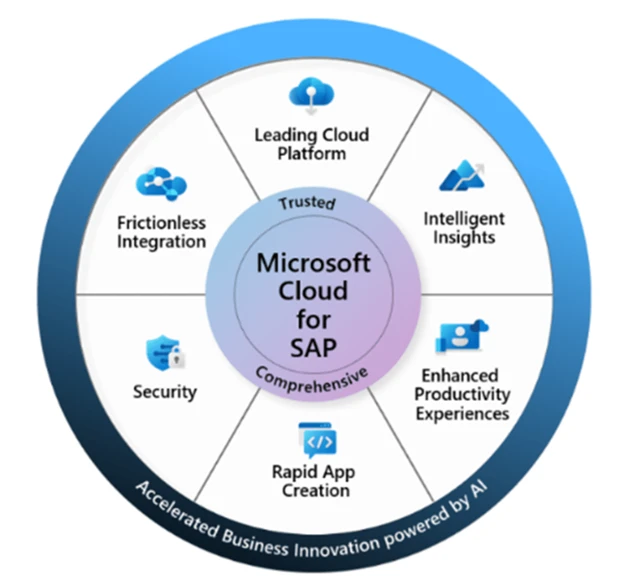

SAP systems host mission-critical data that powers core business processes, and they can benefit greatly from the insights, automation, and efficiencies unlocked by AI. The Microsoft Cloud, the most trusted, comprehensive, and integrated cloud, is best positioned to help you achieve these benefits. You can extend RISE with SAP with a broad set of Microsoft AI services, tailored to your business needs, to maximize business outcomes:

1: Unlocking joint AI innovation with SAP Business Technology Platform (BTP) and Microsoft Azure: SAP BTP is a platform offering from SAP that maximizes the value of the RISE with SAP offering. We recently partnered with SAP to announce the availability of SAP AI Core (an integral part of BTP), including Generative AI Hub, and of Joule on Microsoft Azure in the West Europe, US East, and Sydney regions. Customers that use BTP and want to embed more intelligence into their finance, supply chain, and order-to-cash business processes can now do so easily on Azure. SAP customers can also take advantage of popular large language models such as GPT-4o, which are available in SAP Generative AI Hub only on Azure, through the Azure OpenAI Service.

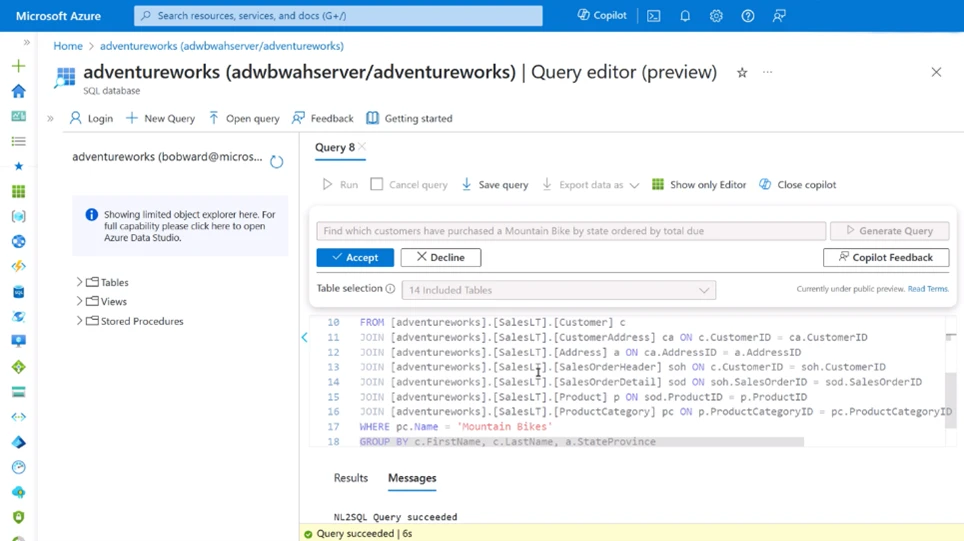

2: Unlocking end-user productivity with Microsoft Copilot: SAP customers can use Copilot for Microsoft 365, available in Microsoft Word, PowerPoint, Excel, and Power BI, so that end users can unlock insights and perform tasks faster. You can go one step further by tailoring Copilot to work the way you need, with your data, processes, and policies, using the SAP plugin in Microsoft Copilot Studio. You can now customize Copilot to connect to your SAP systems and retrieve the information you need, such as expense information or inventory. This is made possible by the new Copilot connectors and agent capabilities that we announced at Microsoft Build last week.

3: Enabling AI transformation using Azure AI services: SAP customers running their workloads on Azure can build their own AI capabilities by combining the Azure OpenAI Service with their SAP data to quickly develop generative AI applications. We offer over 1,600 frontier and open models in Azure AI, including the latest from OpenAI, Meta, and others, giving you the flexibility to choose the model best suited to your use case. More than 50,000 customers use Azure AI today, a sign of the strong momentum in this space.

One powerful productivity use case combines Azure OpenAI with business process automation tools such as Microsoft Power Platform, which offers SAP connectors that help users interact with SAP data easily and complete tasks faster.

For example, a sales assistant can use Azure OpenAI to access SAP data and place product orders directly from Microsoft Teams. Watch this video to see this scenario in action.

RISE with SAP customers can also consume Azure OpenAI services through the SAP Cloud Application Programming Model (CAP) and SAP AI Core on SAP BTP.

4: Automatic attack disruption for SAP, powered by AI: Microsoft Security Copilot empowers all security and IT roles to detect and address cyberthreats at machine speed, including those arising from SAP systems. For customers using Microsoft Sentinel for SAP, which is certified for RISE with SAP, attack disruption will automatically detect financial fraud techniques and disable both the native SAP account and the connected Microsoft Entra account to prevent the cyberattacker from transferring any funds, with no additional intervention. Watch this video and read more about automatic attack disruption for SAP.
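To make the Azure OpenAI scenario in item 3 more concrete, here is a minimal Python sketch of grounding a chat request in SAP data. It is a hypothetical illustration, not an official Microsoft or SAP integration. It assumes the SAP records have already been retrieved (for instance from an S/4HANA OData service); the record field names, the endpoint, the API key, and the deployment name are all placeholders you would supply. It uses the `AzureOpenAI` client from the `openai` Python package and grounds the model by placing the SAP records directly in the system prompt.

```python
import json
from typing import Any


def build_messages(question: str, sap_records: list[dict[str, Any]]) -> list[dict[str, str]]:
    """Ground a chat request by embedding (hypothetical) SAP records in the system prompt."""
    context = json.dumps(sap_records, indent=2)
    return [
        {
            "role": "system",
            "content": (
                "You are an assistant for sales staff. Answer only from the "
                "SAP sales order data below. If the answer is not in the data, "
                "say so.\n\nSAP data:\n" + context
            ),
        },
        {"role": "user", "content": question},
    ]


def ask_azure_openai(messages, endpoint: str, api_key: str, deployment: str,
                     api_version: str = "2024-02-01") -> str:
    """Send the grounded messages to an Azure OpenAI chat deployment (placeholder values)."""
    # Imported lazily so build_messages works without the openai package installed.
    from openai import AzureOpenAI

    client = AzureOpenAI(azure_endpoint=endpoint, api_key=api_key, api_version=api_version)
    response = client.chat.completions.create(model=deployment, messages=messages)
    return response.choices[0].message.content


if __name__ == "__main__":
    # Illustrative record only; field names mirror common S/4HANA sales order fields.
    orders = [
        {"SalesOrder": "1000123", "SoldToParty": "17100001",
         "TotalNetAmount": "5200.00", "TransactionCurrency": "USD"},
    ]
    msgs = build_messages("What is the net amount of order 1000123?", orders)
    print(json.dumps(msgs, indent=2))
    # With real values, you would then call:
    # ask_azure_openai(msgs, "https://<your-resource>.openai.azure.com", "<key>", "<deployment>")
```

Placing records directly in the prompt is the simplest form of grounding and only suits small result sets; larger SAP datasets would typically need a retrieval step that selects the relevant records first.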

More choice and flexibility with expanded availability of SAP BTP on Azure

Together with SAP, we recently announced the expanded availability of SAP BTP to six new Azure regions (Brazil, Canada, India, the United Kingdom, Germany, and one to be announced), as well as additional BTP services in the existing seven public Microsoft Azure regions (Australia, Netherlands, Japan, Singapore, Switzerland, the United States East-VA, and the United States West-WA). Upon completion, SAP Business Technology Platform will run in 13 Microsoft Azure regions, and all the key BTP services that most customers are asking for will be available on Azure.

The expanded service availability, driven by customer demand, makes it easier for customers to unlock AI innovation and business transformation, because they can now integrate SAP Cloud enterprise resource planning (ERP) and SAP BTP services with Microsoft Azure services, including AI services from both companies, within the same datacenter region.

New and powerful infrastructure options for running SAP HANA on Azure

Last year we announced the Azure M-Series Mv3 family, the next generation of memory-optimized virtual machines, giving customers faster insights, more uptime, a lower total cost of ownership, and improved price-performance for running SAP HANA workloads with Azure IaaS deployments and RISE with SAP on Azure. These VMs, supporting up to 32 TB of memory, are powered by 4th Generation Intel® Xeon® Scalable processors and Azure Boost, one of Azure’s latest infrastructure innovations.

Today, we are pleased to build on this investment and share that the Mv3 Very High Memory offering (up to 32 TB of memory) is generally available and the Mv3 High Memory offering (up to 16 TB) is in preview.

Microsoft and SAP Signavio: Teaming up to accelerate transformation

Microsoft and SAP continue to collaborate to help customers in their journey to S/4HANA and RISE with SAP on Azure. SAP Signavio Process Insights is an integral part of SAP’s cloud-based process transformation suite and gives companies the ability to rapidly discover areas for improvement and automation within their SAP business processes. SAP Signavio Process Insights is now available on Microsoft Azure and provides SAP ECC customers an accelerated path to S/4HANA, allowing them to unlock the value of innovation through the Microsoft platform.

Simplifying business collaboration with new integrations in Microsoft Teams

Microsoft and SAP have been working together for several years to help organizations and their employees improve productivity through collaborative experiences that combine mission-critical data from SAP with Microsoft Teams and Microsoft 365.

Today, we are excited to build on this work and announce new joint capabilities with updates to the Microsoft Teams apps for SAP S/4HANA, SAP SuccessFactors, and SAP Concur:

Upcoming features in S/4HANA app for Microsoft Teams

S/4HANA Copilot plugin: Users can access S/4HANA sales details, order status, sales quotes, and more using natural language with Copilot for Microsoft 365. See a sample query below:

Adaptive Card Loop components: Share intelligent cards in Teams and Outlook (available for pre-release users only).

Teams search-based message extension: Users can quickly search for and insert information from S/4HANA without leaving the Teams environment.

SAP Community search: Search SAP Community and share content with co-workers in Microsoft Teams without leaving the app. While chatting with a colleague or a group, click the three dots below the text field, open the SAP S/4HANA app, and enter your search term in the pop-up window. From the results list, pick the topic you want to share and send it directly to your colleagues.

Share to Microsoft Teams as Card: Communicate better with your co-workers in Microsoft Teams by sharing application content as a card that opens a collaborative view in a new window, so you can have a meaningful conversation about it.

Access S/4HANA within Microsoft 365 Home and Outlook too:

New release: SuccessFactors for Microsoft Teams

HR Task Reminders through Teams Chatbot: Receive a private message from the SuccessFactors Teams chatbot, which can help you complete HR tasks directly in Teams or guide you to SuccessFactors online for more complex tasks.

Trigger Quick Actions through Commands: Request and provide feedback to your colleagues, manage time entries, view learning assignments and approvals, access employee and manager self-services, and much more!

Coming soon: New SuccessFactors Dashboard in Teams tab.

Coming soon: Concur Travel and Expense

Users will be able to share travel itineraries and expense reports with colleagues in Microsoft Teams. This app will be released later this summer.

Microsoft and SAP collaborate to modernize identity for SAP customers

Earlier this year, we announced that we are collaborating with SAP to develop a solution that enables customers to migrate their identity management scenarios from SAP Identity Management (IDM) to Microsoft Entra ID. We’re excited to announce that guidance for this migration will be available soon.

Driving joint customer success

It’s exciting to see all the product innovation that will ultimately drive success and business outcomes for customers. Microsoft was one of the early adopters of RISE with SAP internally and is proud to have helped thousands of customers accelerate their business transformation to RISE with SAP with the power of the Microsoft Cloud.

Construction industry supplier Hilti Group migrated its massive SAP landscape to RISE with SAP on Microsoft Azure, accelerating its continuous innovation roadmap. In parallel, it upgraded from a 12-terabyte to a 24-terabyte SAP S/4HANA ERP application and is about to shut down its on-premises datacenter to make Azure its sole platform. Hilti wanted to be one of the first adopters of the RISE with SAP offering, which brings project management, technical migration, and premium engagement services together in a single contract. This accelerated, on-demand business transformation solution was the perfect match to help evolve the company’s massive SAP landscape, which serves as the backbone of its transactional business.

“RISE with SAP on Azure helped us move our experts and resources into areas where they can add the most value, which was a game-changer.”

Dr. Christoph Baeck, Head of IT Platforms, Hilti Group

Tokyo-based steel manufacturer JFE Steel Corporation wanted to move its on-premises SAP systems to a hybrid cloud strategy to pursue better digital experiences. The company chose SAP S/4HANA Cloud, private edition, which provides SAP’s SaaS solution, RISE with SAP, in a private cloud environment, with Microsoft Azure as the foundation. It completed the migration of its SAP system to the cloud in just seven months and is also driving further innovation with Microsoft Power Platform.

“We considered on-premises and various cloud services based on the three axes of quality, cost, and turnaround time. Azure was the first choice because we had confidence in its quality, and we had accumulated know-how in the company. We also actively use Microsoft Power Platform and other products, and we appreciated the high degree of affinity and integration between the products.”

Mr. Etsuo Kasuya, Accounting Group, Business Management System Development Department, Tokyo Office, JFE Systems, Inc.

Australian mining and metals company South32 set a goal of transitioning its data landscape of more than 100 terabytes into a fit-for-purpose ERP system. Working with SAP, Microsoft, and key partner TCS, South32 seamlessly completed phase one of its SAP migration to Azure, consolidating and simplifying its estate and building a scalable, easy-to-manage system environment using SAP S/4HANA with RISE with SAP on Azure.

“Now that we’ve moved our SAP landscape to Azure, we have more breadth of coverage. Our environments are standardized, which provides our infrastructure team with much better tools to manage consumption and give us transparency around costs.”

Stuart Munday, Group Manager, ERP, South32

Learn more

Microsoft and SAP are committed to continuing our partnership to serve our joint customers and enable their growth and transformation as well as unlock innovation for them in the era of AI. There are several ways you can learn more and engage with us:

Visit us at SAP Sapphire: If you are at SAP Sapphire this week in Orlando or next week in Barcelona, visit the Microsoft booth to learn more about these announcements. For the latest product updates from Sapphire, check out our engineering blog.

Learn more on our website: To learn more about why the Microsoft Cloud is the leading cloud platform for SAP workloads, including RISE with SAP, visit our website.

Read more about how customers are unlocking AI innovation and business transformation with SAP and the Microsoft Cloud.

Migration offers and incentives: Beyond the announcements we are making today, we also offer programs and incentives so you can make your migration decisions with confidence. The Azure Migrate and Modernize offering gives you guidance, expert help, and funding to streamline your move to Azure for SAP workloads, including RISE with SAP.

Skilling: Business leaders often tell us that skilling their teams is top of mind so that the organization can better prepare for the cloud journey. We offer several online learning paths as well as instructor-led offerings so you can maximize the value of your migration to the cloud. Learn more.

The post Unlock AI innovation with new joint capabilities from Microsoft and SAP appeared first on Azure Blog.

Source: Azure