AI-powered dialogues: Global telecommunications with Azure OpenAI Service

In an era where digital innovation is king, the integration of Microsoft Azure OpenAI Service is cutting through the static of the telecommunications sector. Industry leaders like Windstream, AudioCodes, AT&T, and Vodafone are leveraging AI to better engage with their customers and streamline their operations. These companies are pioneering the use of AI to not only enhance the quality of customer interactions but also to optimize their internal processes—demonstrating a unified vision for a future where digital and human interactions blend seamlessly.

Leveraging Azure OpenAI Service to enhance communication

Below, we look at four companies that have strategically adopted Azure OpenAI Service to create more dynamic, efficient, and personalized communication methods for customers and employees alike.

1. Windstream’s AI-powered transformation streamlines operational efficiencies: Windstream sought to revolutionize its operations, enhancing workflow efficiency and customer service.

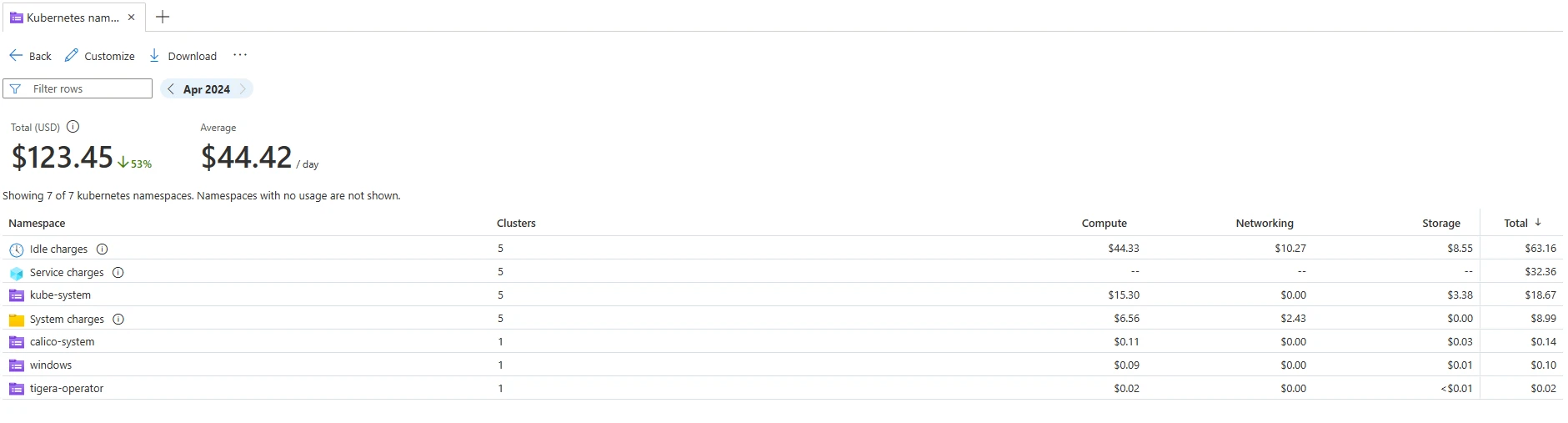

Windstream streamlined workflows and improved service quality by analyzing customer calls and interactions with AI-powered analytics, providing insights into customer sentiments and needs. This approach extends to customer communications, where technical data is transformed into understandable outage notifications, bolstering transparency and customer trust. Internally, Windstream has capitalized on AI for knowledge management, creating a custom-built generative pre-trained transformer (GPT) platform within Microsoft Azure Kubernetes Service (AKS) to index and make accessible a vast repository of documents, which enhances decision-making and operational efficiency across the company. The adoption of AI has facilitated rapid, self-sufficient onboarding processes, and plans are underway to extend AI benefits to field technicians by providing real-time troubleshooting assistance through an AI-enhanced index of technical documents. Windstream’s strategic focus on AI underscores the company’s commitment to innovation, operational excellence, and superior customer service in the telecommunications environment.

2. AT&T automates for efficiency and connectivity with Azure OpenAI Service: AT&T sought to boost productivity, enhance the work environment, and reduce operational costs.

AT&T is leveraging Azure OpenAI Service to automate business processes and enhance both employee and customer experiences, aligning with its core purpose of fostering connections across various aspects of life including work, health, education, and entertainment. This strategic integration of Azure and AI technologies into its operations allows the company to streamline IT tasks and swiftly respond to basic human resources inquiries. In its quest to become the premier broadband provider in the United States and make the internet universally accessible, AT&T is committed to driving operational efficiency and better service through technology. The company is employing Azure OpenAI Service for various applications, including assisting IT professionals in managing resources, facilitating the migration of legacy code to modern frameworks to spur developer productivity, and enabling employees to effortlessly complete routine human resources tasks. These initiatives allow AT&T staff to concentrate on more complex and value-added activities, enhancing the quality of customer service. Jeremy Legg, AT&T’s Chief Technology Officer, highlights the significance of automating common tasks with Azure OpenAI Service, noting the potential for substantial time and cost savings in this innovative operational shift.

3. Vodafone revolutionizes customer service with TOBi and Microsoft Azure AI: Vodafone sought to lower development costs, quickly enter new markets, and improve customer satisfaction with more accurate and personable interactions.

Vodafone, a global telecommunications giant, has embarked on a digital transformation journey, central to which is the development of TOBi, a digital assistant created using Azure services. TOBi, designed to provide swift and engaging customer support, has been customized and expanded to operate in 15 languages across multiple markets. This move not only accelerates Vodafone’s ability to enter new markets but also significantly lowers development costs and improves customer satisfaction by providing more accurate and personable interactions. The assistant’s success is underpinned by Azure Cognitive Services, which enables it to understand and process natural language, making interactions smooth and intuitive. Furthermore, Vodafone’s initiative to leverage the new conversational language understanding feature from Microsoft demonstrates its forward-thinking approach to providing multilingual support, notably in South Africa, where TOBi will soon support Zulu among other languages. This expansion is not just about broadening the linguistic reach but also about fine-tuning TOBi’s conversational abilities to recognize slang and discern between similar requests, thereby personalizing the customer experience.

4. AudioCodes leverages Microsoft Azure for enhanced communication: AudioCodes sought streamlined workflows, improved service level agreements (SLAs), and increased visibility.

AudioCodes, a leader in voice communications solutions for over 30 years, migrated its solutions to Azure for faster deployment, reduced costs, and improved SLAs. The migration supports the company’s ability to serve its extensive customer base, which includes more than half of the Fortune 100 enterprises. The company’s shift towards managed services and the development of applications aimed at enriching customer experiences is epitomized by AudioCodes Live, designed to facilitate the transition to Microsoft Teams Phone. AudioCodes has embraced cloud technologies, leveraging Azure services to streamline telephony workflows and create advanced applications for superior call handling, such as its Microsoft Teams-native contact center solution, Voca. By utilizing Azure AI, Voca offers enterprises robust customer interaction capabilities, including intelligent call routing and customer relationship management (CRM) integration. AudioCodes’ presence on Azure Marketplace has substantially increased its visibility, generating over 11 million usage hours a month from onboarded customers. The company plans to utilize Azure OpenAI Service in the future to bring generative AI capabilities into its solutions.

The AI-enhanced future of global telecommunications

The dawn of a new era in telecommunications is upon us, with industry pioneers like Windstream, AudioCodes, AT&T, and Vodafone leading the charge into a future where AI and Azure services redefine the essence of connectivity. Their collective journey not only highlights a shared commitment to enhancing customer experience and operational efficiency, but also paints a vivid picture of a world where communication transcends traditional boundaries, enabled by the fusion of cloud infrastructure and advanced AI technologies. This visionary approach is laying the groundwork for a paradigm where global communication is more seamless, intuitive, and impactful, demonstrating the unparalleled potential of AI to weave a more interconnected and efficient fabric of global interaction.

Our commitment to responsible AI

With responsible AI tools in Azure, Microsoft is empowering organizations to build the next generation of AI apps safely and responsibly. Microsoft has announced the general availability of Azure AI Content Safety, a state-of-the-art AI system that helps organizations keep AI-generated content safe and create better online experiences for everyone. Customers, from startup to enterprise, are applying the capabilities of Azure AI Content Safety to social media, education, and employee engagement scenarios to help construct AI systems that operationalize fairness, privacy, security, and other responsible AI principles.

Get started with Azure OpenAI Service

Apply for access to Azure OpenAI Service by completing this form.

Learn about Azure OpenAI Service and the latest enhancements.

Get started with GPT-4 in Azure OpenAI Service in Microsoft Learn.

Read our partner announcement blog, empowering partners to develop AI-powered apps and experiences with ChatGPT in Azure OpenAI Service.

Learn how to use the new Chat Completions API (in preview) and model versions for ChatGPT and GPT-4 models in Azure OpenAI Service.

Learn more about Azure AI Content Safety.

The post AI-powered dialogues: Global telecommunications with Azure OpenAI Service appeared first on Azure Blog.

Source: Azure