By Jianing Guo, Product Manager

Containerized workloads have gained in popularity over the past few years for enterprises and startups alike. Containers can significantly improve development speed, lower costs by improving resource utilization, and improve production consistency; however, their unique security implications in comparison to traditional VM-based applications are often not well understood. At Google, we’ve been running container-based production infrastructure for more than a decade and want to share our perspective on how container security compares to traditional applications.

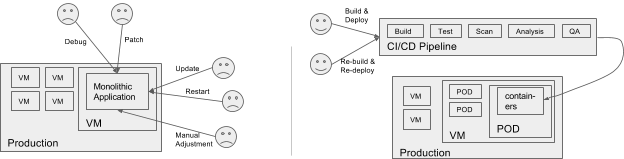

Containerized workloads differ from traditional applications in several major ways. They also provide a number of advantages:

Modularized applications (monolithic applications vs. microservices)

Lower release overhead (convenient packaging format and well defined CI/CD practices)

Shorter lifetimes, with less risk of running outdated packages (months to years vs. days to hours)

Less drift from original state during runtime (less direct access for maintenance, since workload is short-lived and can easily be rebuilt and re-pushed)

Now let’s examine how these differences can affect various aspects of security.

Understanding the container security boundary

The most common misconception about container security is that containers should act as security boundaries just like VMs, and that because they cannot provide such a guarantee, they are a less secure deployment option. However, containers should be viewed as a convenient packaging and delivery mechanism for applications, rather than as mini VMs.

In the same way that traditional applications are not perfectly isolated from one another within a VM, an attacker or rogue program could break out of a running container and gain control of other containers running on the same VM. However, with a properly secured cluster, a container breakout would require an unpatched vulnerability in the kernel, in the common container infrastructure (e.g., Docker), or in other services exposed to the workload from the VM. To help reduce the risk of these attacks, Google Container Engine provides fully managed nodes, actively monitors for vulnerabilities and outdated packages in the VM (including third-party add-ons), and performs auto-update and auto-repair when necessary. This helps minimize the attack window for a container breakout when a new vulnerability is discovered.

A properly secured and updated VM provides process-level isolation that applies to both regular applications and container workloads, and customers can use Linux security modules to further restrict a container’s attack surface. For example, Kubernetes, an open-source, production-grade container orchestration system, supports native integration with AppArmor, seccomp and SELinux to impose restrictions on syscalls that are exposed to containers. Kubernetes also provides additional tooling to further support container isolation. PodSecurityPolicy allows customers to impose restrictions on what a workload can do or access at the node level. For particularly sensitive workloads that require VM-level isolation, customers can use taints and tolerations to help ensure only workloads that trust each other are scheduled on the same VM.

Ultimately, in the case of applications running in both VMs and containers, the VM provides the final security barrier. Just like you wouldn’t run programs with mixed security levels on the same VM, you shouldn’t run pods with mixed security levels on the same node due to the lack of guaranteed security boundaries between pods.
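To make the taint and toleration mechanism mentioned above more concrete, here is a minimal Python sketch of the matching rule. It is a simplified model for illustration, not the Kubernetes scheduler itself: the `Taint` and `Toleration` classes, the `can_schedule` helper, and the `tier=sensitive` key used in the note below are all hypothetical names, and the model covers only the `Equal` and `Exists` operators.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key is only meaningful with "Exists"
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(toleration: Toleration, taint: Taint) -> bool:
    """Return True if this toleration matches this taint (simplified model)."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.key and toleration.key != taint.key:
        return False
    if toleration.operator == "Exists":
        return True
    if not toleration.key:
        # In this sketch, an empty key combined with "Equal" never matches.
        return False
    return toleration.value == taint.value


def can_schedule(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """A pod fits a node only if every hard taint on the node is tolerated."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)
```

With a node tainted `tier=sensitive:NoSchedule`, a pod carrying `Toleration("tier", "Equal", "sensitive", "NoSchedule")` passes `can_schedule`, while a pod with no tolerations does not. Note that a taint only keeps non-tolerating pods off a node; it does not force tolerating pods onto it, so dedicating nodes to sensitive workloads generally also needs a node selector or affinity rule.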

Minimizing outdated packages

One of the most common attack vectors for applications running in a VM is vulnerabilities in outdated packages. In fact, 99.9% of exploited vulnerabilities were compromised more than a year after the CVE was published (Verizon Data Breach Investigations Report, 2015). With monolithic applications, application maintainers often patch OSes and applications manually, and VM-based workloads often run for an extended period of time before they’re refreshed.

In the container world, microservices and well defined CI/CD pipelines make it easier to release more frequently. Workloads are typically short-lived (days or even hours), drastically reducing the attack surface for outdated application packages. Container Engine’s host OS is hardened and updated automatically. Further, for customers who adopt fully managed nodes, the guest OS and system containers are also patched and updated automatically, which helps to further reduce the risk from known vulnerabilities.

In short, containers go hand in hand with CI/CD pipelines that allow for frequent releases, so that containers can be rebuilt with the latest patches as often as possible.
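One way a pipeline might act on this idea is to flag images that have not been rebuilt recently. The sketch below is an illustration under stated assumptions: the `stale_images` helper, the 30-day threshold, and the input format (a mapping from image name to build time) are all hypothetical and would need to be adapted to whatever metadata your registry exposes.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Assumed policy: rebuild anything older than 30 days. Pick a value that
# matches your own release cadence.
MAX_IMAGE_AGE = timedelta(days=30)


def stale_images(
    build_times: Dict[str, datetime],
    now: datetime,
    max_age: timedelta = MAX_IMAGE_AGE,
) -> List[str]:
    """Return the names of images built longer ago than max_age, sorted."""
    return sorted(
        name for name, built in build_times.items() if now - built > max_age
    )
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the check deterministic and easy to test.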

Towards centralized governance

One of the downsides of running traditional applications on VMs is that it’s nearly impossible to understand exactly what software is running in your production environment, let alone control exactly what software is being deployed. This is a result of three primary root causes:

The VM is an opaque application packaging format, and it’s hard to establish a streamlined workflow to examine and catalog its content prior to deployment

VM image management is not standardized or widely adopted, and it’s often hard to track down every image that has ever been deployed to a project

Due to VM workloads’ long lifespans, administrators must frequently manipulate running workloads to update and maintain both the applications and the OS, which can cause significant drift from the application’s original state when it was deployed

And because it’s hard to accurately determine the state of traditional applications at scale, typical security controls approximate it by focusing on anomaly detection in application and OS behaviors and settings.

In contrast, containers provide a more transparent, easy-to-inspect and immutable format for packaging applications, making it easy to establish a workflow to inspect and catalog container content prior to deployment. Containers also come with a standardized image management mechanism (a centralized image repository that keeps track of all versions of a given container). And because containers are typically short-lived and can easily be rebuilt and re-pushed, there’s typically less drift of a running container from its deploy-time state.

These properties help turn container dev and deploy workflows into key security controls. By making sure that only the right containers built by the right process with the right content are deployed, organizations can gain control and knowledge of exactly what’s running in their production environment.

Shared security ownership

In some ways, traditional VM-based applications offer a simpler security model than containerized apps. Their runtime environment is typically created and maintained by a single owner, and IT maintains total control over the code they deploy to production. Infrequent and drawn-out releases also mean that centralized security teams can examine every production push in detail.

Containers, meanwhile, enable agile release practices that allow faster and more frequent pushes to production, leaving less time for centralized security reviews, and shifting the responsibility for security back to developers.

To mitigate the risks introduced by faster development and decentralized security ownership, organizations adopting containers should also adopt the best practices highlighted in the previous section, such as: a private registry to centrally control external dependencies in a production deployment (e.g., open-source base images); image scanning as part of the CI/CD process to identify vulnerabilities and problematic dependencies; and deploy-time controls to help ensure only known good software gets deployed to production.

Overall, an automated and streamlined secure software supply chain that ensures software quality and provenance can provide significant security advantages and can still incorporate periodic manual review.
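The practices listed in this section (a private registry, image scanning, and deploy-time controls) could be combined into a single gate that runs before a deployment. The following Python sketch shows one possible shape for such a check. Everything in it is an assumption made for illustration: the `registry.example.com/` prefix, the `ImageRecord` fields, the severity labels, and the `deploy_violations` helper do not correspond to any specific product or API.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical private registry prefix and blocking severities.
TRUSTED_REGISTRY = "registry.example.com/"
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


@dataclass
class ImageRecord:
    reference: str                 # e.g. "registry.example.com/team/app:1.4"
    scanned: bool                  # True once the CI/CD scan has completed
    finding_severities: List[str] = field(default_factory=list)


def deploy_violations(image: ImageRecord) -> List[str]:
    """Return the reasons this image should not be deployed (empty if none)."""
    violations = []
    if not image.reference.startswith(TRUSTED_REGISTRY):
        violations.append("image is not from the private registry")
    if not image.scanned:
        violations.append("image has no scan result")
    elif BLOCKING_SEVERITIES & set(image.finding_severities):
        violations.append("image has blocking vulnerabilities")
    return violations
```

Returning a list of reasons rather than a single boolean makes it easier to tell developers why a push was rejected, which matters when security ownership is shared with them.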

Summary

While many of the security limitations of VM-based applications hold true for containers (for now), using containers for application packaging and deployment creates opportunities for more accurate and streamlined security controls.

Watch this space for future posts that dig deep on containers, security and effective software development teams.

Visit our webpage to learn more about the Google Cloud Platform (GCP) security model.

Source: Google Cloud Platform
