Announcing Azure Stream Analytics on edge devices (preview)

Today, we are announcing Azure Stream Analytics (ASA) on edge devices, a new feature of Azure Stream Analytics that enables customers to deploy analytical intelligence closer to the IoT devices and unlock the full value of the device-generated data.

Azure Stream Analytics on edge devices extends all the benefits of our unique streaming technology from the cloud down to devices. With ASA on edge devices, we are bringing the power of our Complex Event Processing (CEP) solution to edge devices, making it easy to develop and run real-time analytics on multiple streams of data. One of the key benefits of this feature is its seamless integration with the cloud: users can develop, test, and deploy their analytics from the cloud, using the same SQL-like language for both cloud and edge analytics jobs. As in the cloud, this SQL language supports temporal joins, windowed aggregates, temporal filters, and other common operations such as aggregates, projections, and filters. Users can also integrate custom JavaScript code for advanced scenarios.
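
To make "windowed aggregates" concrete, here is a plain-Python sketch of what a tumbling-window average computes: each event falls into exactly one fixed, non-overlapping time window, and values are averaged per device per window. In an ASA job the same kind of aggregation is written declaratively in the SQL-like query language (with a `TumblingWindow` in the `GROUP BY` clause), not in Python; the `Reading` type, its field names, and the 10-second window below are illustrative assumptions.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Reading(NamedTuple):
    device_id: str
    timestamp: float  # event time, in seconds
    temperature: float


def tumbling_window_avg(
    readings: Iterable[Reading], window_s: float
) -> list[tuple[str, float, float]]:
    """Average temperature per device over fixed, non-overlapping time windows.

    Returns (device_id, window_end, average) tuples sorted by window end, then device.
    """
    # (device_id, window_end) -> [running sum, event count]
    acc: dict[tuple[str, float], list[float]] = defaultdict(lambda: [0.0, 0.0])
    for r in readings:
        # Each event belongs to exactly one window: [start, start + window_s).
        window_start = (r.timestamp // window_s) * window_s
        bucket = acc[(r.device_id, window_start + window_s)]
        bucket[0] += r.temperature
        bucket[1] += 1
    return sorted(
        ((dev, end, total / count) for (dev, end), (total, count) in acc.items()),
        key=lambda row: (row[1], row[0]),
    )


events = [
    Reading("press-1", 0.5, 70.0),
    Reading("press-1", 4.0, 74.0),
    Reading("press-1", 11.0, 80.0),
    Reading("press-2", 3.0, 60.0),
]
for row in tumbling_window_avg(events, window_s=10):
    print(row)
# ('press-1', 10.0, 72.0)
# ('press-2', 10.0, 60.0)
# ('press-1', 20.0, 80.0)
```

Labeling each result with the end of its window is a presentation choice for this sketch; the key property is that no event is counted in more than one window.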

Enabling new scenarios

Azure IoT Hub, a core Azure service that connects, monitors, and updates IoT devices, has enabled customers to connect millions of devices to the cloud, and Azure Stream Analytics has enabled customers to easily deploy and scale analytical intelligence in the cloud to extract actionable insights from device-generated data. However, many IoT scenarios require real-time response, resiliency to intermittent connectivity, handling of large volumes of raw data, or pre-processing of data to ensure regulatory compliance. All of these requirements can now be met by using ASA on edge devices to deploy and operate analytical intelligence physically closer to the devices.

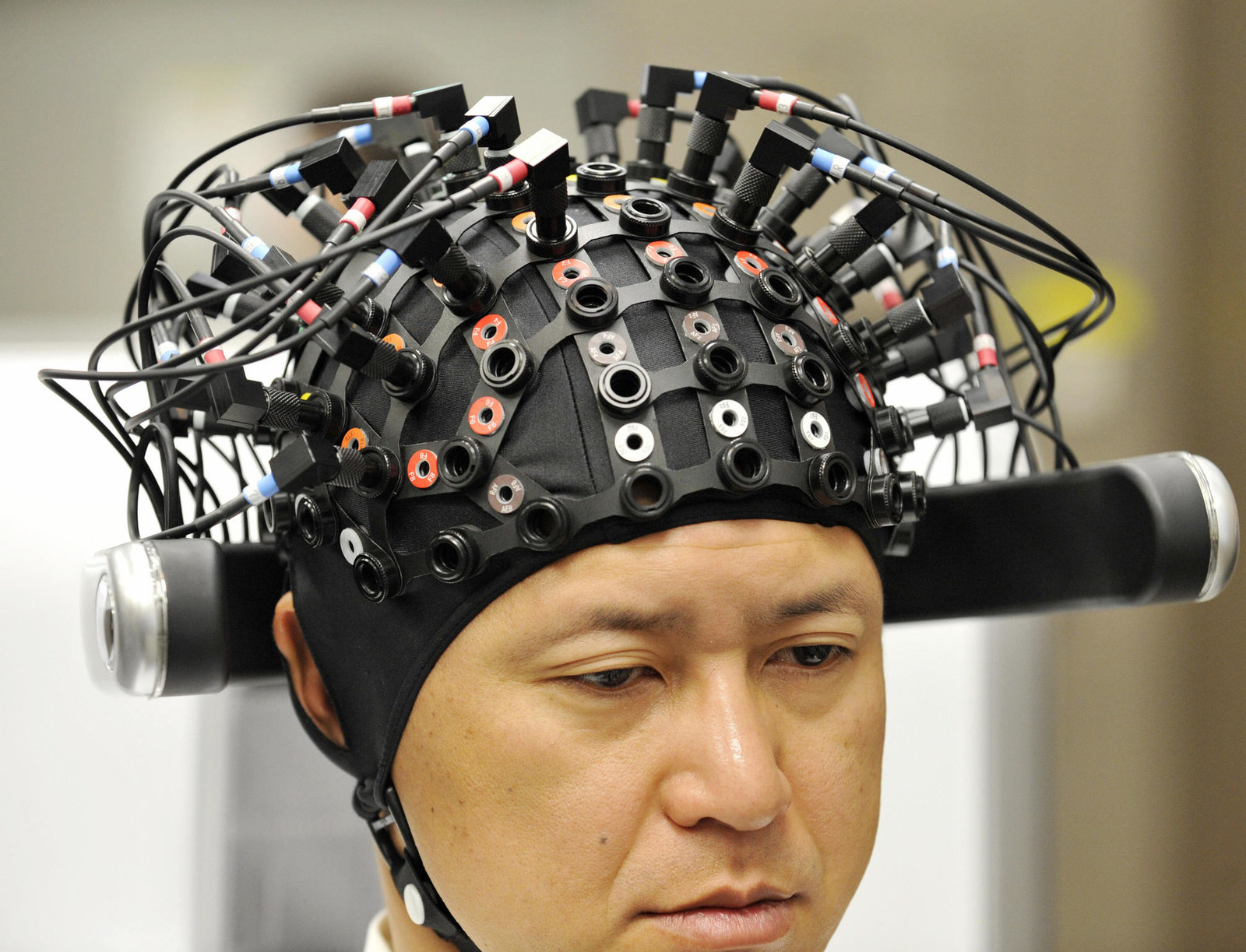

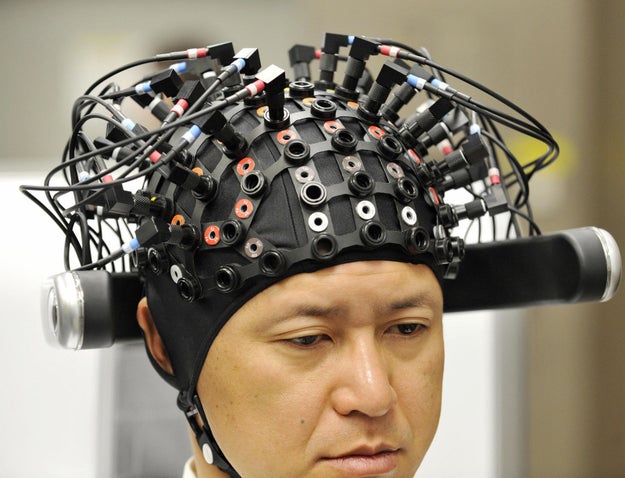

Hewlett Packard Enterprise (HPE) is an early preview partner who has demonstrated a working prototype of ASA on edge devices at Microsoft's booth at Hannover Messe (April 24 to 28, Hall 7, Stand C40). A result of close collaboration between Microsoft, HPE and the OPC Foundation, the prototype is based on Azure Stream Analytics, the HPE Edgeline EL1000 Converged Edge System, and the OPC Unified Architecture (OPC-UA), delivering real-time analysis, condition monitoring, and control. The HPE Edgeline EL1000 Converged Edge System integrates compute, storage, data capture, control and enterprise-class systems and device management built to thrive in hardened environments and handle shock, vibration and extreme temperatures.

ASA on edge devices is particularly interesting for Industrial IoT (IIoT) scenarios that require reacting to operational data with ultra-low latency. Systems such as manufacturing production lines or remote mining equipment need to analyze and react to incoming data streams in real time, for example when anomalies are detected.

In offshore drilling, offshore wind farm, or ship transport scenarios, analytics need to run even when internet connectivity is intermittent. In these cases, ASA on edge devices can run reliably to summarize and monitor events, react to events locally, and use the connection to the cloud once it becomes available.
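
The "react locally, forward when connected" pattern can be sketched as a small store-and-forward buffer. This is a hypothetical helper written for illustration, not how ASA on edge devices is implemented: the `send` callable stands in for whatever uplink is used (for example, a connection to Azure IoT Hub), and dropping the oldest buffered message when the buffer is full is an assumed policy.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Hypothetical helper: buffer messages locally, flush them when the uplink is up."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000) -> None:
        # `send` returns True if the message was delivered, False if the link is down.
        self._send = send
        # Bounded buffer (assumed policy): when full, the oldest message is dropped.
        self._buffer: deque = deque(maxlen=max_buffered)

    @property
    def pending(self) -> int:
        return len(self._buffer)

    def publish(self, message: dict) -> None:
        """Queue a message and try to deliver everything that is pending."""
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Send buffered messages in order, stopping at the first failure.

        Returns the number of messages delivered.
        """
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link is down: keep the message for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent


# Usage: simulate an intermittent link.
link_up = False
delivered = []


def send_to_cloud(message: dict) -> bool:
    if link_up:
        delivered.append(message)
    return link_up


uplink = StoreAndForward(send_to_cloud)
uplink.publish({"window_end": 10.0, "avg_temp": 72.0})
uplink.publish({"window_end": 20.0, "avg_temp": 80.0})
print(uplink.pending)  # 2 (link down, both summaries buffered)
link_up = True
print(uplink.flush())  # 2 (both delivered, in order)
```

Buffering compact summaries rather than raw events keeps the backlog small during long outages, which is why local aggregation and store-and-forward work well together.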

In industrial IoT scenarios, the volume of data can be too large to send directly to the cloud because of limited bandwidth or bandwidth cost. For example, the data produced by jet engines (typically around 1 TB collected during a single flight) or by manufacturing sensors (each of which can produce 1 MB/s to 10 MB/s) may need to be filtered, aggregated, or processed directly on the device before it is sent to the cloud. Examples of such processing include sending events only when values change instead of sending every event, averaging data over a time window, or applying a user-defined function.
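
For a sense of scale, one sensor producing 1 MB/s generates about 86.4 GB per day (1 MB/s × 86,400 s), and about 864 GB per day at 10 MB/s. The first reduction listed above, sending events only when a value changes, can be sketched in a few lines of Python. The `Event` type and field names are illustrative, and the optional `deadband` tolerance, which suppresses changes smaller than a given amount, is an assumption added for this example rather than an ASA feature.

```python
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    sensor_id: str
    timestamp: float
    value: float


def changes_only(events: Iterable[Event], deadband: float = 0.0) -> Iterator[Event]:
    """Yield an event only when its value differs from the last value forwarded
    for the same sensor by more than `deadband`."""
    last_sent: dict[str, float] = {}
    for e in events:
        previous = last_sent.get(e.sensor_id)
        if previous is None or abs(e.value - previous) > deadband:
            last_sent[e.sensor_id] = e.value
            yield e


raw = [
    Event("valve-7", 0.0, 1.0),
    Event("valve-7", 1.0, 1.0),   # unchanged: dropped
    Event("valve-7", 2.0, 1.05),  # change within deadband of 0.1: dropped
    Event("valve-7", 3.0, 2.0),   # changed: forwarded
]
forwarded = list(changes_only(raw, deadband=0.1))
print([e.timestamp for e in forwarded])  # [0.0, 3.0]
```

Comparing against the last *forwarded* value, rather than the previous raw value, means a slow drift is still reported once it accumulates past the deadband.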

Until now, customers with such requirements had to build custom solutions, and manage them separately from their cloud applications. Now, customers can use Azure Stream Analytics to seamlessly develop and operate their stream analytics jobs both on edge devices and in the cloud.

How to use Azure Stream Analytics on edge devices

Azure Stream Analytics on edge devices leverages the Azure IoT Gateway SDK to run on Windows and Linux operating systems, and supports a wide range of hardware, from small single-board computers to full PCs, servers, or dedicated field gateway devices. The IoT Gateway SDK provides connectors for industry-standard communication protocols such as OPC-UA, Modbus, and MQTT, and can be extended to support your own communication needs. Azure IoT Hub provides secure bi-directional communication between gateways and the cloud.
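
The gateway architecture described above, where protocol connectors feed an analytics stage whose results are forwarded to the cloud, can be pictured as modules exchanging messages through a broker. The sketch below is a hypothetical in-process model of that idea for illustration only; it does not use the Azure IoT Gateway SDK's API, the simple threshold check merely stands in for an ASA query, and the topic names and message fields are assumptions.

```python
from collections import defaultdict
from typing import Callable

Message = dict
Handler = Callable[[Message], None]


class Broker:
    """Minimal in-process publish/subscribe bus connecting gateway modules."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        for handler in self._subscribers[topic]:
            handler(message)


def make_threshold_module(broker: Broker, limit: float) -> Handler:
    """Stand-in analytics stage: forward only readings above `limit` as alerts."""
    def handle(msg: Message) -> None:
        if msg["value"] > limit:
            broker.publish("alerts", {**msg, "alert": "over_limit"})
    return handle


uploaded: list = []  # stands in for messages sent to the cloud

broker = Broker()
broker.subscribe("telemetry", make_threshold_module(broker, limit=100.0))
broker.subscribe("alerts", uploaded.append)

# A protocol connector (for example, reading OPC-UA or Modbus) would publish raw readings:
for value in (95.0, 120.0, 99.0):
    broker.publish("telemetry", {"sensor": "pump-3", "value": value})

print(uploaded)  # [{'sensor': 'pump-3', 'value': 120.0, 'alert': 'over_limit'}]
```

Keeping connectors, analytics, and the cloud uploader as separate subscribers means a new protocol connector can be added without changing the analytics stage.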

Azure Stream Analytics on edge devices is available now in private preview. To request access to the private preview, click here.

You can also meet our team at Hannover Messe, the world's biggest industrial fair, which takes place from April 24 to April 28 in Hannover, Germany. We are located at the Microsoft booth in the Advanced Analytics pod (Hall 7, Stand C40).

Source: Azure