Ariel Davis / BuzzFeed News

On July 3, Maggie H. opened up her Twitter mentions and found her face photoshopped into the crosshairs of a gunsight. The image was a screengrab of her Twitter profile page, taken by a user she had blocked. It showed her face directly in the center of a target above a caption that read, “@[username redacted] BTFO by cantbeatkevin kill #390 #noscope” (BTFO is shorthand for “blown the fuck out”).

Harassment on Twitter was nothing new for Maggie, but this latest threat unnerved her. One day before receiving the photoshopped target image, Maggie had argued with a Twitter troll account by the name of @LowIQCrazyMika. After a contentious back-and-forth, @LowIQCrazyMika tweeted that they’d found Maggie’s Facebook account. A subsequent tweet named the small, rural town in which Maggie lives: “Youre [sic] speaking like the child of an insestual relationship from the remote woods of [town name redacted].”

Maggie accused the account of stalking her and filed an abuse report to Twitter. Shortly after that, she received the photoshop of her inside the target from a different user. The tweet was retweeted by @LowIQCrazyMika. Maggie filed an abuse report for this tweet as well.

Four days after filing the first report, Maggie received a form email from Twitter. It said @LowIQCrazyMika had not violated Twitter’s rules by alluding to her location.


“I'm scared and there's no accountability,” Maggie told BuzzFeed News. “I even sent Twitter support a copy of my license with an explanation that tweeting that I live in a small town is akin to giving away my exact location, and they're not doing anything.” On July 7, BuzzFeed News contacted Twitter about Maggie’s harassment reports. Twitter declined to comment, citing its policy of not commenting on individual accounts. But soon after, the tweet with Maggie’s face inside a gun target disappeared, the account that broadcast it was suspended, and Maggie received an email from Twitter noting the company had taken action.

Though the suspension ultimately granted Maggie some peace of mind, her process of getting justice is one of many examples that show a frustrating pattern for victims — one in which Twitter is slow or unresponsive to harassment reports until they’re picked up by the media.

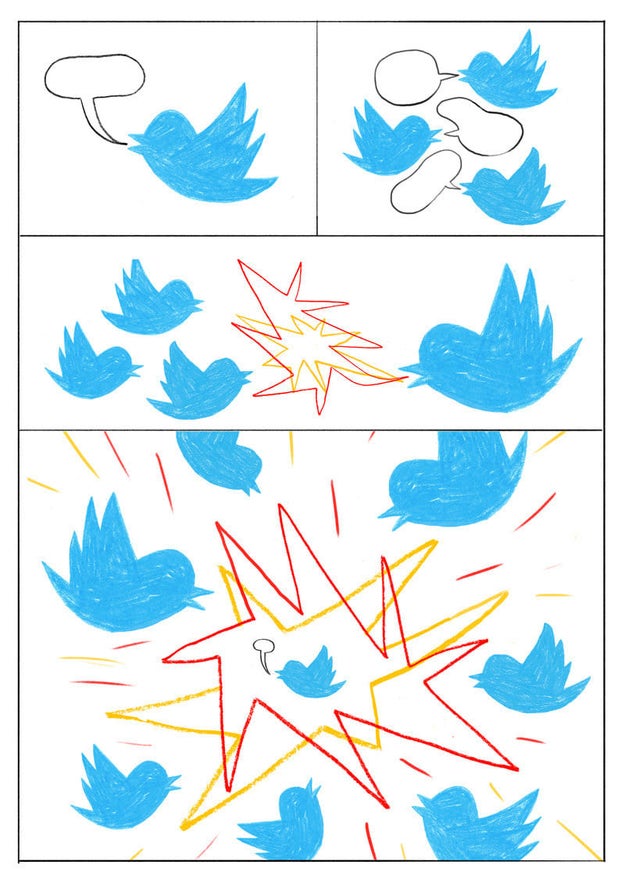

After a decade-long failure to effectively address harassment on its platform, Twitter has finally begun making efforts to curb its abuse problem. Last November it rolled out a keyword filter and a mute tool for conversation threads, as well as a “hateful conduct” report option. In February, the company made changes to its timeline and search designed to hide “potentially abusive or low-quality” tweets, and added a policy update intended to crack down on abusive accounts from repeat offenders. Just last week, Twitter rolled out a few more muting tools for users, including the ability to mute new (formerly known as egg) accounts, as well as accounts that don't follow you.

And yet targeted harassment of the sort Maggie experienced continues. That may be because Twitter’s recent abuse prevention controls are a largely cosmetic solution to a systemic problem. And Twitter’s inconsistent enforcement of harassment reports suggests that perhaps the company’s algorithmic moderation systems simply aren’t as effective as the company would like to think. It’s in these situations that Maggie, and others, have looked for a third party to intervene.

“Twitter isn't taking this problem seriously at all,” Maggie told BuzzFeed News after her troll’s account was suspended. “Can you please help me hold them accountable?” she wrote.

There is no shortage of examples of this pattern. In late June, a BuzzFeed engineer stumbled across a tweet that read “if BuzzFeed headquarters was destroyed in an explosion and every one that worked there was all of a sudden dead, that’d be a good thing.” Five days after reporting the tweet, the engineer received a form email from Twitter stating that the tweet didn’t violate the company’s terms of service. When a BuzzFeed News reporter asked Twitter why this was the case, the tweet was flagged as a violation and removed. Twitter did not explain why the tweet was initially dismissed as not in violation of Twitter’s terms of service.

Twitter has long been criticized for being slow to respond to incidents of abuse on its platform unless they go viral or are flagged by reporters or celebrities. In August of 2016, Twitter told software engineer Kelly Ellis that a string of 70 tweets calling her a “psychotic man hating ‘feminist’” and wishing that she’d be raped did not violate company rules forbidding “targeted abuse or harassment of others.” Shortly after BuzzFeed News published a report on the tweets, however, they were taken down. There were also multiple other instances of tweets being removed only after reports from BuzzFeed News and other outlets.

Given Twitter’s size and the volume of tweets it broadcasts — estimates are old but one report from an app developer in early 2016 clocked over 303 million tweets per day — the social network could never review each and every suspicious tweet with a human eye. But Twitter’s history of opaque protocols for addressing abuse casts its inaction in a different light. In September, a BuzzFeed News survey of over 2,700 users found that 90% of respondents said that Twitter didn’t do anything when they reported abuse. As one victim of serial harassment told BuzzFeed News following the survey, “It only adds to the humiliation when you pour your heart out and you get an automated message saying, ‘We don’t consider this offensive enough.’”

But even with a sharper focus on abuse in 2017, a concerning number of reports of clear-cut harassment still seem to slip through the cracks and return a form email telling the victims that their case did not rise to a level Twitter considers to be a violation of its terms of service. In a cursory search over the last seven months, BuzzFeed News turned up 27 examples of clear rules violations — including the unauthorized publishing of personal information (such as addresses and screenshots of apartment buildings) of journalists, threats of physical violence, and extensive, targeted harassment — that were met with a “did not violate” response from the social network. Similarly, during an open call on Twitter for examples of clear harassment that were dismissed by the company, this writer received 89 direct messages from users alleging that they received at least one improper dismissal of their harassment claim. In more than half of these cases, the users provided BuzzFeed News with a screenshot of the form email they received from the company.

In a number of examples provided to BuzzFeed News, the harassment that was dismissed by Twitter was hardly subtle. One female sportswriter provided seven dismissed harassment reports from Twitter. One account she reported has only posted 78 tweets — all directed at the sportswriter in order to troll her. Another of the accounts she reported uses her likeness in its profile picture. Each and every tweet from the account that BuzzFeed News viewed mocks her or those close to her, including crass tweets about family members transitioning, and harassing tweets about children.

Twitter’s hateful conduct policy explicitly states that “we also do not allow accounts whose primary purpose is inciting harm towards others.” Despite her report to Twitter and a request from BuzzFeed News for Twitter to explain why the account is not in violation of Twitter’s rules, however, the account is still up. All of the accounts the sportswriter reported and shared with BuzzFeed News are still active.

Numerous other accounts reported for serial harassment are still active. @Trap4Von, for example, persists despite a number of abusive tweets including statements like, “i'll follow you home an [sic] leave my children inside of you.” Twitter’s hateful conduct policy explicitly forbids “violent threats,” noting that “you may not promote violence against or directly attack or threaten other people.”

Twitter did not respond to the 27 explicit examples of harassment on which BuzzFeed News requested comment. The company did, however, provide a statement via a spokesperson:

Twitter has undertaken a number of updates, through both our technology and human review, to reduce abusive content and give people tools to have more control over their experience on Twitter. We've also been working hard to communicate with our users more transparently about safety. We are firmly committed to continuing to improve our tools and processes, and regularly share updates at @TwitterSafety. We urge anyone who is experiencing or witnessing abuse on Twitter to report potential violations through our tools so we can evaluate as quickly as possible and remove any content that violates Twitter user rules.

But despite Twitter’s renewed commitment to anti-abuse tools, repeat victims of harassment are frustrated that Twitter’s harassment workflow often requires a cheat code of media involvement.

“There's no way to appeal to them and tell them why they got the decision not to remove tweets wrong, so people who are threatened basically have no choice but to go to someone with a bigger platform,” Maggie told BuzzFeed News.

Kelly Ellis — the software engineer — echoed Maggie’s frustration. “I often feel like, how are other users supposed to escalate stuff,” she said in a recent email. As a victim of continued harassment, Ellis has looked not only to the media, but to Twitter employees.

“I will even sometimes DM people I know there,” Ellis said. “One case that happens pretty frequently is if someone is harassing me with multiple accounts and all the reports will come back as Twitter saying it's not abusive. But then I talk to a friend at Twitter who says it definitely is and helps get it taken care of for me. There's some disconnect going on internally there with their training, I think.”

Just last month a Twitter engineer apologized in a string of tweets for harassment reports falling through the cracks of the company’s reporting system. The apology came after writer Sady Doyle posted a tweet about a troll with the handle @misogyny who’d once tweeted a threatening picture of a gun at Doyle. In her June tweet, Doyle posted screenshots showing that the troll had created a new account and bragged he’d “beat[en] the case” against him. Doyle then posted a screenshot of her plea to Twitter to ban the user alongside Twitter’s response — a form letter saying the account did not violate Twitter rules.

Doyle’s tweet was retweeted over 6,200 times. Shortly thereafter, Twitter reversed its ruling and suspended the user. On Twitter, Doyle’s followers lamented the company’s fickle enforcement. “Basically nothing is worth a ban, unless you get enough people tweeting about it,” one user replied.

The Twitter engineer followed with a sincere apology. “That's often because an employee spots it and internally escalates it. I hate seeing things like this; we have to do better. Sorry.”

Doyle tweeted back that she earnestly appreciated the escalation, but that — like many others — she was troubled by the need to have assistance from a third party.

“When this stuff happens to me, I have a platform, so it's easy to publicize — but other people aren't that lucky.”

Source: BuzzFeed, “Twitter Is Still Dismissing Harassment Reports And Frustrating Victims”
